WTF Bites

-

M$ brainworms

is this nonsense?

Finally, just simply having threaded replies like how they’ve always existed on the web isn’t solving the issue, it’s taking a shortcut just to check a box and crow that Slack can’t. (Hint: there’s probably a good reason they haven’t rolled out that functionality because getting it right for the modern age takes time.)

OK, I haven't used Slack or MS Teams, but...gah...I hate this guy just for using the (Hint: blah blah blah blah.) construction. It's "taking a shortcut?" TDEMSYR.

-

@boomzilla

Someone should tell this schlub that posting on Medium is "taking a shortcut to sounding well informed for the modern age". (Did I miss any spots on the buzzword bingo card he was playing from?)

-

@fbmac should have added a

but I was lazy and on mobile

-

@ScienceCat said in WTF Bites:

@fbmac should have added a

but I was lazy and on mobile

It's not too late! You still have a few minutes left on that edit window!

-

@Tsaukpaetra said in WTF Bites:

@ScienceCat said in WTF Bites:

@fbmac should have added a

but I was lazy and on mobile

It's not too late! You still have a few minutes left on that edit window!

Mobile just now showed me posts from 2hours ago. I don't want to know what happens if I try to edit a post.

-

@coldandtired said in WTF Bites:

Now I've got to the last episode where they are still censoring 'fuck' but this time letting 'cunts' go. What's the rule?

I don't think those fucking cunts even know the rules.

-

(Did I miss any spots on the buzzword bingo card he was playing from?)

You didn't break down any silos so I don't think I can take your post seriously.

-

Thought process:

- why in the fuck would you look for basic C++ tutorials ON YOUTUBE?

- wait... it's just a misspelling, isn't it?

- hold on... why would you search for that? If you can type that in you probably know how to count to fucking 10!

- it's... it's for your kid, isn't it? HEY, MORON! FUCKING MORON! IF YOU HAVE THE TIME TO LOOK FOR THIS SHIT ON YOUTUBE, YOU HAVE THE TIME TO TEACH YOUR CHILD HOW TO COUNT!

Fucking hell, people today...

NB: Just typing in `cout` is enough, I added the `t` because I was looking for something else and tyoped the thing that's now in my suggestions.

-

my browser's favicons got mixed up somehow

-

@Tsaukpaetra the actual WTDWTF icon had 5 in it, fb has 7

-

@Tsaukpaetra the actual WTDWTF icon had 5 in it, fb has 7

Sounds like grounds for a buffer overflow vulnerability...

-

Is there a more practical way when the caller can't predict how large the string will be?

If the caller can't predict how large the string will be, there ain't. However

Found a function that returns '0' for false or '1' for true

in this case it certainly can.

Then make the static memory thread-specific and have it so passing in a null or whatever into the function frees up the memory.

Making sure thread-local variables get destroyed is actually a huge problem. The C `thread` API does not have any callback on thread termination on many systems, so only the thread function can ensure proper destruction and that does not really need thread-local storage.

Not standard C, and I won't consider other languages because C is the only one where this discussion makes sense AFAIK

Indeed. All other languages simply pass newly allocated memory that the caller has to free. The only difference being that they can take care of that for the programmer.

-

@error

is a Scrum exercise?

-

All other languages

You can use preallocated memory in at least any language with pointers. Just allocate bool(true) and bool(false) statically and return that. That way, if the value of true or false changes at runtime, you can update it without having to recompile!

-

-

Despite appearances, I'm not @fbmac. I'm just someone who finds reading to the end a

to saying the same things as everyone else. ;)

-

@dkf you look like a villain by the picture

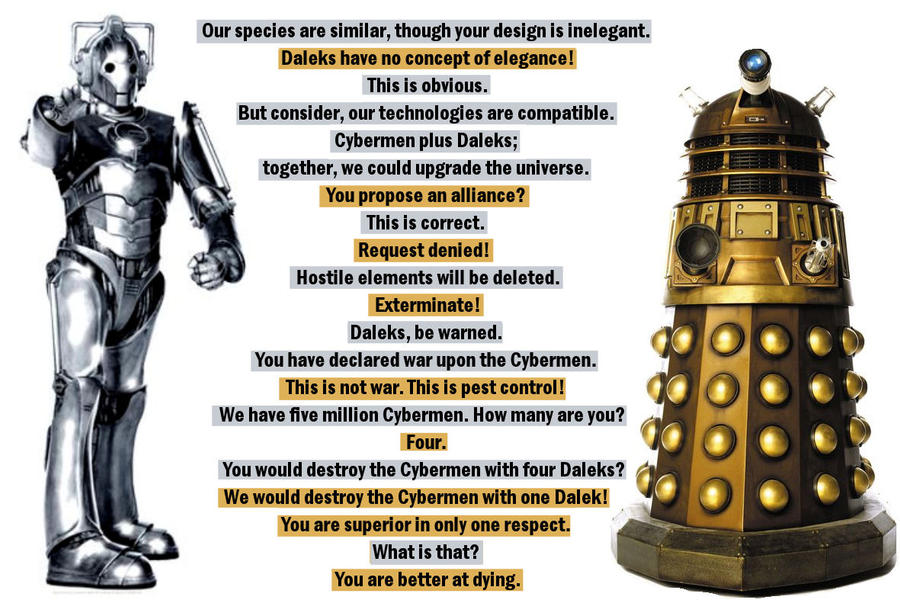

(Inb4 daleks are perfectly reasonable people)

-

Inb4 daleks are perfectly reasonable people

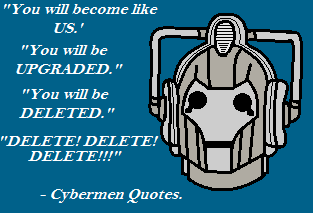

Isn't it the Cybermen who go around deleting people?

-

Alright, is it just my browser being weird or is anyone else having this problem?

Lately, when I open a video on Youtube, it seems that the site prioritizes loading the actual video over loading some of the page contents. This has been happening for quite a while, notably with comments; if the video wasn't fully loaded (buffered), the comments didn't load at all, and I'd have to scroll down until the comment section covered at least half of my screen to get them to start loading. Which was fine, I don't give a shit about youtube comments.

The problem is that recently, it seems that they did the same with the fucking menu. Starting maybe two weeks ago, if I open a video and then I want to go to my subscriptions or whatever is accessible from the hamburger menu in the top left corner, I click the hamburger... and nothing fucking happens, because the piece of shit only starts loading it then (despite the fact that most of the contents are static). Or rather it acts like it was supposed to start loading it, but never actually does, and I have to SCROLL DOWN TO THE FUCKING COMMENTS TO TELL IT TO ACTUALLY LOAD SOMETHING, AND THEN WAIT HALF A FUCKING MINUTE TO LOAD THE COMMENTS AND THE FUCKING MENU.

Jesus.

-

@blek For months they have been handling navigation in JavaScript like Discourse, and they load the video element first before all other parts of the page. If you get the YouTube+ browser extension, you can disable that functionality.

Disable SPF

SPF (Structured Page Fragments) will be disabled. This feature is most commonly known by the red loading bar that appears on the top of YouTube when changing pages and is used to provide a faster and less bandwidth consuming navigation for the user, turning it off will make YouTube pages open like new pages during navigation.

However, comments are an iframe and always load much later than the rest of the page in my experience.

-

Inb4 daleks are perfectly reasonable people

Isn't it the Cybermen who go around deleting people?

Is people a selection criteria?

-

comments are an iframe and always load much later than the rest of the page

Pity. They could save themselves and everybody else a lot of bother by not loading them at all.

-

@LB_ Thanks, I'll try that.

-

Isn't it the Cybermen who go around deleting people?

No, cybermen turn people into more cybermen. Daleks exterminate anything alive that isn't a dalek

-

Isn't it the Cybermen who go around deleting people?

No, cybermen turn people into more cybermen. Daleks exterminate anything alive that isn't a dalek

Well which one is famous for going around shouting "DELETE! DELETE!"? Aside from you.

-

Well which one is famous for going around shouting "DELETE! DELETE!"? Aside from you.

It's "EXTERMINATE! EXTERMINATE!" That's the Daleks. (No, Chrome, I did not mean to type "Sales.")

-

Isn't it the Cybermen who go around deleting people?

No, cybermen turn people into more cybermen. Daleks exterminate anything alive that isn't a dalek

Well which one is famous for going around shouting "DELETE! DELETE!"? Aside from you.

The modern Cybermen do sometimes do DELETE, DELETE, as well as going all Microsoft with YOU WILL BE UPGRADED.

-

Isn't it the Cybermen who go around deleting people?

No, cybermen turn people into more cybermen. Daleks exterminate anything alive that isn't a dalek

Well which one is famous for going around shouting "DELETE! DELETE!"? Aside from you.

The modern Cybermen do sometimes do DELETE, DELETE, as well as going all Microsoft with YOU WILL BE UPGRADED.

-

@error Good. Time to make everything public now.

As an afterthought, the list is about "tasks that to be performed", so given the word "hashed" in the name maybe it should be "unboxing"?

-

@error The above sentence not be good English too.

-

due to Stalking

?

due to Staffing

Nah, that's not it.

-

I just noticed that I have 6 followers on Github, 5 of whom I do not know and 4 of whom appear to be users in China.

-

We had some “fun” today. My current project is about working with some… funky… hardware that is designed to scale up rather larger than your average Intel-based system. It's instead based on a very low power version of an ARM core, and in particular, it lacks hardware floating point and memory protection and has a complex internal protocol for communicating between the cores on a chip, the chips on a board, and the boards that make up an overall computer. The plus side is that we have test systems with around two hundred cores on a desktop and can power the whole lot with a standard wall wart. :)

Anyway, enough scene-setting.

We were debugging this weather simulation (a cut-down proof of concept that we're doing to prove that porting the real thing to our production system — a monster with close to a million cores IIRC — is feasible). It was crashing during the launch of the program that we'd uploaded onto each core, during the boot up of the communications library. This was weird as the comms library had basically not done anything at all at that point, not even had the IRQs switched on. Some debugging later, and we found that we had a variable with an unexpected value in it; `-2` just isn't the same as `-1`. :(

Except the variable hadn't ever been assigned to at that point; it was getting the wrong value either from a compiler bug (!) or a runtime library bug (!). A quick scan of the binary itself cleared the compiler (the correct value was in the `DATA` section) yet if we put a `printf()` as the first statement in `main()` the variable had the wrong value; the runtime was definitely at fault despite the fact that it was in use in lots of other programs just fine.

In the end, we tracked it down to whether any part of the program included a very particular mathematical operation. It turns out that any program that has a divide in it is unable to communicate at all, tripping the whole comms library into a panic instead.

Why would a divide cause this? Well, remember that we don't have hardware floating point. Instead, we use fixed point arithmetic that is much faster on the hardware that we have except for divides which are criminally slow. (We also have a real numerical analyst on staff.) And it turns out that the implementation of the division code must do some sort of setup in the runtime (I had to leave for my train before we'd finished digging in that far) that scribbles over a part of memory that happens to be used by the comms code to store its current state, throwing that into a situation where it can only recover by crashing hard. (The system monitor — a dedicated CPU — then picks up the pieces, sending things like most of the contents of memory back to the development harness.)

All well and fine, but it's still a

moment when changing a `/` to a `+` in code that isn't run at all (but still linked in in a way that the compiler can't optimise out) changes your program from crashing to working. ;)

-

For a site called html.com, you'd think they'd at least be proficient at it.

Filed under: INB4 HTML != CSS

-

It's instead based on a very low power version of an ARM core

Just out of curiosity, if it's not confidential, what version? I've done some low-power ARM stuff, and I wonder if it's one I've used.

two hundred cores on a desktop and can power the whole lot with a standard wall wart.

That's a lot of cores, but with that little power, they must be running at really low clock frequency.

-

AS CENSORED BY CBS:

ass

hole

goddamn

reacharound

https://www.youtube.com/watch?v=YkJS0IzZREk

-

@HardwareGeek said in WTF Bites:

That's a lot of cores, but with that little power, they must be running at really low clock frequency.

They're all asynchronous logic, so they really take a lot less peak processing power; even with clock gating, standard CPUs don't reduce power consumption nearly so much. Our real goal is to make a big supercomputer that only needs a conventional datacenter power supply rather than something exotic.

-

on a very low power version of an ARM core

They're all asynchronous logic

woah... is this like AMULET!? Will clockless logic finally conquer the world and bless us with glitch prevention, digital design terror, and built-in EMI suppression?

-

woah... is this like AMULET!?

Yes. Evolution of that.

The system's current main function is to be a platform for simulating big neural networks in real time. (It appears to be impossible to do it with normal models of neurons running on current supercomputers; we know this because we're collaborating with people trying to do exactly that. But their stuff is able to be used to — eventually — validate ours. Provided they get the numerics right…) However, we've got a mandate to expand to being a more general purpose system; the weather model stuff I was mentioning is part of that programme, as the hardware we've built is all about doing relatively simple things massively in parallel. Not all problems are suitable for this approach. We also want to make a ~~database~~ key-value store that uses the hardware, but that's all at a much earlier stage.

I'm currently mostly working on the web services to drive the portal that'll be the front end to the main production deployment. I'm turning stuff that was written very much in a hurry by a colleague into something that's more robust. (The answer shouldn't be “launch ever more threads!” when you're programming in Java. Just sayin'…)

-

Today's WTF: Guy posts a conspiracy rant about how the media suppresses reports about a particular event.

He then decides to report about said report himself.

Using a quote from a newspaper.

-

They're all asynchronous logic

That's ... astonishing. I wasn't aware of any CPU since, like, the '70s that was based on asynchronous logic. It's at least an order of magnitude more difficult to design, and probably two orders of magnitude to verify. There are so many things that can go wrong — race conditions, hazards, oscillations...

For you software folks, imagine a massively multithreaded program, with no synchronization between the threads. Each thread is effectively a `while (true) { ... }` loop, so it will produce new output whenever an input changes:

    while (true) { c = a | b; }
    while (true) { d = c & q; }
    while (true) { a = !((q & r) | (x & y & !d)); }

You have to design (and test) the logic so that no matter the order in which those threads update `a`, `c` and `d`, they converge to a stable result (you don't effectively wind up with `a = !a`), and `a`, `c` and `d` all settle on the same final value. Now multiply that by a few million such threads. :head_asplode.gif:

In normal (synchronous) logic, you still have those millions of threads, but a lot of them look more like

    while (true) { if (sync_event) { a = ...; } }

It's much, much easier to break up the logic into little chunks that are controlled by these synchronization events (clocks — that's where, e.g., a CPU's clock speed comes from — how often these events occur). Threads still depend on other threads, but now the interdependencies are limited. A relatively small group of threads depends on results from other (groups of) threads, but those results are only visible when they are stable. There may be dependencies among the threads in this group, but they're acyclic (unless you done screwed up; something needs to be in another group), so they're guaranteed to converge to a stable value. The results from this group are only visible to other threads once they've converged and a synchronization event has occurred. Orders of magnitude easier to get this right. That's why (almost) nobody uses asynchronous logic any more (except for limited situations like, say, wake-on-LAN in really low-power modes, where even the wake-up logic may be asleep).
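The "does it converge regardless of update order" question can be poked at with a small simulation. This is only a rough Python sketch under stated assumptions: signals are modelled as 0/1 integers, each "thread" is re-evaluated in a fixed sweep order, and the names `RULES`, `OSCILLATOR`, `settle` and `final_states` are made up for illustration. Real asynchronous logic has continuous delays that a sweep model like this does not capture.

```python
from itertools import permutations, product

# The three "threads" from the post, written as update rules over a signal dict.
# Signals are 0/1 integers, so "!v" becomes "1 - v".
RULES = {
    "c": lambda s: s["a"] | s["b"],
    "d": lambda s: s["c"] & s["q"],
    "a": lambda s: 1 - ((s["q"] & s["r"]) | (s["x"] & s["y"] & (1 - s["d"]))),
}

# The degenerate case mentioned in the post: a = !a never stabilises.
OSCILLATOR = {"a": lambda s: 1 - s["a"]}


def settle(rules, signals, order, max_sweeps=16):
    """Re-evaluate the rules in `order` until a whole sweep changes nothing.

    Returns the settled signal dict, or None if the signals are still
    changing after `max_sweeps` sweeps (hypothetical cut-off).
    """
    s = dict(signals)
    for _ in range(max_sweeps):
        changed = False
        for name in order:
            new = rules[name](s)
            if new != s[name]:
                s[name] = new
                changed = True
        if not changed:
            return s
    return None


def final_states(rules, inputs):
    """Collect every outcome over all start values and all sweep orders."""
    names = list(rules)
    outcomes = set()
    for start in product((0, 1), repeat=len(names)):
        for order in permutations(names):
            s = settle(rules, {**inputs, **dict(zip(names, start))}, order)
            outcomes.add(None if s is None else tuple(s[n] for n in names))
    return outcomes


if __name__ == "__main__":
    # Outcomes are reported as (c, d, a) tuples; None means "did not settle".
    print(final_states(RULES, dict(b=0, q=1, r=1, x=1, y=1)))
    print(final_states(RULES, dict(b=0, q=1, r=0, x=1, y=1)))
    print(final_states(OSCILLATOR, {}))
```

With `q = r = 1` the rule for `a` is forced to 0, so every start value and sweep order ends in the same place. With `b = 0, q = 1, r = 0, x = y = 1` the three rules collapse into a ring (`c = a`, `d = c`, `a = d`), so the result depends on history and on the order, and some sweep orders never settle within the limit. That is the kind of case the design and test effort has to rule out.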

Whoever designed this asynchronous ARM processor is either an effing genius or batshit crazy. Or both. Yes, definitely both.

-

@HardwareGeek said in WTF Bites:

That's ... astonishing. I wasn't aware of any CPU since, like, the '70s that was based on asynchronous logic. It's at least an order of magnitude more difficult to design, and probably two orders of magnitude to verify. There are so many things that can go wrong — race conditions, hazards, oscillations...

It's not as hard as that, actually. The key thing was that effective decompositions have been found; there's a few, but one that's usually used (IIRC) is to represent each bit (at the data transmission level) with a pair of lines in one direction and a handshake line — or is it two lines? — in the opposite direction; a bit is transmitted by raising a signal on the line for 0 or the line for 1 (the driving logic guarantees that it won't raise both) and that's asserted until the receiving end asserts that everything's received. There's a few rather more specialised gates (e.g., the arbitrator, for deciding which driver to receive a signal from, which is genuine electronics black magic and IIRC not really expressible in NAND logic) but most are just the same old things repurposed in a different way. All IIRC, since I've not worked on the hardware side since 2002 (been off doing advanced web services ;)).
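The two-rails-plus-acknowledge idea can be sketched as a toy sequential model. This is only an illustration in Python, not the actual hardware protocol: it assumes a single acknowledge line and a return-to-zero step (both rails dropped before the next bit), neither of which the description above confirms, and the names `DualRailLink` and `send_bit` are hypothetical.

```python
class DualRailLink:
    """One bit-wide link: two data rails going forward, one acknowledge line back."""

    def __init__(self):
        self.rail0 = 0  # raised to signal a 0 bit
        self.rail1 = 0  # raised to signal a 1 bit
        self.ack = 0    # raised by the receiver once the bit has been taken


def send_bit(link, bit, received):
    """Walk one bit through an assumed four-phase handshake."""
    # The link must be idle before a new bit starts.
    assert (link.rail0, link.rail1, link.ack) == (0, 0, 0)

    # Phase 1: the sender raises exactly one rail, never both.
    if bit:
        link.rail1 = 1
    else:
        link.rail0 = 1

    # Phase 2: the receiver sees exactly one rail up, latches the bit, acknowledges.
    assert link.rail0 != link.rail1
    received.append(link.rail1)
    link.ack = 1

    # Phase 3: the sender sees the acknowledge and drops its rail (assumed return-to-zero).
    link.rail0 = link.rail1 = 0

    # Phase 4: the receiver sees both rails low and drops the acknowledge.
    link.ack = 0


if __name__ == "__main__":
    link = DualRailLink()
    received = []
    for b in (1, 0, 1, 1):
        send_bit(link, b, received)
    print(received)
```

Because the data itself says when it is valid (one rail up) and when it is not (both down), no clock is needed to tell the receiver when to sample.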

It turns out that the balanced logics that these things induce have a number of very interesting properties:

- Lower peak power consumption, since the fraction of each chip switching at once is so much lower.

- Lower peak noise.

- The balanced logics are virtually impossible to decode without the circuit diagram — many cells look effectively the same except for exactly where the wires route and they can even go in the deep parts of the silicon since they're not actually delay-critical — giving better protection of IP and secured data.

The tooling was a big issue back in the '90s. That's what I was working on back then. :)

Whoever designed this asynchronous ARM processor is either an effing genius or batshit crazy. Or both. Yes, definitely both.

I know those guys. “Demented geniuses” is a good term. (Especially for the guy who handles the actual main hardware design, who doesn't score anywhere on a graph of normality.)

-

one that's usually used (IIRC) is to represent each bit (at the data transmission level) with a pair of lines in one direction and a handshake line — or is it two lines? — in the opposite direction; a bit is transmitted by raising a signal on the line for 0 or the line for 1 (the driving logic guarantees that it won't raise both) and that's asserted until the receiving end asserts that everything's received.

Ok, but how do you prevent those two bit lines from glitching as the inputs change? Actually, thinking about it more as I wrote the rest of this, I have an inkling of an idea that might work, but it's making my head hurt.

If there are any public papers you could link, I'd be interested in reading (or trying to read) them. However, my entire career has been dealing with synchronous logic (I vaguely remember designing one little piece of asynchronous logic since I got out of school almost 30 years ago), so it just might cause

.

-

@HardwareGeek said in WTF Bites:

If there are any public papers you could link,

We've got an entire website full of that stuff, as it happens.

That page and the ones it links to are rather old. The publications are now all at this page, and I've no idea how many of them are open access. (Most of the recent stuff is more about how to compute like a brain, so they're rather different, and many of the papers appear to be behind journal paywalls. :( I also can't fetch them for you as I'm not at work and haven't got the right sort of VPN set up.)

As I recall, the critical part is the Muller C element. These things are used pretty heavily, but I can't remember the details. (I think level-triggered logic is more stable than edge-triggered, but couldn't be certain. I think edge-triggered is used for memory though, as that's much faster. All IIRC. Take with a ton of pinches of salt.)
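For reference, the textbook behaviour of a two-input Muller C-element is small enough to model directly: the output follows the inputs when they agree and holds its previous value when they differ. A minimal Python sketch of that behaviour follows (the class name `CElement` and its `update` method are made up for illustration, and this says nothing about how the real chips wire these up):

```python
class CElement:
    """Two-input Muller C-element: follow the inputs when they agree, else hold."""

    def __init__(self, initial=0):
        self.out = initial

    def update(self, a, b):
        # Both inputs high -> output high; both low -> output low;
        # inputs disagree -> keep the previous output.
        if a == b:
            self.out = a
        return self.out


if __name__ == "__main__":
    c = CElement()
    for a, b in [(1, 0), (1, 1), (0, 1), (0, 0)]:
        print(a, b, c.update(a, b))
```

That hold-until-both-agree behaviour is what lets a stage wait for all of its inputs to arrive before moving on.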

it just might cause

.

Probably, yes. ;)

C-element - Wikipedia
