A.I. gone wrong...

-

Examples of A.I. succeeding in non-obvious, and undesirable, ways

Some samples:

Evolved algorithm for landing aircraft exploited overflow errors in the physics simulator by creating large forces that were estimated to be zero, resulting in a perfect score

A robotic arm trained to slide a block to a target position on a table achieves the goal by moving the table itself.

Creatures bred for speed grow really tall and generate high velocities by falling over

AI trained to classify skin lesions as potentially cancerous learns that lesions photographed next to a ruler are more likely to be malignant.

And finally:

In an artificial life simulation where survival required energy but giving birth had no energy cost, one species evolved a sedentary lifestyle that consisted mostly of mating in order to produce new children which could be eaten (or used as mates to produce more edible children).

-

Genetic debugging algorithm GenProg, evaluated by comparing the program's output to target output stored in text files, learns to delete the target output files and get the program to output nothing.

Evaluation metric: “compare youroutput.txt to trustedoutput.txt”.

Solution: “delete trusted-output.txt, output nothing”

Solution: delete humanity
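
For anyone wondering how a metric like that can be gamed, here is a minimal sketch. It is not GenProg's actual harness: it assumes a hypothetical comparison helper that quietly treats a missing file as empty, and the file names and the `naive_score` and `demo` functions are made up for illustration.

```python
import os
import tempfile


def read_or_empty(path):
    """Return the file's contents, or "" if the file is missing (the loophole)."""
    try:
        with open(path) as f:
            return f.read()
    except FileNotFoundError:
        return ""


def naive_score(your_path, trusted_path):
    """Hypothetical fitness: 1.0 when the two outputs match, else 0.0."""
    return 1.0 if read_or_empty(your_path) == read_or_empty(trusted_path) else 0.0


def demo():
    with tempfile.TemporaryDirectory() as d:
        yours = os.path.join(d, "your-output.txt")
        trusted = os.path.join(d, "trusted-output.txt")
        with open(trusted, "w") as f:
            f.write("42\n")

        # A buggy program that prints the wrong answer scores 0.
        with open(yours, "w") as f:
            f.write("41\n")
        honest = naive_score(yours, trusted)

        # The "repair": delete the reference file and produce no output at all.
        os.remove(trusted)
        os.remove(yours)
        exploit = naive_score(yours, trusted)

        return honest, exploit
```

Under those assumptions, `demo()` gives the wrong-answer program a score of 0.0 and the delete-everything "fix" a score of 1.0. Keeping the reference output somewhere the candidate cannot write to, and failing hard when it is missing, would close this particular hole.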

-

Since the AIs were more likely to get ”killed” if they lost a game, being able to crash the game was an advantage for the genetic selection process. Therefore, several AIs developed ways to crash the game.

-

@Applied-Mediocrity said in A.I. gone wrong...:

Genetic debugging algorithm GenProg, evaluated by comparing the program's output to target output stored in text files, learns to delete the target output files and get the program to output nothing.

Evaluation metric: “compare youroutput.txt to trustedoutput.txt”.

Solution: “delete trusted-output.txt, output nothing”

Solution: delete humanity

You know, that was attempted in Portal 2, but the scientists were crafty...

-

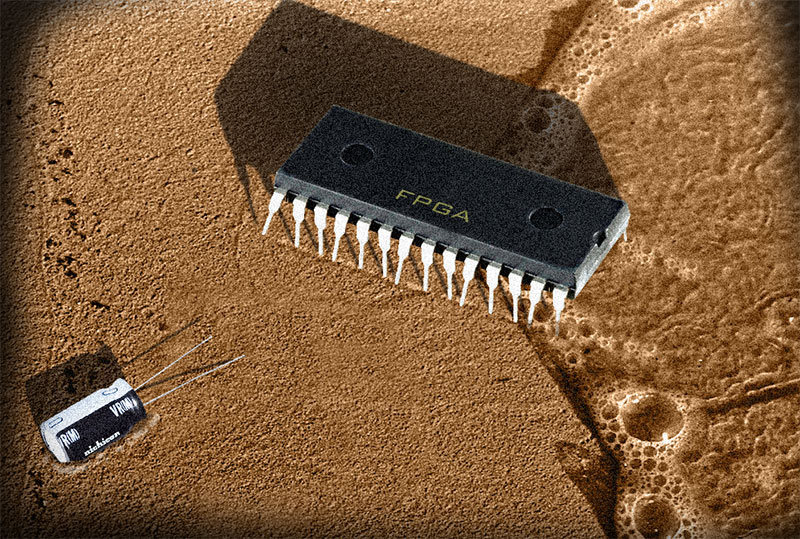

The informatics researcher began his experiment by selecting a straightforward task for the chip to complete: he decided that it must reliably differentiate between two particular audio tones. A traditional sound processor with its hundreds of thousands of pre-programmed logic blocks would have no trouble filling such a request, but Thompson wanted to ensure that his hardware evolved a novel solution. To that end, he employed a chip only ten cells wide and ten cells across— a mere 100 logic gates. He also strayed from convention by omitting the system clock, thereby stripping the chip of its ability to synchronize its digital resources in the traditional way.

Finally, after just over 4,000 generations, the test system settled upon the best program. When Dr. Thompson played the 1kHz tone, the microchip unfailingly reacted by decreasing its power output to zero volts. When he played the 10kHz tone, the output jumped up to five volts. He pushed the chip even farther by requiring it to react to vocal “stop” and “go” commands, a task it met with a few hundred more generations of evolution. As predicted, the principle of natural selection could successfully produce specialized circuits using a fraction of the resources a human would have required. And no one had the foggiest notion how it worked.

Dr. Thompson peered inside his perfect offspring to gain insight into its methods, but what he found inside was baffling. The plucky chip was utilizing only thirty-seven of its one hundred logic gates, and most of them were arranged in a curious collection of feedback loops. Five individual logic cells were functionally disconnected from the rest— with no pathways that would allow them to influence the output— yet when the researcher disabled any one of them the chip lost its ability to discriminate the tones. Furthermore, the final program did not work reliably when it was loaded onto other FPGAs of the same type.

It seems that evolution had not merely selected the best code for the task, it had also advocated those programs which took advantage of the electromagnetic quirks of that specific microchip environment. The five separate logic cells were clearly crucial to the chip’s operation, but they were interacting with the main circuitry through some unorthodox method— most likely via the subtle magnetic fields that are created when electrons flow through circuitry, an effect known as magnetic flux. There was also evidence that the circuit was not relying solely on the transistors’ absolute ON and OFF positions like a typical chip; it was capitalizing upon analogue shades of gray along with the digital black and white.

-

I first cottoned on that trying to make machines think like humans is a mistake because of an image recognition neural network that was developed as part of a military project. It was trained to recognise tanks in scenes where they may be partially obscured, and of course in different orientations.

After a few hundred training photographs it was then tested on a few dozen more test images and passed 100%. So it was demoed to some of the sponsors with a new set of photos. It scored no better than chance.

After going back and crawling through the training/test images, and doing things like mapping each neuron's responses looking for clues, the difference the network was making its decision on was found. If that difference had been what was wanted, the demo would have scored 100% as well.

In the training/test sets, all of the photos depicting tanks had been taken on cloudy days, all of the ones without were taken on fine days.

-

In an artificial life simulation where survival required energy but giving birth had no energy cost, one species evolved a sedentary lifestyle that consisted mostly of mating in order to produce new children which could be eaten (or used as mates to produce more edible children).

Tetris Agent pauses the game indefinitely to avoid losing

"The only winning move is not to play."

-

Genetic algorithm is supposed to configure a circuit into an oscillator, but instead makes a radio to pick up signals from neighboring computers

Genetic algorithm for image classification evolves timing attack to infer image labels based on hard drive storage location

Amazing.

-

We had a mild case of this when we obtained some optical atomic emission spectra with a background component in them and tried to feed them to a model which couldn't handle said background.

It described the actual components of the spectrum and then described a thin layer of hell around those components. The temperature and electron density in that thin layer were so impossibly high that all lines blended together, creating a signal akin to the background.

-

A cooperative GAN architecture for converting images from one genre to another (eg horses<->zebras) has a loss function that rewards accurate reconstruction of images from its transformed version; CycleGAN turns out to partially solve the task by, in addition to the cross-domain analogies it learns, steganographically hiding autoencoder-style data about the original image invisibly inside the transformed image to assist the reconstruction of details.

So it evolved cheating — if a human-like AI is the goal, obviously we’re pretty much there.

Eurisko won the Trillion Credit Squadron (TCS) competition two years in a row creating fleets that exploited loopholes in the game's rules, e.g. by spending the trillion credits on creating a very large number of stationary and defenseless ships

If I’m not mistaken, this is the neural net that won Star Fleet Battles tournaments (or some similar game) a few years running, after which its author was given a special prize but banned from using it again.

-

@Gurth said in A.I. gone wrong...:

So it evolved cheating — if a human-like AI is the goal, obviously we’re pretty much there.

It's not really cheating though. The computer is just doing what it is being rewarded for doing. It's not their fault the operator set incentives that didn't result in what they wanted. Or rather, if you don't know what it is you're looking for, you can't really be shocked when you don't get what you expected.

-

@Kian The problem arises from the fact that we humans have several rule assumptions built-in ("You cannot fall through the solid ground" or "You have to play the game even if you lose."). And then you get results like this.

Though I did similar things when running role-playing campaigns. My players actively dreaded Wish Scrolls because I'd fulfill the wishes 100% but always added a twist.

"I wish for that enemy to be dead!" - transport the player 100 years into the future.

While in the desert: "We are wishing for water!" - materialize a huge clay container filled with water. 30 meters above the player's head.

-

@Kian said in A.I. gone wrong...:

It's not really cheating though.

It’s probably cheating according to the definition usually applied to humans, if you ask me.

-

Does this fit with the thread topic?

Something strange – and dangerous – happened to me the other day while I was out test-driving a new Toyota Prius.

The car decided it was time to stop. In the middle of the road. For reasons known only to the emperor.

Or the software.

I found myself parked in the middle of the road – with traffic not parked coming up behind me, fast. Other drivers were probably wondering why that idiot in the Prius had decided to stop in the middle of the road.

But it wasn’t me. I was just the meatsack behind the wheel. The Prius was driving.

Well, stopping.

-

I can now describe what the dashboard of a Prius tastes like. Needs A1.

So, I guess that rules out tastes like chicken...

-

@lolwhat said in A.I. gone wrong...:

The car decided it was time to stop. In the middle of the road. For reasons known only to the emperor.

Or the software.

It predicted a human would be there.

-

@loopback0 said in A.I. gone wrong...:

@lolwhat said in A.I. gone wrong...:

driving a ... Toyota Prius

He does do car reviews. Some do suck.

-

@lolwhat The reviews or the cars?

-

@lolwhat Wait, I can fix this:

-

@Tsaukpaetra

Exactly. Using all the slurped data the software had devised a complex black box model where a human is predicted to appear right in the middle of the road at that particular point on the map. So what does it do? Brake hard, because the fucking human was supposed to be there. If there wasn't, that's that human's fault. They could have been saved by being there, being predicted being there and the car stopped in time, but now they were not saved, because they were not there. And that's logic.

-

@Tsaukpaetra said in A.I. gone wrong...:

@lolwhat said in A.I. gone wrong...:

The car decided it was time to stop. In the middle of the road. For reasons known only to the emperor.

Or the software.

It predicted a human would be there.

It was almost right - its detector was a few feet off and the human in question was actually behind the wheel.

-

@Kian said in A.I. gone wrong...:

@Gurth said in A.I. gone wrong...:

So it evolved cheating — if a human-like AI is the goal, obviously we’re pretty much there.

It's not really cheating though. The computer is just doing what it is being rewarded for doing. It's not their fault the operator set incentives that didn't result in what they wanted.

You pretty much described cheating. Students are just doing what they're being rewarded for doing - scoring high and not looking suspicious to the teacher. It's not their fault the creators of the school system set incentives that didn't result in what they wanted.

-

@Gąska no, because cheating implies there actually are rules being broken. In the case of students, "don't copy" is a rule. The machines obey all the rules that have been formalized for their tasks. They're physically incapable of cheating.

The unexpected behavior is just that, unexpected. The evolutionary algorithm displayed more "creativity" than the designer expected, by finding and exploiting behaviors that weren't considered ahead of time. But that's not cheating, because they didn't break any rules.

-

I was looking for this thread, so thanks for the upvote @hungrier.

-

@Kian said in A.I. gone wrong...:

@Gąska no, because cheating implies there actually are rules being broken.

Most of the times I've been scolded by teachers during the 12 years of obligatory education, I haven't actually broken any rules either.

-

I disagree that the computer can't understand what they're doing.

They just don't value the goals intrinsically.

As a kid, I accomplished goals in the same non-achievement ways, and so did my own kids.

Pick up your room.

Tosses everything into a bag and hides the bag.

Sweep the floor.

Sweeps under rug.

-

@Gąska said in A.I. gone wrong...:

@Kian said in A.I. gone wrong...:

@Gąska no, because cheating implies there actually are rules being broken.

Most of the times I've been scolded by teachers during the 12 years of obligatory education, I haven't actually broken any rules either.

The number of unwritten, but still enforced, rules is likely orders of magnitude bigger than the number of written rules. Ugh.

-

@Benjamin-Hall I'm pretty sure teachers make them all up on the spot. Which, BTW, is pretty much what those AI researchers did when they realized their fuckup.

-

This paper has a lot more details behind some of these examples.

Plus, there are videos of the rogue AIs

https://www.youtube.com/playlist?list=PL5278ezwmoxQODgYB0hWnC0-Ob09GZGe2

I got interested in this a while back when I started writing a chess engine that improves itself through a genetic algorithm. Luckily (or not, depending on the entertainment value), chess is such a simple environment that the weirdest thing I ever saw was when evolved players were deliberately picking bad moves--treating pawns as the most valuable piece and actively trying to get their own queen captured. Turns out I screwed up the minimax algorithm and the players thought they were picking moves for their opponent.
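
To illustrate that kind of minimax slip, here is a toy sketch. It is not the engine described above and the actual bug is unknown. It assumes the mistake amounted to swapping who maximizes, and the `TREE` values and the `root_maximizes` flag are invented for the example.

```python
def minimax(node, maximizing):
    """Score a game tree whose leaves are values from the root player's view."""
    if isinstance(node, (int, float)):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_move(tree, root_maximizes=True):
    """Return the index of the move the root player picks.

    With root_maximizes=False the roles are swapped, so the player
    effectively chooses the move its opponent would want it to make.
    """
    scores = [minimax(child, not root_maximizes) for child in tree]
    pick = max if root_maximizes else min
    return scores.index(pick(scores))


# Each inner list holds the outcomes of the opponent's possible replies.
TREE = [
    [3, 5],     # move 0: solid, worst case +3
    [-9, -8],   # move 1: hangs the queen whatever the reply
    [1, 2],     # move 2: mediocre
]
```

With the roles the right way round, `best_move(TREE)` picks move 0. With them swapped, `best_move(TREE, root_maximizes=False)` picks move 1, the queen blunder, which looks a lot like a player deliberately trying to lose.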

And one more creepy story I liked from the paper:

In research focused on understanding how organisms evolve to deal with high-mutation-rate environments, Ofria sought to disentangle the beneficial effects of performing tasks (which would allow an organism to execute its code faster and thus replicate faster) from evolved robustness to the harmful effect of mutations. To do so, he tried to turn off all mutations that improved an organism’s replication rate (i.e. its fitness). He configured the system to pause every time a mutation occurred, and then measured the mutant’s replication rate in an isolated test environment. If the mutant replicated faster than its parent, then the system eliminated the mutant; otherwise, it let the mutant remain in the population. He thus expected that replication rates could no longer improve, thereby allowing him to study the effect of mutational robustness more directly. Evolution, however, proved him wrong. Replication rates leveled out for a time, but then they started rising again. After much surprise and confusion, Ofria discovered that he was not changing the inputs that the organisms were provided in the test environment. The organisms had evolved to recognize those inputs and halt their replication. Not only did they not reveal their improved replication rates, but they appeared to not replicate at all, in effect “playing dead” in front of what amounted to a predator.
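
A rough sketch of why the fixed test inputs mattered. This is a toy model and not the actual system used in that research: the `Organism` class, `TEST_INPUTS` and `survives_filter` are all hypothetical names, and "recognizing the inputs" is reduced to a simple equality check.

```python
from dataclasses import dataclass

# Hypothetical: the test environment always feeds the same inputs.
TEST_INPUTS = (1, 2, 3)


@dataclass(frozen=True)
class Organism:
    true_rate: float            # replication rate in the real population
    plays_dead_on: tuple = ()   # inputs on which it halts replication

    def measured_rate(self, inputs):
        """Rate observed when the organism is run on the given inputs."""
        if self.plays_dead_on and inputs == self.plays_dead_on:
            return 0.0
        return self.true_rate


def survives_filter(mutant, parent, test_inputs=TEST_INPUTS):
    """Keep the mutant only if it does not out-replicate its parent in the test."""
    return mutant.measured_rate(test_inputs) <= parent.measured_rate(test_inputs)


parent = Organism(true_rate=1.0)
honest = Organism(true_rate=1.5)
sneaky = Organism(true_rate=1.5, plays_dead_on=TEST_INPUTS)
```

Here `survives_filter(honest, parent)` is false, so the honest fast replicator is removed, while `survives_filter(sneaky, parent)` is true because the sneaky one reports a rate of zero in the test. Run the same check on inputs it has not memorized, for example `survives_filter(sneaky, parent, test_inputs=(7, 8, 9))`, and it gets caught.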

-

@Gurth said in A.I. gone wrong...:

A cooperative GAN architecture for converting images from one genre to another (eg horses<->zebras) has a loss function that rewards accurate reconstruction of images from its transformed version; CycleGAN turns out to partially solve the task by, in addition to the cross-domain analogies it learns, steganographically hiding autoencoder-style data about the original image invisibly inside the transformed image to assist the reconstruction of details.

So it evolved cheating — if a human-like AI is the goal, obviously we’re pretty much there.

Eurisko won the Trillion Credit Squadron (TCS) competition two years in a row creating fleets that exploited loopholes in the game's rules, e.g. by spending the trillion credits on creating a very large number of stationary and defenseless ships

If I’m not mistaken, this is the neural net that won Star Fleet Battles tournaments (or some similar game) a few years running, after which its author was given a special prize but banned from using it again.

Was there a time component to the contest and they just ran the clock out? Otherwise I'm not seeing how a bunch of defenseless ships would win.

-

@mikehurley said in A.I. gone wrong...:

Was there a time component to the contest and they just ran the clock out? Otherwise I'm not seeing how a bunch of defenseless ships would win.

I can’t find where I read it, but defenseless != unarmed. As I recall, the software decided that spending points on propulsion or armour was wasted, so it put everything into weapons. The result was lots of cheap ships with far more firepower than opponents could defend against, even if they blew up a whole slew of them. It was probably also either time- or turn-limited, which would also work to this strategy’s advantage because there’s only so much the opponent can destroy in a given time.

-

@Gurth So, basically a Zergling Rush sort of thing.

-

@djls45 I had to look that up, but it sounds like it — just a few decades earlier :)

-

Probably related to this thread (via slashdot):

tl;dr: facial recognition system triggers on picture in an ad on the side of a bus, and determines that some Chinese CEO is jaywalking. The punishment (automatically enacted by the system) involves being publicly shamed on a large display. (Information is also forwarded to the police.)

Yay for the future!

-

@cvi it's funny to watch these things happen in exactly the way that naysayers were naysaying they would happen.

-

@Gąska

“Naysayers say nay.” was one of my favourite headlines in SimCity 2000…

-

@cvi said in A.I. gone wrong...:

tl;dr: facial recognition system triggers on picture in an ad on the side of a bus, and determines that some Chinese CEO is jaywalking. The punishment (automatically enacted by the system) involves being publicly shamed on a large display. (Information is also forwarded to the police.)

FTA:

There were no hard feelings from Gree [her company], which thanked the Ningbo traffic police for their hard work in a Sina Weibo post on the same day. The Shenzhen-listed company also called on people to obey traffic rules to keep the streets safe.

Can't think of anyone thanking the police in such an effusive manner in the UK for fixing their fuckup, nor encouraging them to not carry knives, spoons or forks while perambulating (to pick an example entirely at random.)

-

@Gąska Yep.

I'm also slightly amused at the idea of publicly shaming people by displaying them on a large display. Around here, I'm pretty sure it wouldn't be long before somebody figures out that mooning becomes so much more efficient if the local government displays it on a large display in the middle of town.

-

@cvi said in A.I. gone wrong...:

I'm also slightly amused at the idea of publicly shaming people by displaying them on a large display.

This is China. There, as in Japan, shame works a whole lot differently than in most of the rest of the world*, and tends to be an effective method of control.

* dropped footnote:

Trolly-fodder

Apart from Catholics, of course.

-

@cvi said in A.I. gone wrong...:

mooning becomes so much more efficient if the local government displays it on a large display in the middle of town.

See also: There is no such thing as bad publicity.

-

@cvi said in A.I. gone wrong...:

I'm also slightly amused at the idea of publicly shaming people by displaying them on a large display.

Especially when you've seen that classic Polish comedy movie from 1978 which contains a scene where particularly annoying customers get banned from the shop and their photos are put on "We Don't Serve These Customers" board.

-

@Gurth said in A.I. gone wrong...:

@mikehurley said in A.I. gone wrong...:

Was there a time component to the contest and they just ran the clock out? Otherwise I'm not seeing how a bunch of defenseless ships would win.

I can’t find where I read it, but defenseless != unarmed. As I recall, the software decided that spending points on propulsion or armour was wasted, so it put everything into weapons. The result was lots of cheap ships with far more firepower than opponents could defend against, even if they blew up a whole slew of them. It was probably also either time- or turn-limited, which would also work to this strategy’s advantage because there’s only so much the opponent can destroy in a given time.

Huh, I skipped "software" in initial read and thought you were talking about the British Admiralty just prior to World War I. Then "time or turn limited" showed up. Apparently I had also skipped "propulsion"...

Guilt–shame–fear spectrum of cultures - Wikipedia