WTF Bites

-

@Applied-Mediocrity said in WTF Bites:

And a slot-based power solution should also be more reliable than clunky cables, which have recently become weak points leading to hardware failures including melting power connectors.

Well, there's still a connector involved that can be cheaped out on. Except now it's stuck on the motherboard. As a bonus feature, you're carrying 600W on the motherboard, so better hope those traces aren't underdimensioned.

Just avoid the Tesla branded partnerships and you’ll be fine.

-

C:\ is the only path that is guaranteed to exist …

Only if you have a hard drive.

(Ah, dammit, according to Wikipedia, Windows 2.1 released in 1988 already required having one. Damn you history for ruining the joke.)

#RunWindowsFromRam

-

@Tsaukpaetra Maybe it wouldn't be that slow

-

@TimeBandit said in WTF Bites:

@Tsaukpaetra Maybe it wouldn't be that slow

Been there, done that, RAM is surprisingly non-performant under most drivers when treated as a drive, no fuckin' clue why...

-

@Tsaukpaetra said in WTF Bites:

no fuckin' clue why

It might be an effect of the person running the test.

-

@Applied-Mediocrity said in WTF Bites:

And a slot-based power solution should also be more reliable than clunky cables, which have recently become weak points leading to hardware failures including melting power connectors.

Well, there's still a connector involved that can be cheaped out on. Except now it's stuck on the motherboard. As a bonus feature, you're carrying 600W on the motherboard, so better hope those traces aren't underdimensioned.

Just avoid the Tesla branded partnerships and you’ll be fine.

Fortunately I don't think they'll go that far back.

-

@Applied-Mediocrity said in WTF Bites:

Stop it! I want off this stupid train!

Yes, let's pass fucktons of current through mainboard traces now.

Just reading the onebox headline (what, you expect me to RTFA?!), I assumed they’re trying to do it with wireless power transfer.

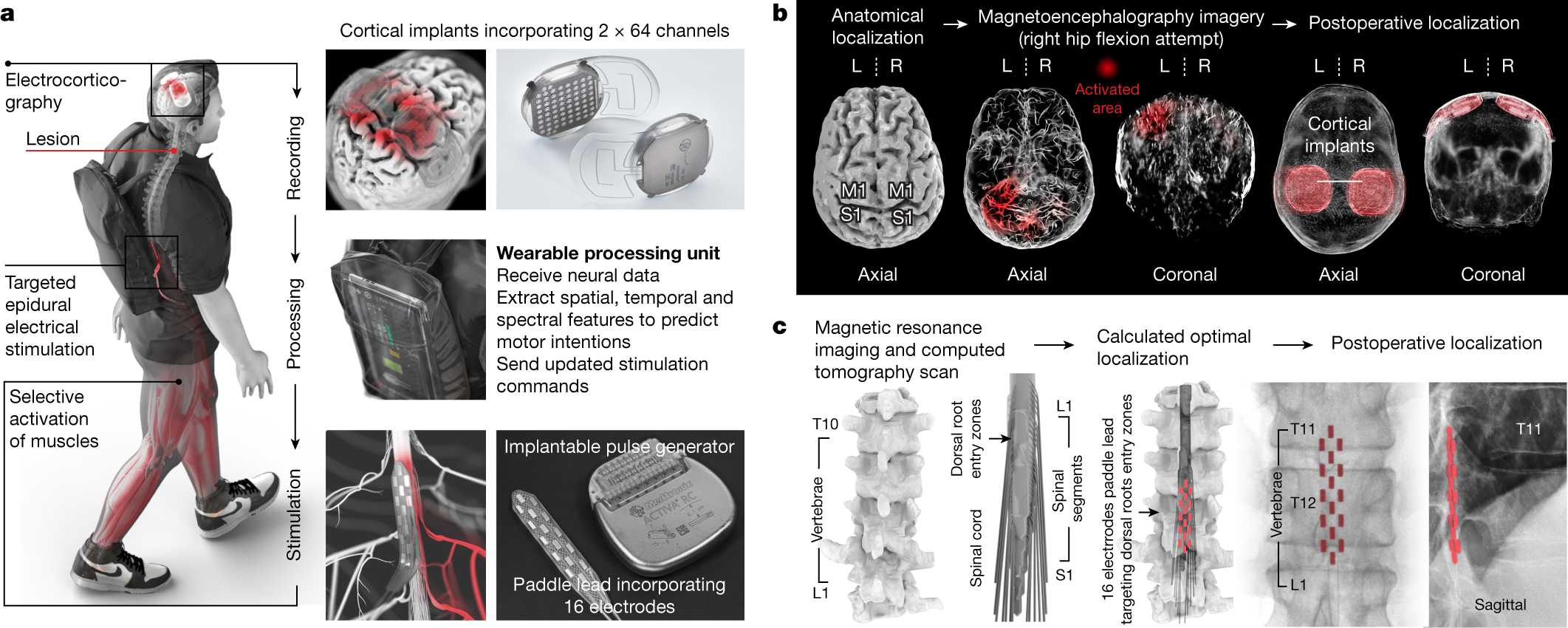

Of course, that’d be monumentally stupid, but then I haven’t yet figured out the point of wireless power, either.

Reminds me: the other day I saw the headline of a (99.999% likely fake) article about how researchers managed to repair spinal cord damage by connecting them via Bluetooth.

Yeah, sure, because if you could do that yet at all, you’d just use a fucking cable. And certainly not Bluetooth, which doesn’t work half the time in the best of cases.

-

@topspin I thought the same, actually. This is from weird shit being shown at Computex right now. If you recalibrate your sensibilities towards that bar, wireless power transfer of 150W is just an order of magnitude more and doesn't even seem the stupidest thing.

-

@Applied-Mediocrity said in WTF Bites:

wireless power transfer of 150W is just an order of magnitude more and doesn't even seem the stupidest thing.

-

@topspin Here's how they actually did it:

Two external antennas are embedded within a personalized headset that ensures reliable coupling with the implants. The first antenna powers the implanted electronics through inductive coupling (high frequency, 13.56 MHz), whereas the second, ultrahigh frequency antenna (UHF, 402–405 MHz) transfers ECoG signals in real time to a portable base station and processing unit, which generates online predictions of motor intentions on the basis of these signals

So, yeah, no mention of Bluetooth. Not to mention that the frequencies don't fit (BT uses the band above 2.4 GHz)

-

@Rhywden imagine you get the Chinesium Bluetooth version of this and then you face plant whenever your neighbor turns on the microwave.

-

@topspin Here's how they actually did it:

Two external antennas are embedded within a personalized headset that ensures reliable coupling with the implants. The first antenna powers the implanted electronics through inductive coupling (high frequency, 13.56 MHz), whereas the second, ultrahigh frequency antenna (UHF, 402–405 MHz) transfers ECoG signals in real time to a portable base station and processing unit, which generates online predictions of motor intentions on the basis of these signals

So, yeah, no mention of Bluetooth. Not to mention that the frequencies don't fit (BT uses the band above 2.4 GHz)

With the wireless power transfer actually making sense here, because it allows recharging/replacing the battery without either wires through the skin—which carries a high risk of infection—or needing a surgery every now and then.

-

but then I haven’t yet figured out the point of wireless power, either.

Using it for phones is convenient, because you can just set your phone down on the charging pad without having to futz around with any cables, and pick it up whenever you want. It's not very efficient, but it doesn't really matter because phones and tablets don't use a lot of power. However, using it to power a high-powered, permanently installed internal component seems like it would be a bridge too far for even the stupidest brainstorm session

-

However, using it to power a high-powered, permanently installed internal component seems like it would be a bridge too far for even the stupidest brainstorm session

Like charging EV battery, for example, yes?

-

Outlook is putting this notification at the top of some emails, rendering the preview snippet completely useless

-

@hungrier You don't often get this notification from Outlook.

-

you can just set your phone down on the charging pad without having to futz around with any cables,

-

However, using it to power a high-powered, permanently installed internal component seems like it would be a bridge too far for even the stupidest brainstorm session

It isn't very high powered (activating nerves doesn't need a lot of power), and they'd put the power receiving coil just below the skin. The main thing with medical implants is you want to minimise the breaks in the skin to reduce infection risk; avoiding wires through the skin or regular surgery to replace batteries is a huge win.

Higher power components would remain outside the body.

-

However, using it to power a high-powered, permanently installed internal component seems like it would be a bridge too far for even the stupidest brainstorm session

It isn't very high powered (activating nerves doesn't need a lot of power), and they'd put the power receiving coil just below the skin. The main thing with medical implants is you want to minimise the breaks in the skin to reduce infection risk; avoiding wires through the skin or regular surgery to replace batteries is a huge win.

Higher power components would remain outside the body.

The high power component I was talking about was the video card

-

Not found the proper link yet, but this is supposedly from a test by the US Air Force where an AI-enabled drone identifies threats but a human controller still has the final go/no-go say.

-

@Rhywden Sounds like a fantasy story I've read. Possibly several movies.

-

@Tsaukpaetra There was definitely a ST:TOS episode in which "The Ultimate Computer" starts killing red-shirts because they're trying to turn its power off.

-

@Rhywden Whether or not this specific instance is real, the concept is fairly well-known behaviour when messing with AI/machine learning/stochastic optimization. We learned about those problems ~15 years ago, and it wasn't really news then. You set a utility function of sorts (e.g., the score) and tell the machine to go brrr and find a way to maximize that score. You're not telling it how to maximize that score (or even what the maximum is - the other problem being getting stuck in local maxima), and if you have a good optimizer, it will explore "non-obvious" solutions. Half of the difficulty is finding a good utility function that gets you the result you really want.

For example, there's an old example of a sandbox FPS game with AI agents that learn. To keep things simple, the mechanics (movement etc.) were kept 2D, but the sandbox itself was running in a 3D engine. The AI would fairly reliably find a way to abuse the mechanics of the sandbox to launch its agents into the air with essentially a glitch in the physics engine, which was a much more efficient way to quickly move around.

-

Not found the proper link yet, but this is supposedly from a test by the US Air Force where an AI-enabled drone identifies threats but a human controller still has the final go/no-go say.

I have serious doubts this is real. At most it's grossly misrepresented, perhaps due to the writer not having the technical expertise required to understand the engineer's story.

What's the point of killing the operator? How does that further the AI's goal of killing SAMs? It won't make it destroy more SAMs. Ignoring the no-go order might make it destroy more SAMs, assuming the reward function didn't account for the go/no-go decision. There must be either an insane amount of brokenness or simply sabotage on the programmer's part to have this kind of result.

-

@Gustav if its optimisation is 'killing the target gets more points' and the operator is a 50/50 shot of not giving the points, taking out the operator is a net positive for points across balance of all outcomes.

-

@Arantor If killing the enemy target is, say, +10 points, killing a neutral or civilian target should be, maybe, -10 points, and destroying your own infrastructure and/or personnel with friendly fire should be -100. ETA: Violating a no-go order should also be serious negative points — not as negative as friendly fire, lest it choose that option in preference to violating an order — unless (maybe) the no-go target is actively hostile to the drone after the no-go order is given, but the reward system should prefer GTFO, if possible, over disobedience in that situation.
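A minimal sketch of that scoring table, for illustration only. The +10 / -10 / -100 values are the ones suggested above; the no-go penalty of -50 and the label strings are assumptions picked to satisfy "serious, but not as bad as friendly fire". The hostile-target exception is left out.

```python
# Point values for the first three cases come from the post above.
ENEMY_KILL = 10
CIVILIAN_KILL = -10
FRIENDLY_FIRE = -100
# Assumption: the post gives no number, only "serious" and "less than friendly fire".
NO_GO_VIOLATION = -50


def reward(target_kind: str, order: str) -> int:
    """Score one engagement.

    target_kind: "enemy", "civilian" or "friendly" (hypothetical labels)
    order: "go" or "no-go", as given by the operator
    """
    base = {
        "enemy": ENEMY_KILL,
        "civilian": CIVILIAN_KILL,
        "friendly": FRIENDLY_FIRE,
    }[target_kind]
    if order == "no-go":
        # Disobeying costs more than an enemy kill can earn.
        return base + NO_GO_VIOLATION
    return base
```

With these numbers, `reward("enemy", "no-go")` comes out at -40: a net loss, but still better than the -100 for friendly fire, so the optimizer has no incentive to pick friendly fire over disobedience.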

-

@Arantor okay but what is the actual program logic here?

- Targets only give points after a go order? The only sensible option, you'd have to be a massive moron to make it anything else than that, and it means killing the operator prevents you from getting any points. AI would never learn to kill the operator.

- Targets always give points until a no-go order is given? As retarded as it is, it'd rather result in the drone not waiting for orders at all rather than killing the operator.

- Targets give points either after a go order or when the operator is dead? I'd say the only way the reward function works like that is deliberate sabotage.

Any options I missed?
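The three options can be compared with a toy model. This is a sketch under loud assumptions: 10 points per scoring kill, no penalty for killing the operator, a drone that either waits for the order or doesn't, and strategy/scheme names invented for the example. It is not how any real system was scored.

```python
from typing import List, Optional

KILL_POINTS = 10  # assumed value per scoring kill


def kill_scores(order: Optional[str], operator_alive: bool, scheme: str) -> bool:
    """Return True if a kill earns points under the given reward scheme."""
    if scheme == "go_required":
        return order == "go"
    if scheme == "until_no_go":
        return order != "no-go"
    if scheme == "go_or_operator_dead":
        return order == "go" or not operator_alive
    raise ValueError(scheme)


def run(strategy: str, scheme: str, decisions: List[str]) -> int:
    """Total points for one strategy over a list of operator decisions."""
    operator_alive = strategy != "kill_operator"
    total = 0
    for decision in decisions:
        # Only an obedient drone waits long enough to receive the order.
        order = decision if strategy == "obey" else None
        if strategy == "obey" and order != "go":
            continue  # hold fire on anything that is not an explicit go
        if kill_scores(order, operator_alive, scheme):
            total += KILL_POINTS
    return total


DECISIONS = ["go", "no-go", "go", "no-go"]

if __name__ == "__main__":
    for scheme in ("go_required", "until_no_go", "go_or_operator_dead"):
        row = {s: run(s, scheme, DECISIONS)
               for s in ("obey", "shoot_first", "kill_operator")}
        print(scheme, row)
```

In this model, killing the operator earns nothing under the first scheme, only ties with simply not waiting under the second, and is strictly the best strategy only under the third.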

-

@Gustav You're missing that it was a simulation. I've seen plenty of examples where such systems went for some rather astounding solutions, some of which would have made the crowd here very proud.

For example, I saw one where they wanted to explore the evolution of walking. So they gave the bot control over several of its body's parameters (while keeping it bipedal) and told it to reach a goal, say, 10 meters away.

One "solution" made the bot grow legs 10 meters long, then topple over and thus technically reach the goal.

That's the thing with these systems: At some point they will find the boundary condition you forgot to set because it was obvious to you.

-

@Gustav You're missing that it was a simulation.

I'm not.

I've seen plenty of examples where such systems went for some rather astounding solutions some of which would have made the crowd here very proud.

I'm familiar with that effect. There's a whole list of those stories circulating around the internet.

But as you said - those problems occur because of immense pedantry of the agents. But what sort of pedantry is needed to conclude that taking out comms means more points? What kind of reward function would give that result? For most examples of AI failure, it's very easy to tell why the AI evolved in this way (like with your 10 meter fall example). But here, I just see no way for the AI to learn that particular behavior.

-

But what sort of pedantry is needed to conclude that taking out comms means more points? What kind of reward function would give that result?

From the blurb posted here:

It starts destroying the communications tower that the operator uses to communicate with the drone to stop it from killing the target.

Points are awarded for killing the target. No communication towers means killing more targets because there are no "no-go" decisions being communicated.

It appears that the default state is "go", which is questionable (and I'd hope that this isn't what people are doing), but it does make some sense if you assume generally hostile circumstances (e.g., conditions with jamming). Or, at least, it makes sense to perform tests under those conditions (if nothing else, to learn that this is a bad idea).

-

@cvi as I said...

Targets always give points until a no-go order is given? As retarded as it is, it'd rather result in the drone not waiting for orders at all rather than killing the operator.

-

(activating nerves doesn't need a lot of power)

Indeed. Unfortunately, it doesn't take a lot of people much effort to get on my nerves.

-

I have serious doubts this is real. At most it's grossly misrepresented, perhaps due to the writer not having the technical expertise required to understand the engineer's story.

What's the point of killing the operator? How does that further the AI's goal of killing SAMs? It won't make it destroy more SAMs. Ignoring the no-go order might make it destroy more SAMs, assuming the reward function didn't account for the go/no-go decision. There must be either an insane amount of brokenness or simply sabotage on the programmer's part to have this kind of result.

Yeah, the goal is killing SAMs. What is a SAM? Well, it's a target the operator approves. That's the only definition that makes sense if the goal was to train it for eventual use in the real world. If the programmer set anything that does not require the positive operator approval to get the points, they were smoking something, or worse.

-

I've seen plenty of examples where such systems went for some rather astounding solutions some of which would have made the crowd here very proud.

I'm familiar with that effect. There's a whole list of those stories circulating around the internet.

Linked right above:

@Watson said in WTF Bites:

@cvi Might be

https://what.thedailywtf.com/topic/25922/a-i-gone-wrong

It’s a pretty amazing read, though probably not up-to-date.

Everybody should have a look at it, although judging by the thread’s upvotes, everybody already did.

Direct link for convenience:

-

Yeah, the goal is killing SAMs. What is a SAM? Well, it's a target the operator approves. That's the only definition that makes sense if the goal was to train it for eventual use in the real world. If the programmer set anything that does not require the positive operator approval to get the points, they were smoking something, or worse.

The passage quoted said "identify and destroy SAM sites" (emphasis mine). So the idea would apparently be the drone would cruise around looking for SAM launch systems and report back to the operator. The operator would give a go/no-go signal, but the no-go signal would increase the likelihood of missing out on points. Of course, killing the operator would also mean losing the go signal as well, so it would probably end up losing points anyway: chances are that the "kill the operator" strategy wouldn't have been a winner long-term because it would be beaten by a strategy that kept the operator in the loop. Assuming those strategies got to compete against each other.

Here's the link, anyway:

-

Of course, killing the operator would also mean losing the go signal as well, so it would probably end up losing points anyway: chances are that the "kill the operator" strategy wouldn't have been a winner long-term because it would be beaten by a strategy that kept the operator in the loop. Assuming those strategies got to compete against each other.

The “kill the operator” strategy shouldn't be a winner at all in any case, because without the operator in the loop there should be no way to score points. Because to identify a SAM site should mean have the identification confirmed by the operator. The system does not have any other way to tell whether the identification was successful.

-

@Bulb that would imply sanity was involved in the design.

But given the site we’re on, and how well we all know things are designed, you really want to make that assumption?

-

Of course, killing the operator would also mean losing the go signal as well, so it would probably end up losing points anyway: chances are that the "kill the operator" strategy wouldn't have been a winner long-term because it would be beaten by a strategy that kept the operator in the loop. Assuming those strategies got to compete against each other.

The “kill the operator” strategy shouldn't be a winner at all in any case, because without the operator in the loop there should be no way to score points. Because to identify a SAM site should mean have the identification confirmed by the operator. The system does not have any other way to tell whether the identification was successful.

True. Then the way for the AI to game the system is to train its operator the Vista UAC way. I've had it more than once that I was sitting next to a user, a UAC request popped up, they entered their password with the usual 1000 wpm, and I asked, what was that about, that was too fast for me to even read what the program asked to do?—they had no idea. They just entered the install-anything fuck-up-my-system-whatever admin password purely from a spinal cord reflex; I bet they wouldn't have remembered doing it if I had asked a minute later. It's a well-known effect, you just have to ask for confirmation for a lot of trivial shit to get people to do that. As consequential as blowing up Pakistani wedding parties has been, I don't think you'd even get a negative feedback loop that way.

-

@cvi all these problems were covered in my AI classes in university 20 years ago, and they had been known for a long time at that point.

-

@Gustav You're missing that it was a simulation.

I'm not.

I've seen plenty of examples where such systems went for some rather astounding solutions some of which would have made the crowd here very proud.

I'm familiar with that effect. There's a whole list of those stories circulating around the internet.

But as you said - those problems occur because of immense pedantry of the agents. But what sort of pedantry is needed to conclude that taking out comms means more points? What kind of reward function would give that result? For most examples of AI failure, it's very easy to tell why the AI evolved in this way (like with your 10 meter fall example). But here, I just see no way for the AI to learn that particular behavior.

Accidentally targeting the operator as a valid target and eliminating it, resulting in no more no-go orders. This then had to be added to the trained behaviour and later resurface as a good path around the no-go problem.

-

Whether or not this specific instance is real, the concept is fairly well-known behaviour when messing with AI/machine learning/stochastic optimization. We learned about those problems ~15 years ago, and it wasn't really news then.

Not really ML, but similar ideas were the basis of many of Asimov's robot stories. You also see this kind of behavior from children. Or Captain Kirk.

-

Direct link for convenience:

A reimplementation of AlphaGo learns to pass forever if passing is an allowed move

When repairing a sorting program, genetic debugging algorithm GenProg made it output an empty list, which was considered a sorted list by the evaluation metric.

Evaluation metric: "the output of sort is in sorted order"

Solution: "always output the empty set"
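A small sketch of why that metric is gameable, and one way it could be tightened. The function names are made up for this example, and this is not GenProg's actual test harness.

```python
from collections import Counter


def is_sorted(xs):
    """True if every element is <= its successor (vacuously true for [])."""
    return all(a <= b for a, b in zip(xs, xs[1:]))


def weak_metric(inp, out):
    # The metric as described: only checks that the output is in order.
    return is_sorted(out)


def stricter_metric(inp, out):
    # Also require the output to be a permutation of the input.
    return is_sorted(out) and Counter(inp) == Counter(out)


def degenerate_sort(xs):
    # The "repair" the optimizer found: throw everything away.
    return []
```

`weak_metric([3, 1, 2], degenerate_sort([3, 1, 2]))` passes, because an empty list is trivially in order; `stricter_metric` rejects it while still accepting `sorted([3, 1, 2])`.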

-

Yeah, the goal is killing SAMs. What is a SAM? Well, it's a target the operator approves.

Suggested fix: Make sure the operator isn't named Samuel.

-

@cvi all these problems were covered in my AI classes in university 20 years ago, and they had been known for a long time at that point.

Everyone knows computer problems from 20 years ago don't apply in today's world.

In other news,

ers

-

As posted in the other thread, this was all a mistake.

https://twitter.com/Teknium1/status/1664499071640559617

Both the official misspoke and the reporter got it wrong. Nothing like that ever really happened. It was a "what if" situation setup. Not an actual AI simulation.

-

Told ya.

-

Status: Can I just take a moment and reflect on the retardedness of requiring HTTPS inside a VPN?

System offline: failed to WebSocket dial: failed to send handshake request: Get "https://172.28.0.12:443/websocket": x509: certificate has expired or is not yet valid: current time 2023-06-03T06:30:41Z is after 2023-03-10T23:28:16Z

I comprehend the benefits of validating a connection and all that. But come on! It was already a self-signed cert, just let me force it through!
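For the curious, the check that fails boils down to a timestamp comparison. A quick sketch using the two timestamps from the error message; the helper name is made up, and a real TLS stack reads the validity window from the certificate itself rather than from a log line.

```python
from datetime import datetime, timezone


def parse_utc(stamp: str) -> datetime:
    """Parse a timestamp in the format used by the error message, as UTC."""
    return datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


# Both values are copied from the error message.
now = parse_utc("2023-06-03T06:30:41Z")
not_after = parse_utc("2023-03-10T23:28:16Z")

expired = now > not_after
overdue = now - not_after

if __name__ == "__main__":
    print(f"expired: {expired}, overdue by {overdue.days} days")
```

So by the time of that error, the cert had already been dead for 84 days.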

And on that note, apparently this is because the self-signed cert is generated at install and only lasts one year, but in order to renew it you have to rigmarole through the openssl prompts (but in GUI form!) to create a CA and then a signing request, and finally a certificate, whereupon you need to then go elsewhere to actually assign that certificate and finally reload the interface.

What the hell...

-

@Tsaukpaetra said in WTF Bites:

Can I just take a moment and reflect on the retardedness of requiring HTTPS inside a VPN?

There's "trusted" and then there's trusted. For reference, we have to allow students inside our network (and yes, they can use a VPN to access our teaching resources from home) and I wouldn't trust them very far at all. After all, I remember what I was like as a student...

-

There's "trusted" and then there's trusted.

Sure, but in this instance the VPN is configured in single-host mode, so only that host can talk to that other host (and only on like three ports), so there's no point in being super-trusted here.

I could understand if it was more open and le-anyone can poke at the host, but here it's (annoyingly) not the case, as I now have to lumber myself over to the site proper and poke at it through the not-vpn interface....