I, ChatGPT

-

@boomzilla said in I, ChatGPT:

Waiting for it to make shitposts for me.

The year is 2034. ChatGPT update 117.0 now can generate text with

in 110 different languages. WTDWTF posters refuse to update past version 5.0 as "that had all the features I ever needed"

-

@izzion said in I, ChatGPT:

The year is 2034. ChatGPT update 117.0 now can generate text with in 110 different languages.

Made possible by the introduction of the

Unicode codepoint the same year.

-

TBF... A lot of sap could be replaced by chatgpt and it would be about as useful.

-

@DogsB said in I, ChatGPT:

TBF... A lot of sap could be replaced by chatgpt and it would be about as useful.

Tree sap is more useful than either ChatGPT or SAP.

-

@dkf said in I, ChatGPT:

Tree sap is more useful

That's literally true if you're talking about boiled maple tree sap.

-

Screenshot if it failed to embed

-

@Zecc Nope thread is

.

-

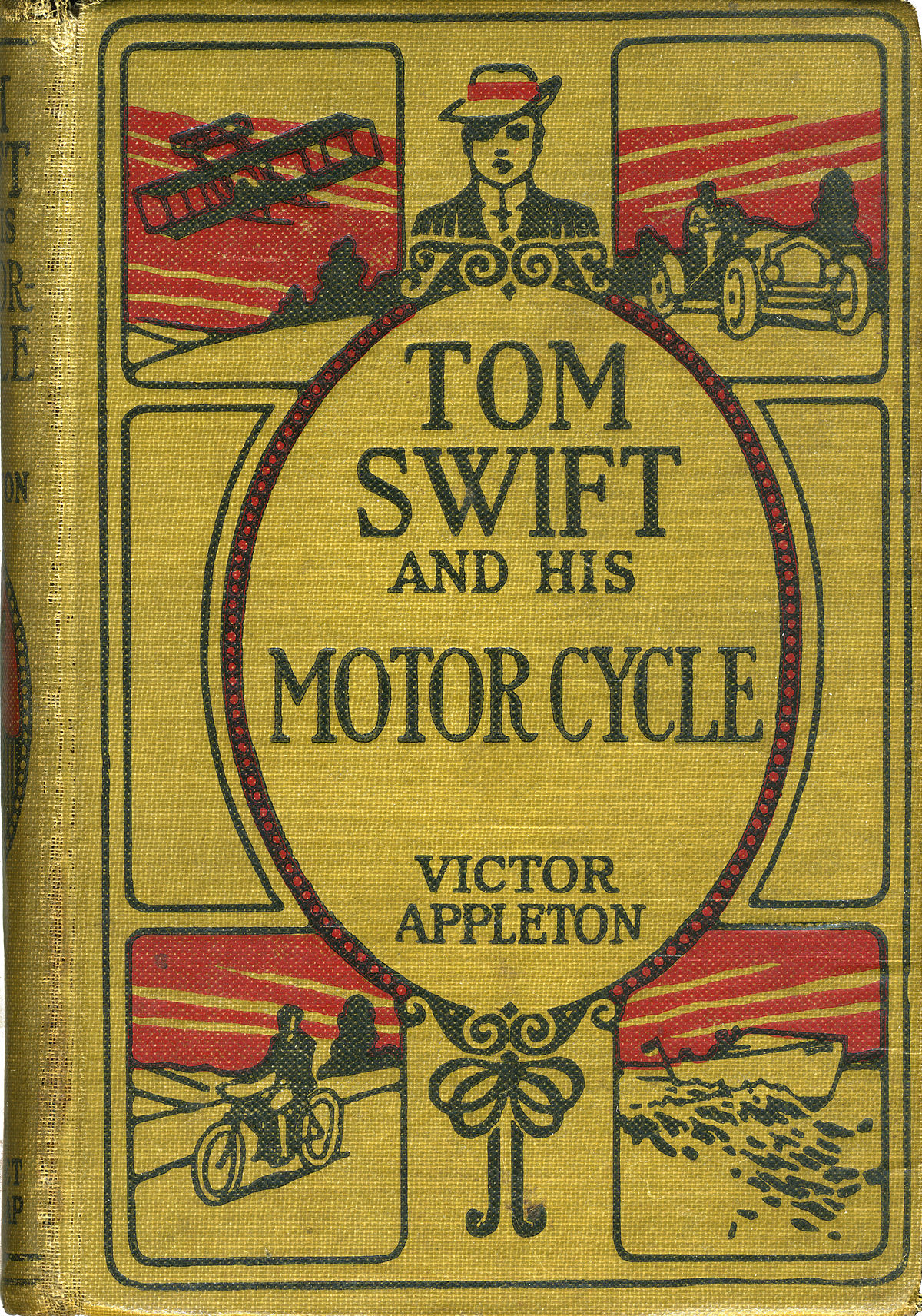

Swifties assemble!

-

@HardwareGeek said in I, ChatGPT:

@DogsB said in I, ChatGPT:

Swifties assemble!

33 volumes of the second series

33 volumes! I could barely get through seven of the boxcar kids. And that's just the second series.

-

@DogsB I don't know how many of them I read, but it was practically the only fiction I read for several years as a kid, from my very first access to the school library until I read all they had, and I had a shelf full of them at home, too.

-

@DogsB said in I, ChatGPT:

Swifties assemble!

Where? Purely for research purposes.

(I hope given the circumstances that's believable.)

-

@HardwareGeek said in I, ChatGPT:

@DogsB said in I, ChatGPT:

Swifties assemble!

From that article:

The name "taser" was originally "TSER", for "Tom Swift Electric Rifle". The invention was named for the central device in the story Tom Swift and His Electric Rifle (1911); according to inventor Jack Cover, "an 'A' was added because we got tired of answering the phone 'TSER'."

Answering the phone with "tosser" would indeed get old... except for the excessively young at heart.

-

@dkf said in I, ChatGPT:

The name "taser" was originally "TSER", for "Tom Swift Electric Rifle". The invention was named for the central device in the story Tom Swift and His Electric Rifle (1911);

By coincidence, at the moment you posted this, I was watching a court proceeding involving a case of resisting arrest in which a taser was deployed against the defendant. I had no idea the device, real or fictional, was that old. (And before anyone tries to give me grief about my

, that story was published almost 50 years before I was born, and I never read any Tom Swift stories that old. I think all the ones I ever read were contemporary at the time I was reading them, 1960s — maybe into the very early 70s, but I'd probably broadened my horizons into less juvenile fiction by the 70s. Actually looking at the beginning of TFA (I just grabbed the link previously, and didn't look at TFA itself), I see this was entirely within the second series, and probably mostly from the latter part of that series, although I do recognize the first few titles listed in the "Tom Swift, Jr." article as books that I owned or read.)

-

@boomzilla said in I, ChatGPT:

these things really are just advanced Markov generators

It is what it is. Much energy is spent on how to classify it, and on whether it deserves the word "intelligence".

GPT-4, a neural network with one trillion parameters, does many things that seem to require an understanding of things.

-

@sockpuppet7 Yet time and again things demonstrate no real understanding. They're dream engines.

-

@sockpuppet7 said in I, ChatGPT:

It is what it is. Much energy is spent on how to classify it, and on whether it deserves the word "intelligence".

They should ask ChatGPT.

-

@sockpuppet7 said in I, ChatGPT:

things that seem to require

That sufficiently sophisticated Chinese translation book makes it seem that way as well.

-

@boomzilla Maliciously sabotaging someone else's business is severely illegal. Nightshade is sabotage in the classic sense, not particularly different from throwing wooden shoes into machinery. Use with extreme caution if you use it at all!

-

@Mason_Wheeler Except... in this case it is a (meagre, probably low-effectiveness) technical barrier against the AI corps doing what they might be actually prohibited from doing anyway.

-

@dkf Who is prohibited from looking at something that is lawfully available to them and learning from it?

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Maliciously sabotaging someone else's business is severely illegal. Nightshade is sabotage in the classic sense, not particularly different from throwing wooden shoes into machinery. Use with extreme caution if you use it at all!

I would agree with you if they were attacking the scraping servers or something and corrupting their data. But I think this take of yours is super bad. This is leaving wooden shoes on your porch and having the machine owners take them and throw them into the machines themselves.

-

@boomzilla Not really. What it is is something that, in a slightly different context, you're quite vocally against: the active poisoning of education.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Not really. What it is is something that, in a slightly different context, you're quite vocally against: the active poisoning of education.

I'm impressed that you still come up with

takes like this, which is wrong in the same way as your wooden shoe analogy.

Unless the people doing this have made a deal with the AI people to supply them with "good" images and then swap them out for these, but there's no indication that's happening anywhere that I'm aware of.

-

@boomzilla And now we're back to my question to @dkf: why do the AI companies need such a deal in the first place? It's perfectly legal and reasonable to take material that is lawfully made available to you, study it, and learn from it. So perfectly legal that no one even thinks to question it; to do so at all is simply absurd.

And in Alice Corp. v. CLS Bank International the Supreme Court pointed out, quite reasonably, that simply adding "apply it with a computer" to a process does not magically turn it into a fundamentally different type of process. That would seem to be the most relevant piece of case law here. So, by what law or precedent does this notion that consent is required for learning arise?

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Maliciously sabotaging someone else's business is severely illegal. Nightshade is sabotage in the classic sense, not particularly different from throwing wooden shoes into machinery. Use with extreme caution if you use it at all!

Creating shitty content is, unfortunately, completely legal.

Creating shitty content for the purpose of honey-potting people who illegally scrape content ... is probably ineffective, but saying that's illegal is like a burglar suing you for eating moldy leftovers in your fridge. Extremely fucking retarded.

Besides that, the research is generally interesting, as adversarial images have been used before: e.g. change a single pixel in an image of a speed limit sign and the detected result changes completely.

-

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

Sure sure. And if someone posts these images on their website, together with an article describing the research, then the scrapers come along and incorporate that shit into their models without asking, they're violating copyright and you want to blame the researchers instead.

The fix is simple: stop using unlicensed images.

-

@Mason_Wheeler said in I, ChatGPT:

If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on ~~your~~ the car vendor's hands.

FTFY

This world is made for humans and if they can read the signs that is fine. If minuscule manufacturing defects will cause your self-driving car to do something so dumb then you should not be making self-driving cars.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

Sure sure. And if someone posts these images on their website, together with an article describing the research, then the scrapers come along and incorporate that shit into their models without asking, they're violating copyright and you want to blame the researchers instead.

The fix is simple: stop using unlicensed images.

Once again, the question no one wants to answer: where does any requirement exist to obtain a license for learning? This is not a copyright issue no matter how many people who have grown accustomed to using copyright as a magic hammer over the years may want to pervert it into one.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

Sure sure. And if someone posts these images on their website, together with an article describing the research, then the scrapers come along and incorporate that shit into their models without asking, they're violating copyright and you want to blame the researchers instead.

The fix is simple: stop using unlicensed images.

Once again, the question no one wants to answer: where does any requirement exist to obtain a license for learning?

Copyright.

The mere existence of copyright shows that human minds and computers are treated fundamentally differently under the law, no matter what some patent case law says. They may not be fundamentally different, but without treating them differently copyright would not exist.

-

@topspin

This is not a copyright issue no matter how many people who have grown accustomed to using copyright as a magic hammer over the years may want to pervert it into one.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin

This is not a copyright issue no matter how many people who have grown accustomed to using copyright as a magic hammer over the years may want to pervert it into one.

Just because you don't like the shitty consequences of the law doesn't mean you can ignore them.

-

@Mason_Wheeler said in I, ChatGPT:

You realize that it shows that you've edited your post?

Editing in a response to what I said after I replied to it and then pretending I ignored it doesn't give you grounds to .

-

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla And now we're back to my question to @dkf: why do the AI companies need such a deal in the first place? It's perfectly legal and reasonable to take material that is lawfully made available to you, study it, and learn from it. So perfectly legal that no one even thinks to question it; to do so at all is simply absurd.

Indeed. Though as mentioned in TFA, people are doing things like ignoring robots.txt. That happened here recently, in fact, from OpenAI.

And in Alice Corp. v. CLS Bank International the Supreme Court pointed out, quite reasonably, that simply adding "apply it with a computer" to a process does not magically turn it into a fundamentally different type of process. That would seem to be the most relevant piece of case law here. So, by what law or precedent does this notion that consent is required for learning arise?

In fact, I've argued exactly this point. And they're not preventing them from learning with this. They're just not getting what they want out of it. I'm sure the AI scrapers will figure this stuff out soon enough.

By your logic, however, if I put up really shitty art (because I suck at art) and the AI guys taught their AI to make shitty art I'm at fault for sabotaging them.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

You realize that it shows that you've edited your post?

Yes, immediately after I posted it, well before you wrote yours.

Editing in a response to what I said after I replied to it and then pretending I ignored it doesn't give you grounds to

.

If you were paying attention even the slightest bit, you wouldn't have missed it.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

But me posting a fucked up speed limit sign on my web page isn't the same as vandalizing a public sign no matter how badly you want us to believe it.

-

@boomzilla said in I, ChatGPT:

In fact, I've argued exactly this point. And they're not preventing them from learning with this. They're just not getting what they want out of it. I'm sure the AI scrapers will figure this stuff out soon enough.

Probably, but do we really want to set off an arms race in this space?

By your logic, however, if I put up really shitty art (because I suck at art) and the AI guys taught their AI to make shitty art I'm at fault for sabotaging them.

That's not my logic at all. Intent matters; the concept of mens rea is fundamental to law. Showing off low-quality work is one thing; intentionally using a tool that has no purpose other than sabotage is another entirely.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a perfect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

Sure sure. And if someone posts these images on their website, together with an article describing the research, then the scrapers come along and incorporate that shit into their models without asking, they're violating copyright and you want to blame the researchers instead.

The fix is simple: stop using unlicensed images.

Once again, the question no one wants to answer: where does any requirement exist to obtain a license for learning?

This is false. I categorically reject that this is the issue here.

This is not a copyright issue no matter how many people who have grown accustomed to using copyright as a magic hammer over the years may want to pervert it into one.

I agree but you're still totally off base with this sabotage bullshit.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

Where does the law create the exact distinction between learning and copying, then? How much of the original content is this "learned" content allowed to contain without being a derivative work? 10%? 50%? Everything but with a single bit difference?

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

In fact, I've argued exactly this point. And they're not preventing them from learning with this. They're just not getting what they want out of it. I'm sure the AI scrapers will figure this stuff out soon enough.

Probably, but do we really want to set off an arms race in this space?

Why not? Like other arms races, some interesting stuff will come out of it. I don't really have a dog in this fight so it's interesting to watch.

By your logic, however, if I put up really shitty art (because I suck at art) and the AI guys taught their AI to make shitty art I'm at fault for sabotaging them.

That's not my logic at all. Intent matters; the concept of mens rea is fundamental to law. Showing off low-quality work is one thing; intentionally using a tool that has no purpose other than sabotage is another entirely.

Fair, the analogy isn't perfect. But your claim is completely dumb. It's a burglar suing you because he broke his arm breaking into your house.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do.

Where exactly does the law say that I cannot put images on my web page that look like one thing to a human and like another thing to a machine?

-

@boomzilla said in I, ChatGPT:

Fair, the analogy isn't perfect. But your claim is completely dumb. It's a burglar suing you because he broke his arm breaking into your house.

Prejudicial language, assumes facts not in evidence.

Your analogy of the burglar breaking into your house assumes criminality, which is in fact the issue being debated here. For a more neutral analogy on the same grounds, look at booby-trapping your own property. This is quite illegal, because it could all too easily injure or kill lawful visitors (including emergency services) as well as the criminals you may have intended to catch.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

Where does the law create the exact distinction between learning and copying, then? How much of the original content is this "learned" content allowed to contain without being a derivative work? 10%? 50%? Everything but with a single bit difference?

What is the legal status of an art student painting a copy of an existing painting? (Hint: this is a non-hypothetical thing that art students do all the time in order to improve their skills.)

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

Where does the law create the exact distinction between learning and copying, then? How much of the original content is this "learned" content allowed to contain without being a derivative work? 10%? 50%? Everything but with a single bit difference?

What is the legal status of an art student painting a copy of an existing painting? (Hint: this is a non-hypothetical thing that art students do all the time in order to improve their skills.)

Depends on whether said art student is going to make money (or gain fame) with that copy, doesn't it?

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

Where does the law create the exact distinction between learning and copying, then? How much of the original content is this "learned" content allowed to contain without being a derivative work? 10%? 50%? Everything but with a single bit difference?

What is the legal status of an art student painting a copy of an existing painting? (Hint: this is a non-hypothetical thing that art students do all the time in order to improve their skills.)

Usually they're painting works in the public domain. Otherwise it's a derivative work according to the law, like it or not.

Now go ahead and define how much data has to differ from the original to be treated as learned instead of copying.
