This guy's ignorance of the OS he's using is pissing me off

-

The OS itself doesn't even see a difference between moving a file and renaming a file.

It does, actually. Renaming a file is a case of creating a new link, and unlinking the old one. Moving a file only needs to happen when the new link would be on a different filesystem, at which point there's a copy of the file's data before creating the new link.

Linux people … simply do not understand the concept that a file's name is metadata, not data.

That's not restricted to any one operating system; indeed, *n*x users are probably more likely to have come across the concept of hard linking files.
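For anyone who hasn't played with hard links, here is a minimal Python sketch of the "new link, then unlink the old one" idea, assuming a POSIX-style filesystem that supports hard links. The helper names `rename_via_links` and `demo` are made up for illustration.

```python
import os
import tempfile

def rename_via_links(old_path, new_path):
    """Rename by adding a second name, then dropping the first."""
    os.link(old_path, new_path)   # new directory entry pointing at the same inode
    os.unlink(old_path)           # remove the old name; the data is untouched

def demo():
    with tempfile.TemporaryDirectory() as d:
        old = os.path.join(d, "old.txt")
        new = os.path.join(d, "new.txt")
        with open(old, "w") as f:
            f.write("payload")
        inode_before = os.stat(old).st_ino
        rename_via_links(old, new)
        inode_after = os.stat(new).st_ino
        with open(new) as f:
            content = f.read()
        # Same inode under a different name: the name was only metadata.
        return inode_before == inode_after, os.path.exists(old), content
```

The inode number staying the same is the point: no file data moved, only the directory entries changed.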

-

What was being argued is that it makes sense to keep file metadata in that file,

A PGP signature would have to sign the entire file including all of its metadata, unless the metadata was useless. Therefore, the PGP signature cannot be part of the metadata.

-

Renaming a file is a case of creating a new link, and unlinking the old one. Moving a file only needs to happen when the new link would be on a different filesystem, at which point there's a copy of the file's data before creating the new link.

False. On a system call level, there is only rename(), and it doesn't work cross-filesystem. On the CLI level, there is only mv, and it works both within and across filesystems, the latter by falling back to a copy (although you can force single-filesystem with an option).

I think the distinction of in-directory rename versus cross-directory move is mainly valid on filesystems where the file's physical location is stored in the directory. On modern filesystems with global inodes or similar and hardlink support it's not relevant.
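A rough Python sketch of that fallback, for regular files only. It is not how any particular mv is implemented, just the general shape: try the rename, and if the kernel reports EXDEV (cross-device), copy and then unlink. The `move` function and its injectable `rename` parameter are hypothetical, added so the cross-filesystem branch can be exercised without a second filesystem.

```python
import errno
import os
import shutil

def move(src, dst, rename=os.rename):
    """Rename in place if possible; copy then unlink across filesystems."""
    try:
        rename(src, dst)
        return "renamed"
    except OSError as exc:
        if exc.errno != errno.EXDEV:
            raise                 # some other failure; don't mask it
    shutil.copy2(src, dst)        # copies data plus timestamps and permission bits
    os.unlink(src)                # only now does the old name go away
    return "copied"
```

A real tool also has to deal with directories, partial copies and ownership, which this sketch ignores.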

-

everything in the workflow has to support that ad-hoc standard or you risk losing metadata, data, links between the two, or all of the above.

Given that there are no standards for implementing multiple forks inside a filesystem, an ad-hoc naming relationship standard is actually less likely to cause trouble. And if the files so named always get packaged up together in some convenient archive format, so much the better.

-

Linux-using people don't understand that

Given that Unix and derivatives understand it a shitload better than Windows does (can't rename an in-use file in Windows) your claim strikes me as highly unlikely to be true.


-

Bullshit. All you're saying is "I'm afraid of change", and yet forcing change on people anyway.

-

I miss ResEdit

-

I just don't know how people survive without being able to delete in-use files and still use them.
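For reference, this is the behaviour in question, as a small Python sketch that assumes a POSIX system (on Windows the unlink would normally fail while the file is open). The function name `read_after_unlink` is made up.

```python
import os
import tempfile

def read_after_unlink():
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "w+") as f:
        f.write("still here")
        f.flush()
        os.unlink(path)                   # the name is gone...
        gone = not os.path.exists(path)
        f.seek(0)
        return gone, f.read()             # ...but the open handle still works
```

The data is only freed once the last open handle is closed.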

-

I know, right? Deleting an in-use file once stopped my house burning down, cured my Morgellon's and neutered my cat. It was awesome!

-

It has happened too many times that I had to close everything, hunting for whatever was blocking a directory or file I needed to delete or replace.

-

Such a boon to usability, too. It's so easy to explain to people.

-

It wouldn't be nearly as annoying if Explorer wasn't such a fucking asshole about locking a file to try to generate its thumbnail or index it for searching, and then not releasing it when I tell it to delete the file.

Bonus annoying points if the file so locked is on a USB drive that I asked Explorer to safely remove.

-

Just because someone I had no interaction with designed it a way they felt best doesn't mean

YMBNH. Oh wait, you are!

-

Yes! I am! But I appreciate all these learning moments telling me how much of an arse hat I am!

-

an arse hat I am!

What's an arse hat? I'm asking, cause I think I was actually telling how much Blakey is that, but I can't be sure.

-

But I appreciate all these learning moments telling me how much of an arse hat I am!

Don't worry, those won't cease even after you've been here a while.

-


Arse is ~~a fancy pants~~ the correct way of ~~saying~~ spelling ass. Unless you meant a donkey.

-

Arse is a fancy pants way of saying ass.

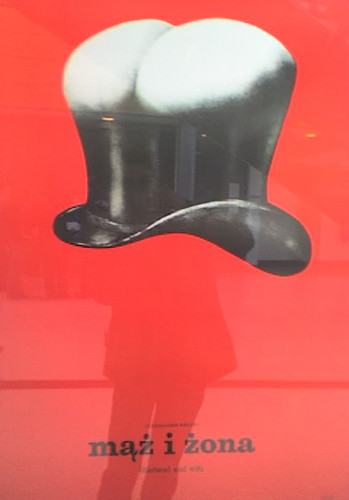

I know what arse is, it's the hat that's giving me a headache.

-

I know what arse is, it's the hat that's giving me a headache.

If you have your head up your own arse, you're wearing it like a hat.

-

If anyone here is still talking about alternate data streams / resource forks, I'd say the main problem is the lack of standardized APIs to support listing them. Just like the ISO C standard doesn't even have functions for listing files, the POSIX and C++11 standards (which do) don't offer anything for listing alternate streams and one has to rely on platform-specific stuff (FindFirstStreamW() on Windows, no idea on mac)...

-

How do you move a file like that to a FS which doesn't allow alternate streams?

When mounting a non-HFS file system, Apple typically uses a format known as [AppleDouble](https://en.wikipedia.org/wiki/AppleSingle_and_AppleDouble_formats) which stashes the resource fork and info (and any other named forks that might exist) in a separate file (whose name is prefixed with "._" to make it hidden to UNIX shells.) This was invented a long time ago for the A/UX environment and has since been revived for Mac OS X.
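The naming side of that is simple enough to sketch in Python. This only computes the companion file's name from the "._" prefix rule described above; it does not read or write the AppleDouble contents, and `appledouble_sidecar` is a made-up helper name.

```python
from pathlib import PurePosixPath

def appledouble_sidecar(path):
    """Return the '._' companion path that sits next to the given file."""
    p = PurePosixPath(path)
    return str(p.with_name("._" + p.name))
```

So a copy tool that wants to keep the fork data on a foreign filesystem has to carry both `report.doc` and `._report.doc` together.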

When preparing files for distribution, it is more common to combine the various forks and info into a single file. There were many different formats. Apple's A/UX (and almost nobody else) used AppleSingle. MacBinary was a very popular format. A text-encoded representation (conceptually similar to UU-encoded) called BinHex (with the ".hqx" extension) was also used. Today, Apple prefers to use disk images (the .dmg format) for distribution because of Mac OS X's extensive use of "packages" (directories that are made to look and feel like single files) for applications and documents.

Mac-based mail (and FTP and other related) clients would automatically use these representations to preserve resource fork data. Applications not designed with this capability would result in resource data getting stripped. Depending on the nature of the file, the result might be harmless (e.g. a plain text file), some data might be lost (e.g. a document that stores text in the data fork and formatting in the resource fork) or it might be catastrophic (e.g. an application, where almost everything is in the resource fork.)

It was actually part of the arrangement with IBM to make NT compatible with OS/2, believe it or not. I don't know enough about OS/2 to tell you what IBM planned to use it for...

OS/2 had its own concept of a "resource fork" for storing "extended attributes" with files. In general, these EAs stored things like custom icon data and position information for the Workplace Shell. They were sometimes used by applications, but they were almost never required for apps to function. When the HPFS file system is used, EAs are stored natively. When other file systems (like FAT or non-OS/2 network volumes) are used, every directory will contain a file named "EA DATA. SF" (the spaces are a part of the name) which holds the extended attributes for every file in that directory.

... Office does and run the .zip compression algorithm on a folder tree in a specific format. That seems to be the modern way of solving that problem.

That's a concept similar to what Apple did, starting with OS X. Applications and "bundle" files are folders with a flag set so they appear as single files in the Finder. Bundles aren't compressed, but it's still the same concept. It predates MS Office's ".foox" formats, but not by much.

I think the first time I saw this concept was the invention of .jar files for Java - which are, as I'm sure you already know, zip files containing compiled Java code/data.
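The "folder tree in a zip" approach is easy to try with Python's standard zipfile module. This is only a sketch of the general idea, not the layout of any real Office or jar file; `pack_tree` and `list_parts` are hypothetical helper names.

```python
import os
import zipfile

def pack_tree(folder, archive_path):
    """Store every file under folder in one zip, keeping relative paths."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(folder):
            for name in sorted(files):
                full = os.path.join(root, name)
                zf.write(full, os.path.relpath(full, folder))

def list_parts(archive_path):
    """Return the member names, i.e. the 'forks' of the single-file document."""
    with zipfile.ZipFile(archive_path) as zf:
        return sorted(zf.namelist())
```

Keep the archive outside the folder being packed, otherwise the walk may pick up the half-written archive itself.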

-

On the other hand, MacOS arguably misused resource forks as well. I would argue that an application is defined by its CODE resources, for example.

Can you really call it misuse when the feature was used in this capacity in the very first release of the software? Sounds to me like this was the intended use all along and discussions about "metadata only" are something that was invented many years later by people whose experience was on other platforms.

You went so far as to design an APPL file so forward-thinking that even the actual binary code itself could be easily swapped-out in a Resource-- and then when it came time to switch CPUs, you didn't use that capability!? Idiots.

Even worse, when it came time to switch architectures again (Mac OS X), they switched to the Mach-O format (from NeXT) - using yet another incompatible executable format. And then broke it again in 10.6 with a not-backward-compatible change to Mach-O. At least they didn't abandon Mach-O when transitioning to x86 and then x86_64....

A file's name is not its identity. It's merely a piece of metadata, a textual label (potentially one of many) that has been given to a collection of related data. Likewise, a filename suffix is an anachronistic, but sadly widespread and seemingly unkillable, representation of another piece of metadata

Another area where Apple was ahead of the curve. The Mac APIs (but not the C or UNIX ones) use a file-reference object for accessing files. This object has changed over the years and has been replaced with new-but-similar objects a few times, but the concept is simple - it identifies files and can track files as they are moved and renamed by storing its ID in multiple ways. Originally, it would store a path string (as a last resort, if all else fails) along with a volume ID and a file ID (conceptually like an inode number.) Later versions include proprietary URL data and other representations. Ideally, these objects are created via a file open/save dialog, by drag/drop of a file onto the app, or by double-clicking an associated document. Apps that persist the object instead of a name/path can usually locate the same file in the future even if it moves or gets renamed (within certain limits, of course.)
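To make the idea concrete, here is a toy Python version. It is not Apple's API, just a sketch of the concept using POSIX `st_dev` and `st_ino` as stand-ins for the volume ID and file ID. The `FileRef` class and the `search_dir` parameter are hypothetical; a real implementation would ask the filesystem to look up the ID rather than scan one directory.

```python
import os
from dataclasses import dataclass

@dataclass
class FileRef:
    volume_id: int   # st_dev, standing in for a volume ID
    file_id: int     # st_ino, standing in for a file ID
    path: str        # remembered path, used only as a last resort

    @classmethod
    def from_path(cls, path):
        st = os.stat(path)
        return cls(st.st_dev, st.st_ino, os.path.abspath(path))

    def resolve(self, search_dir):
        # Prefer the IDs, so a rename inside search_dir is still found.
        with os.scandir(search_dir) as entries:
            for entry in entries:
                st = entry.stat(follow_symlinks=False)
                if (st.st_dev, st.st_ino) == (self.volume_id, self.file_id):
                    return entry.path
        # Fall back to the stored path if the IDs matched nothing.
        return self.path if os.path.exists(self.path) else None
```

An app that saved a `FileRef` instead of a bare path would still find its document after the user renamed it in the same folder.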

Ditto for associating files with apps. The original system of type- and creator-codes worked great. A file would be associated with the application that created it (or some other compatible app if the creator isn't available) without regard to its name. Mac OS X still supports this, but it's now only a fallback mechanism that is used only when other mechanisms (including name lookup) fail to find anything.

The idea of tracking the creator along with the type was especially genius. So you could have one HTML file owned by an HTML editor and another owned by your web browser. Double-clicking each one would load it into the app that created it. (Of course, you could always do an explicit drag-drop or file-open operation to open it in something else.) Today, all documents with the same name-extension open in the same app unless you create explicit per-file associations via the Finder (which creates yet another piece of file-association metadata.)
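The lookup order described here (creator first, then any app that handles the type) can be sketched in a few lines of Python. The four-character codes and app names in the usage below are invented for illustration, and `pick_app` is a hypothetical function, not anything from the Mac toolbox.

```python
def pick_app(creator, file_type, apps_by_creator, apps_by_type):
    """Prefer the creating app; otherwise any app that handles the type."""
    if creator in apps_by_creator:
        return apps_by_creator[creator]
    candidates = apps_by_type.get(file_type, [])
    return candidates[0] if candidates else None

# Made-up registry: two apps that both handle the same file type.
apps_by_creator = {"EDIT": "HTML Editor", "BRWS": "Web Browser"}
apps_by_type = {"TEXT": ["Web Browser", "HTML Editor"]}

opened_by_editor = pick_app("EDIT", "TEXT", apps_by_creator, apps_by_type)
opened_by_browser = pick_app("BRWS", "TEXT", apps_by_creator, apps_by_type)
opened_by_fallback = pick_app("GONE", "TEXT", apps_by_creator, apps_by_type)
```

Note that the file name never enters into it, which is the whole point of the scheme.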

In Apple's effort to be like the rest of the world, they abandoned a lot of this tech and therefore now suffer from the same problems that the rest of the world does - a document's type is identified by its name instead of explicit metadata, and applications frequently lose track of their own files if those files are moved or renamed.

If anyone here is still talking about alternate data streams / resource forks, I'd say the main problem is the lack of standardized APIs to support listing them. Just like the ISO C standard doesn't even have functions for listing files, the POSIX and C++11 standards (which do) don't offer anything for listing alternate streams and one has to rely on platform-specific stuff (FindFirstStreamW() on Windows, no idea on mac)...

There are two very well established APIs for this - one for Windows and one for Mac OS. The fact that ISO hasn't decided to invent their own version for POSIX or C doesn't mean there are no standards. It just means that there is no UNIX support and C programmers will need to use the appropriate OS-specific calls.
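Python's standard library shows the same OS-specific split. The sketch below uses `os.listxattr`, which Python only provides on Linux, and it lists extended attributes, the nearest Linux analogue, rather than NTFS streams or Mac forks. The wrapper name `list_side_data` is made up, and returning an empty list where the call is unavailable is my own choice for the sketch.

```python
import os

def list_side_data(path):
    """Return extended attribute names, or [] where they are unsupported."""
    if not hasattr(os, "listxattr"):   # Python builds without this call (non-Linux)
        return []
    try:
        return list(os.listxattr(path))
    except OSError:                    # missing file or filesystem without xattrs
        return []
```

Anything portable ends up looking like this: a thin wrapper that hides which platform call, if any, is behind it.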

-

if Explorer wasn't such a fucking asshole about locking a file to try to generate its thumbnail or index it for searching

Now, don't be leaving AV programs out of this.

Apple prefers to use disk images (the .dmg format)

Perhaps it's just me, but I look at .dmg and automatically read it as "damage". Not an extension I'd choose.

-

@anotherusername said:

if Explorer wasn't such a fucking asshole about locking a file to try to generate its thumbnail or index it for searching

Now, don't be leaving AV programs out of this.

I don't use an antivirus that contributes to that problem, so if you do then use a better one I guess.

-

I don't, but I've seen reports of such things often happening with various AV programs.

My main annoyance with my current AV is that it doesn't run itself as a low-priority process, so if it happens to decide to do a disk scan while I'm trying to use the machine I have to go and manually set it to low priority so other things can get some of the disk too.