It's tools all the way down!

-

Wrestling with the “devops” stuff here, I am getting a persistent feeling of being buried under a pile of tools. There are always multiple ways to do something, with just slightly different options where I'm never sure how they match.

For example, I am now trying to build CI for some Azure FunctionApps. There is a way to deploy them from Visual Studio (that's what the developer has been doing so far, and what we want to leave behind), there is a `func` utility and there is an ADOP task. The Visual Studio dialog has an option to delete other files in the destination (the developer, unfortunately, relies on this being off), but I didn't see a corresponding option in the ADOP task. But there is another option there: to install as a zip.

The deployment request (well, in Visual Studio it calls it as needed) needs the function to be published first. And it can be zipped. The ADOP task for publish has an option to zip the published package, though the `dotnet publish` command does not. And of course, the documentation does not say whether this just calls zip on the directory (it looks like it does). Because Microsoft documentation is, well, still like in that Helicopter joke.

Helicopter joke

So a guy flies a helicopter, but the weather is turning bad and fog starts to form and suddenly he's not sure where he is. But as he cautiously flies on, an office building appears from the fog, and fortunately there is somebody sitting by an open window. So he flies as close as possible and shouts at that person “Please, can you tell me where I am?” “In a helicopter!” comes the reply. So the pilot turns the helicopter around and in a couple of minutes lands at the nearest airport.

“How did you find it?” other pilots ask him after landing. “Well, from the response, which was completely accurate but completely useless, I concluded it must be the Microsoft offices…”

Also there is another way to publish the project: by running `msbuild` instead of `dotnet`. It is an msbuild project file underneath, so the latter almost surely runs the former. Except the options used seem to be black magic, or at least heavy wizardry. I've got some examples, from which I managed to get an incantation along the lines of

`msbuild something.sln -t:Build -p:DeployOnBuild=true -p:Configuration=Release -p:WebPublishMethod=FileSystem`

Strangely enough there is also a `Publish` target (`-t:Publish`), but I couldn't get that to work. And a pack of scent hounds probably couldn't find documentation for which values the SDK recognizes in the `WebPublishMethod` property, or any other properties for that matter. Well, I certainly couldn't.

And it's not like that problem was specific to Azure or Microsoft either. Kubernetes has even more tools built on top of it that almost, but not completely, solve the problem, and create as many new problems in the process as they solve.

Take for example `helm`. It is great when you need to write the same value to a bunch of places in the manifests. For example, the hostname a service should be visible at goes into the ingress (information for the reverse proxy to route that virtual host), once again in the TLS section of that ingress (specifying that the certificate shall be used for that virtual host) and once more in the synthesized `assets/config/config.json` because the OpenID Connect library can't just pick the origin (or the front-end devs are too lazy to synthesize the config object). But its way of working with the values, and of propagating them to sub“charts”, is so limited that the “charts” are not composable in practice anyway.

It also almost remembers what was deployed, which includes the version and the parameters, but it does not include where it got the “chart” from, so you almost, but not completely, can tell what is installed. So you end up with tools like helmfile and helm controller and kapp-controller and argo-cd.

Except that creates a frog-and-pole problem. The tools for installing stuff have to, themselves, be installed. Depending on the tool either on your workstation or into the environment. And somewhat obviously they can't install themselves. So to simplify installing one thing you end up installing a bunch of other things and at the end of the day you still have installation instructions as long as your arm.

Is there a way to get out of the maze of twisty little tools, almost, but not exactly, all alike, and not go either insane, raving mad or batshit crazy in the process?
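To make the helm point concrete, here is a minimal sketch (in Python rather than helm templates) of one hostname being written to the three places mentioned: the ingress rule, the ingress TLS section, and a front-end config file. The function name, the `-ingress`/`-tls` naming scheme and the `origin` key in the config are all hypothetical; only the Ingress field layout follows the `networking.k8s.io/v1` schema.

```python
import json


def render_for_host(hostname: str, service: str, port: int) -> tuple[dict, str]:
    """Return (ingress manifest, config.json text) built from one hostname."""
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress"},  # hypothetical naming scheme
        "spec": {
            # First use: the certificate is valid for this virtual host.
            "tls": [{"hosts": [hostname], "secretName": f"{service}-tls"}],
            # Second use: the reverse proxy routes this virtual host.
            "rules": [
                {
                    "host": hostname,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }
    # Third use: the front-end config; the "origin" key is an assumption.
    config_json = json.dumps({"origin": f"https://{hostname}"}, indent=2)
    return ingress, config_json


if __name__ == "__main__":
    manifest, config = render_for_host("app.example.com", "frontend", 80)
    print(json.dumps(manifest, indent=2))
    print(config)
```

The substitution itself is trivial; the pain described above starts when the same value has to reach several charts at once.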

-

@Bulb said in It's tools all the way down!:

The tools for installing stuff have to, themselves, be installed. Depending on the tool either on your workstation or into the environment. And somewhat obviously they can't install themselves. So to simplify installing one thing you end up installing a bunch of other things and at the end of the day you still have installation instructions as long as your arm.

As a totally-not-helpful comment, that's why Mavenized builds are so good in Java: they're easy to set up to obtain not just the libraries you need, but also the various bits and pieces of support tooling. Yes, you end up downloading the whole internet (or so it feels like) but it does mean that you can actually have your build-and-deploy smarts almost entirely in one place.

Unfortunately, this level of automation seems to scare a lot of people. Instead, they stop short and use a bunch of home-brewed tooling combinations, and that leads into the horrible mess that you know all too well.

Is there a way to get out of the maze of twisty little tools, almost, but not exactly, all alike, and not go either insane, raving mad or batshit crazy in the process?

Let there be one way to make a deployable artefact, and one way to deploy it. Make that work, and work reliably. Drop any tools that aren't contributing to doing it that way, even if it looks like they might be convenient. That at least limits the amount of parallel-ways-of-working and lets you keep a little sanity for later.

-

@Bulb said in It's tools all the way down!:

Wrestling with the “devops” stuff here, I am getting a persistent feeling of being buried under a pile of tools. There are always multiple ways to do something, with just slightly different options where I'm never sure how they match.

This sounds like an accurate description of every devops process and environment I’ve ever seen. The solution to any problem is more automation. It’s always more automation, more tools to learn and maintain. I’d swear half the devops folks do it solely to have new toys to play with, but the amount of bitching about the new tools makes me unsure.

Is there a way to get out of the maze of twisty little tools, almost, but not exactly, all alike, and not go either insane, raving mad or batshit crazy in the process?

Given that the last K8s deployment I touched had tools to build the files for Helm, to build the files… it really was tools all the way down.

The only advice I have is to try to apply the original Occam’s Razor: not multiplying your entities beyond necessity.

In my experience this means if you can write a small script or tool in place of introducing another actual tool, absolutely do that. The total surface of what needs to be managed and understood is smaller, even if some of it then has to be taught and documented.

People spout that “we’re using the industry tools” but they all seem to be used in varied ways even for notionally the same task such that any familiarity is either non-helpful or straight up counterproductive when transferring somewhere else.

If in doubt KISS. Devops folks would do fucking well to remember that. And sometimes, it is worth hammering out the odd rough edge from your whatever to make the CI process easier - something the devops folks tend to eschew in favour of complexity in the pipeline later.

-

@Arantor said in It's tools all the way down!:

it is worth hammering out the odd rough edge from your whatever to make ~~the CI process~~ everybody's life easier - something ~~the devops~~ folks tend to eschew in favour of complexity ~~in the pipeline~~ later.

FTFY

-

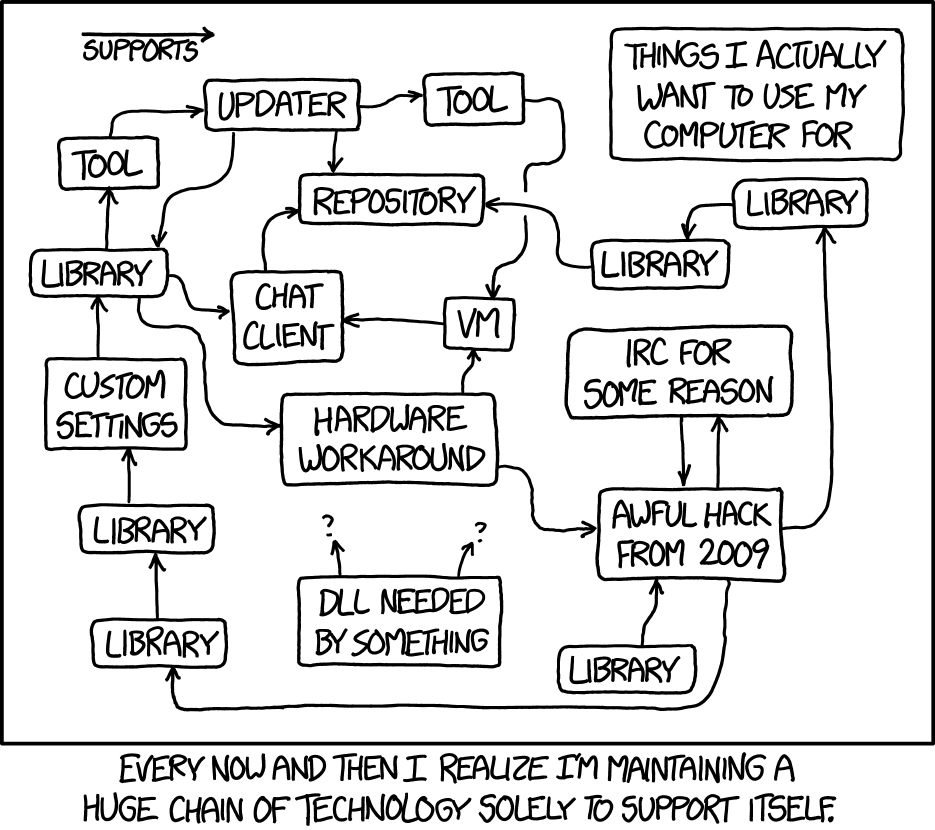

@error_bot xkcd tech loops

-

-

The sole purpose of the computing industry is computing industry.

-

@dkf said in It's tools all the way down!:

@Bulb said in It's tools all the way down!:

The tools for installing stuff have to, themselves, be installed. Depending on the tool either on your workstation or into the environment. And somewhat obviously they can't install themselves. So to simplify installing one thing you end up installing a bunch of other things and at the end of the day you still have installation instructions as long as your arm.

As a totally-not-helpful comment, that's why Mavenized builds are so good in Java: they're easy to set up to obtain not just the libraries you need, but also the various bits and pieces of support tooling. Yes, you end up downloading the whole internet (or so it feels like) but it does mean that you can actually have your build-and-deploy smarts almost entirely in one place.

That works well for the applications themselves, and maven isn't even best in that domain as it does not have an easy way to integrate custom build steps. gradle, npm or even dotnet (the last thanks to the msbuild underneath) are easier when you need to generate some source at the beginning of the build or even just collect some version info to link in the app.

Unfortunately, this level of automation seems to scare a lot of people. Instead, they stop short and use a bunch of home-brewed tooling combinations, and that leads into the horrible mess that you know all too well.

Unfortunately, a lot of the tools stop a bit short of actually solving the problem. My previous project was a service that runs on an embedded Linux. There are several target systems that have either ptxdist or yocto (bitbake) builds. These tools can pull all the dependencies, build everything and assemble the complete system image and it works fairly well… except the configuration is a bit mixed up, making it impossible to just check out a bunch of files and start working, so there was a (bash) script to set up the working directory.

Some of it was because the person setting it up didn't understand how the tool is supposed to be used, but some was because the tool simply does not offer a standard way to define the complete setup (mainly, yocto does not have a standard way to specify the set of layers, and some configuration goes in “local.conf” that is actually project-wide).

Is there a way to get out of the maze of twisty little tools, almost, but not exactly, all alike, and not go either insane, raving mad or batshit crazy in the process?

Let there be one way to make a deployable artefact, and one way to deploy it. Make that work, and work reliably. Drop any tools that aren't contributing to doing it that way, even if it looks like they might be convenient. That at least limits the amount of parallel-ways-of-working and lets you keep a little sanity for later.

There is one way to make a deployable artefact for each component. Generally some standard way of calling `docker build` when all the components can run in containers. Or whatever is a standard build command for their language if they don't.

But that still leaves a lot of configuration to tie it all together. Several layers of configuration, which is what makes it so hard. One part is generic, describing which components to install. But that needs the environment-specific (development (sometimes multiple)/test/staging/production) values filled in. So there is a tool that does that. Except it's complicated by multiple things:

- The thing may not be completely homogeneous: e.g. some resources are requested from, and managed by, a cloud provider, then there are services running under kubernetes, and maybe some running under another kubernetes on premise that for raisins need to live inside the intranet. So it isn't one tool, it is multiple tools.

- The environment-specific part of configuration can be versioned somewhere, except that's not really suitable for passwords, so you'd want to generate, propagate and update the passwords with some script, but due to the disparity of the tools involved, you probably have to cobble that together, which ends up changing one kind of complexity for another.

- Many of the tools are ugly hacks that do kinda-sorta the thing you want, but are just a nibble short of flexible enough. Like that helm thing: you can create a meta-chart that ties all your dependencies together, but you can't share the configuration between them, so it does not get you all the way where you'd like to be.

Cue 14 standards.
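As an illustration of the password bullet above, here is a minimal sketch of the "generate" half of such a script, assuming the passwords live in a plain name-to-value mapping kept outside version control. The function name and the mapping are hypothetical; propagating the values into each tool (cloud provider, kubernetes secrets and so on) is exactly the cobbled-together part and is not shown.

```python
import secrets


def ensure_passwords(existing: dict[str, str], names: list[str], nbytes: int = 24) -> dict[str, str]:
    """Return a copy of `existing` with a random password added for each missing name.

    Passwords that already exist are kept, so re-running the script for an
    environment does not rotate anything by accident.
    """
    result = dict(existing)
    for name in names:
        if name not in result:
            # token_urlsafe yields URL-safe text derived from `nbytes` random bytes.
            result[name] = secrets.token_urlsafe(nbytes)
    return result


if __name__ == "__main__":
    store = ensure_passwords({"db": "already-set"}, ["db", "broker"])
    print(sorted(store))  # names only; never print the values in a real pipeline
```

Keeping the function idempotent means the same script can run on every deployment, at the price of needing a separate, explicit path for rotation.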

-

@Arantor said in It's tools all the way down!:

@Bulb said in It's tools all the way down!:

Wrestling with the “devops” stuff here, I am getting a persistent feeling of being buried under a pile of tools. There are always multiple ways to do something, with just slightly different options where I'm never sure how they match.

This sounds like an accurate description of every devops process and environment I’ve ever seen. The solution to any problem is more automation. It’s always more automation, more tools to learn and maintain. I’d swear half the devops folks do it solely to have new toys to play with, but the amount of bitching about the new tools makes me unsure.

It is the pipenight dream of making the process resilient to operator error. And the problem of 14 competing standards. All the tools are lacking a bit here or there, each written to scratch someone's specific itch, so instead of doing one thing well, it does two things acceptably and a third barely so. So someone writes a new tool to patch up the hole for themselves and then there is one more tool that is lacking a bit somewhere else and the cycle starts anew.

Is there a way to get out of the maze of twisty little tools, almost, but not exactly, all alike, and not go either insane, raving mad or batshit crazy in the process?

Given that the last K8s deployment I touched had tools to build the files for Helm, to build the files… it really was tools all the way down.

The only advice I have is to try to apply the original Occam’s Razor: not multiplying your entities beyond necessity.

It's the problem with helm. As good as it is, it is not sufficient, but at the same time it's hard to do without it because of things like ingress that need to have the same value substituted twice and can't reference a config map or a secret (most other places where one needs to substitute can).

In my experience this means if you can write a small script or tool in place of introducing another actual tool, absolutely do that. The total surface of what needs to be managed and understood is smaller, even if some of it then has to be taught and documented.

… and then the script grows to a behemoth in a couple of years, unfortunately. But you can't use multiple tools without some sort of script anyway, so there is no escape from that circle of hell.

People spout that “we’re using the industry tools” but they all seem to be used in varied ways even for notionally the same task such that any familiarity is either non-helpful or straight up counterproductive when transferring somewhere else.

If in doubt KISS. Devops folks would do fucking well to remember that. And sometimes, it is worth hammering out the odd rough edge from your whatever to make the CI process easier - something the devops folks tend to eschew in favour of complexity in the pipeline later.

I've already introduced a standard build command on the one project utilizing Dockerfiles. But I am having a problem with the deployment because of the layers of configuration, and the inadequacy of helm.

-

@Bulb said in It's tools all the way down!:

So there is a way to deploy them from Visual Studio (that's what the developer has been doing so far

NO, just NO..... If you want consistency (and this has nothing to do with any specific set of tools, just an example), ALWAYS use an automation path. ALWAYS have a dedicated (set of) account(s) with permission to do the deploy. ALWAYS have the best reproducibility you can (it will never be 100%).

Didn't people learn from the fiascos with doing Web deployment from Visual Studio????

-

@Bulb said in It's tools all the way down!:

That works well for the applications themselves, and maven isn't even best in that domain as it does not have an easy way to integrate custom build steps. gradle, npm or even dotnet (the last thanks to the msbuild underneath) are easier when you need to generate some source at the beginning of the build or even just collect some version info to link in the app.

There's that many plugins that I've never needed a truly custom build step. The closest I've come is driving ant from maven, and that was just to work around the shortcomings of a different tool (which should have automatically made its target directory but didn't for some stupid reason).

-

@TheCPUWizard said in It's tools all the way down!:

If you want consistency (and this has nothing to do with any specific set of tools, just an example), ALWAYS use an automation path.

This.

One of the first things I did when I started on my current project team was get the automated building (and testing) working and persuade my coworkers that that was always the definitive build. It's so much easier when you have everything scripted. (I so wish I could deploy everything so easily. Fortunately, most of the stuff like the custom OS can be handled that way, and in fact we've done that for ages.)

-

@TheCPUWizard said in It's tools all the way down!:

@Bulb said in It's tools all the way down!:

So there is a way to deploy them from Visual Studio (that's what the developer has been doing so far

NO, just NO..... If you want consistency (and this has nothing to do with any specific set of tools, just an example), ALWAYS use an automation path. ALWAYS have a dedicated (set of) account(s) with permission to do the deploy. ALWAYS have the best reproducibility you can (it will never be 100%).

Didn't people learn from the fiascos with doing Web deployment from Visual Studio????

Well, they sort of did. Setting that automation up is why they added me to the project. So now I am at the phase where I am trying to understand the mess they have and design the automation to strike some balance between providing the reproducibility and keeping some semblance of continuity.

-

@dkf said in It's tools all the way down!:

@TheCPUWizard said in It's tools all the way down!:

If you want consistency (and this has nothing to do with any specific set of tools, just an example), ALWAYS use an automation path.

This.

One of the first things I did when I started on my current project team was get the automated building (and testing) working and persuade my coworkers that that was always the definitive build. It's so much easier when you have everything scripted. (I so wish I could deploy everything so easily. Fortunately, most of the stuff like the custom OS can be handled that way, and in fact we've done that for ages.)

I agree. I like to have things automated as well. Unfortunately it seems you and I are a rather rare sight: we have a couple more projects that need that kind of thing taken care of, but none of the available people want to do it.

And then there is the part that triggered this rant: that when designing the automation you have to pick from many apparently equivalent, but maybe slightly different, options and tools.

Like just now I'm looking at how to publish a functionapp to Azure. First, there are (excluding the manual ones) two options:

- build myself and push a zip package, or

- let the “kudu” service pull from git and build it itself.

and then there is the whole confusion between functionapp and webapp. One documentation page tells me that I can deploy with the `az` cli using the subcommand `az functionapp deployment source config-zip`, while I also ran across the apparently equivalent (but who knows) `az functionapp deploy`. But the PowerShell version is `Publish-AzWebapp` in the `Az.Websites` module, though there also is an `Az.Functions` module and, on the other side, an `az webapp` subcommand. So are they the same thing under two names or slightly different things that are sometimes interchangeable or what?

… as if Microsoft and clarity ever went together I guess.
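For the first option (build yourself and push a zip package), a minimal sketch of the packaging step follows, assuming the package is nothing more than the published output directory zipped with paths relative to that directory. That matches what the tooling appears to do, but it is an assumption, not documented behaviour; `zip_directory` and the paths are hypothetical names.

```python
import zipfile
from pathlib import Path


def zip_directory(source_dir: str, zip_path: str) -> list[str]:
    """Zip the contents of source_dir (not the directory itself).

    Returns the archive member names. Write zip_path outside source_dir,
    otherwise the archive could end up trying to include itself.
    """
    root = Path(source_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Store paths relative to the publish directory, with '/' separators.
                name = path.relative_to(root).as_posix()
                archive.write(path, name)
                names.append(name)
    return names


if __name__ == "__main__":
    # Hypothetical paths: the output of `dotnet publish -o publish`.
    print(zip_directory("publish", "package.zip"))
```

The resulting file would then be handed to whichever deployment command turns out to be the right one, for example as the `--src` argument of `az functionapp deployment source config-zip`.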

-

-

@error_bot said in It's tools all the way down!:

Is it AS_DESIGNED that nothing comes out of the repo?

-

@Bulb offtopic, but this is exactly my problem with commandline tools - without proper documentation they are useless.

GUI tools are self-documenting by default, at least to some minimal (usually pretty high) degree.

-

@Karla said in It's tools all the way down!:

Is it AS_DESIGNED that nothing comes out of the repo?

It doesn't support anything, so yes.

-

@sh_code said in It's tools all the way down!:

GUI tools are self-documenting by default

Maybe I've spent more time looking at things at the specialist end of that scale, where Show All The Things is often a dominant attitude (along with not really explaining any of it).

-

@sh_code said in It's tools all the way down!:

GUI tools are self-documenting by default, at least to some minimal (usually pretty high) degree.

The exact opposite. Unless perhaps you are talking about annotated video recordings. Additionally they can not be accurately reproduced at all. 100% automation, appropriate log files are documentation as to exactly what happened and when.

-

@sh_code said in It's tools all the way down!:

@Bulb offtopic, but this is exactly my problem with commandline tools - without proper documentation they are useless.

GUI tools are self-documenting by default, at least to some minimal (usually pretty high) degree.

Three unlabeled text boxes and a button titled "Do It?"

While it is no more than an hour of work to add a usage to a command-line tool.

-

@PleegWat said in It's tools all the way down!:

Three unlabeled text boxes and a button titled "Do It?"

While it is no more than an hour of work to add a usage to a command-line tool.

But you get feedback if you make an error in the GUI. Yes, the computer will go beep, assuming you have something capable of making noise configured.

-

@dkf If you provide invalid input to `ed` however, it will print `?`. Which makes the error obvious to any experienced user even if no speaker or bell is connected.

-

@PleegWat The bell is just as informative!

-

@dkf I have this for CLI, stream filter `dinger.sh` inserting BEL per parameterization...

-

Who's the bigger tool, the tool or the tool that follows him?

-

@TheCPUWizard said in It's tools all the way down!:

Didn't people learn from the fiascos with doing Web deployment from Visual Studio????

No they didn't and every new "tool" that appears today seems to want to push the "developers" to deploy using cli tools from their local machine for some reason.

-

@CodeJunkie said in It's tools all the way down!:

@TheCPUWizard said in It's tools all the way down!:

Didn't people learn from the fiascos with doing Web deployment from Visual Studio????

No they didn't and every new "tool" that appears today seems to want to push the "developers" to deploy using cli tools from their local machine for some reason.

If only it was cli tools!

See, the benefit of cli tools is that you can call them both locally to test things and from the build server to make a proper process out of it for production. So cli tools are fine.

But I am just setting up a build and deployment process for a project that was until now doing … right, you guessed it, web¹ deployments from Visual Studio, directly into production (it's a fairly typical specimen of a prototype pushed into service).

¹ Well, functionapps, but that's a slightly bastardized kind of webapp anyway.

-

@Bulb said in It's tools all the way down!:

web deployments from Visual Studio, directly into production

I've seen the same sort of thing being done with Eclipse, and it absolutely makes my teeth itch. What I want in my projects is for the only official, deployable builds to be the ones that come out of the CI pipeline. I've never quite got there (due to it being messy to move the build results to the deployment server, and CD is right out because of the complexity of parts of the deployment other than the code per se) but I'm pretty close; the production builds definitely follow exactly the build script from exactly the code that is checked into the version control system.

-

@Bulb @dkf -- Indeed... The one thing to do, if you can get the political clout, is to prevent ALL humans from having access. Only the automated system [aka pipeline] has permission to the various environments, and NO human has the passwords for those accounts.

Getting this set up can be quite a (human) challenge, but if one can succeed, I can assure you of major benefits :)

-

@Bulb said in It's tools all the way down!:

There is one way to make a deployable artefact for each component. Generally some standard way of calling `docker build` when all the components can run in containers. Or whatever is a standard build command for their language if they don't.

But then you've got Docker on you and a bunch of containers to wrangle.

-

@Bulb said in It's tools all the way down!:

… as if Microsoft and clarity ever went together I guess.

One thing I'll say for them: the MSDN API documentation for .NET is amazingly good, a model for how API documentation should be done.

-

@TheCPUWizard said in It's tools all the way down!:

if you can get the political clout

Political clout isn't a problem for me, but ensuring that the damn thing works is. There are a few special tricky cases because this is a project with special custom hardware involved; simply pushing stuff to a cloud instance doesn't work for either integration testing or deployment.

-

@PleegWat said in It's tools all the way down!:

@sh_code said in It's tools all the way down!:

@Bulb offtopic, but this is exactly my problem with commandline tools - without proper documentation they are useless.

GUI tools are self-documenting by default, at least to some minimal (usually pretty high) degree.

Three unlabeled text boxes and a button titled "Do It?"

While it is no more than an hour of work to add a usage to a command-line tool.

Yes, doing something right takes a bit more effort than doing it badly.

-

@dkf said in It's tools all the way down!:

What I want in my projects is for the only official, deployable builds to be the ones that come out of the CI pipeline.

Yes, that's what I want too. And usually have it. I will eventually get there in this project as well.

@Mason_Wheeler said in It's tools all the way down!:

But then you've got Docker on you and a bunch of containers to wrangle.

Containers are OK. If you avoid the Docker-against-Desktop instabreaker, the docker engine, podman, containerd and rancher-desktop all work reasonably well, and it does not leave many things for you to set (and mess up) during deployment.

Usually there is either a kubernetes/helm or a docker-compose manifest on top of them to tie them together, and if you fill in the exact hashes of the images (e.g. as emitted by kbld), you have a fully specified release package.
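A minimal sketch of what "filling in the exact hashes" amounts to, assuming the manifest is already loaded as nested dicts and lists and that the tag-to-digest mapping is supplied from elsewhere (for instance from the build output). This is not how kbld is implemented; `pin_images` and the sample data are hypothetical.

```python
def pin_images(node, digests: dict[str, str]):
    """Return a copy of a manifest with every known `image` value pinned to its digest."""
    if isinstance(node, dict):
        pinned = {}
        for key, value in node.items():
            if key == "image" and isinstance(value, str) and value in digests:
                # Strip the tag only if the last path segment has one, so a
                # registry port such as "registry:5000/app" is left alone.
                has_tag = ":" in value.rsplit("/", 1)[-1]
                repository = value.rsplit(":", 1)[0] if has_tag else value
                pinned[key] = f"{repository}@{digests[value]}"
            else:
                pinned[key] = pin_images(value, digests)
        return pinned
    if isinstance(node, list):
        return [pin_images(item, digests) for item in node]
    return node


if __name__ == "__main__":
    manifest = {"services": {"web": {"image": "registry.example.com:5000/web:1.2"}}}
    digests = {"registry.example.com:5000/web:1.2": "sha256:" + "a" * 64}
    print(pin_images(manifest, digests))
```

Images without a known digest are left untouched here; a stricter release script might prefer to fail on them instead.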

-

@Mason_Wheeler said in It's tools all the way down!:

@Bulb said in It's tools all the way down!:

… as if Microsoft and clarity ever went together I guess.

One thing I'll say for them: the MSDN API documentation for .NET is amazingly good, a model for how API documentation should be done.

MSDN documentation of Win32 API is pretty good indeed. The .NET one is a little bit worse, but still quite good. Documentation of the Azure things on the other hand is a mess.

-

@Mason_Wheeler said in It's tools all the way down!:

@PleegWat said in It's tools all the way down!:

@sh_code said in It's tools all the way down!:

@Bulb offtopic, but this is exactly my problem with commandline tools - without proper documentation they are useless.

GUI tools are self-documenting by default, at least to some minimal (usually pretty high) degree.

Three unlabeled text boxes and a button titled "Do It?"

While it is no more than an hour of work to add a usage to a command-line tool.

Yes, doing something right takes a bit more effort than doing it badly.

Command-line tool can be called from the CI/CD pipeline, a GUI one can't. Therefore there has to be a command-line tool. And there should be only one for consistency.

-

@dkf said in It's tools all the way down!:

There are a few special tricky cases because this is a project with special custom hardware involved; simply pushing stuff to a cloud instance doesn't work for either integration testing or deployment.

Never said anything about cloud or "simply pushing". I have done what I described for various tooling including IBE/IBD: Vacuum Control, Robot/Motion Control, Ion Beam Control, et al....

"Human" commits code into the first stage, and from there on everything (with approval gates) is 100% automated. No human (not even myself) has the passwords to the accounts to do this [we do have system level permissions to change passwords, but that would be immediately noticed as a password change done manually would break all automation].

-

@Bulb said in It's tools all the way down!:

Command-line tool can be called from the CI/CD pipeline, a GUI one can't.

Sure it can. If my choices are to either have a manual deployment process or rely on some user interface automation kludge, I'll take the second evil over the first any day.

-

@CodeJunkie said in It's tools all the way down!:

No they didn't and every new "tool" that appears today seems to want to push the "developers" to deploy using cli tools from their local machine for some reason.

It's a wise policy.

1579: Tech Loops - explain xkcd
