C stringsþÝ«ÌΉŠ‹ÿ

-

Definitely a mistake to let all those funny characters in.

Yeah, like ą. Thankfully @codinghorror didn't make this mistake.

-

...

'twas just a weak attempt at that badge. :-/

Alas:

@powerlord said:

Extended ASCII

I.e., an extension of ASCII? ;-)

-

AAAAASSSSSCCCCCIIIIIIIIII

(Also, my body's fine, I'm not an invalid, I have no idea what that means?)

-

If you don't have a schema (not necessarily an XML schema file, but an abstract concept of what data you accept), how can you even serialize it other than by building a stringly-typed element tree? And if you do have a schema, what's the problem with making it a static type?

Specifically when dealing with XML:

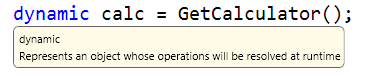

There are other usages that make sense. COM interop

Another set of usecases I've found myself using the dynamic type for:

There are times when it makes sense; as with any advanced language feature, use with care.

-

Anything you can do, I can do with strong types. Rust with its enums (unions in disguise) helps a lot with the prettiness of the resulting code, but isn't necessary.

...Okay, maybe I couldn't do COM. I don't know. Never used it, though I already hate it.

-

Stop being an unreasonable dick for two minutes. I gave you decent legit examples of when and why you would want to do that in C#.

I agree that you should take advantage of strong typing, but there are some places where dynamic types make sense ... like in the examples I gave you.

-

Turing completeness would seem to indicate that anything that can be done in a static language can be done in a dynamic language and vice versa...

-

Ok, I'll stop being a dick and say exactly why I don't see dynamic types as beneficial. In case of XML, this is just simple deserialization. At any given position, there are three possible cases - either data matches your expectations and you save it in appropriate strongly-typed structure, or it doesn't and you error out, or you don't care and save it as string. I don't comment on COM because I don't know. C# DLR is for FFI with dynamic languages. Anonymous types is a bad move IMO.

My main argument against dynamic types is, if you operate on some object, you must know what this object looks like - otherwise, how could you even think of saving this character field as a character? It might as well be a mutex! So, since you know something about your data, you can also write down your knowledge in the formalized way of static types, because why not?

Dynamic objects are hashmaps in disguise. Hashmaps have static type.
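To make the "three possible cases" argument concrete, here is a rough sketch in C (not C#) using a tagged union. Everything in it (`field_kind`, `struct field`, `parse_field`, the `expect_int` flag) is made up for illustration; it only shows that "matches, doesn't match, don't care" can be written down as a static type.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>

/* Hypothetical names throughout: one tag per case from the post above. */
enum field_kind { FIELD_INT, FIELD_RAW, FIELD_ERROR };

struct field {
    enum field_kind kind;
    union {
        long as_int;         /* valid when kind == FIELD_INT */
        const char *as_raw;  /* valid otherwise; borrowed pointer to the input */
    } value;
};

/* If the caller expects an integer, parse strictly; otherwise keep the text. */
struct field parse_field(const char *text, int expect_int)
{
    assert(text != NULL);

    struct field f;
    f.value.as_raw = text;

    if (!expect_int) {           /* "you don't care": store it as a string */
        f.kind = FIELD_RAW;
        return f;
    }

    char *end;
    errno = 0;
    long v = strtol(text, &end, 10);
    if (end == text || *end != '\0' || errno == ERANGE) {
        f.kind = FIELD_ERROR;    /* "it doesn't match": error out */
        return f;
    }

    f.kind = FIELD_INT;          /* "it matches": strongly typed value */
    f.value.as_int = v;
    return f;
}
```

The caller has to look at `kind` before touching `value`, which is the part Rust enums would enforce for you and C leaves to discipline.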

-

Turing completeness would seem to indicate that anything that can be done in a static language can be done in a dynamic language and vice versa...

Right, and a MOD 6502 can emulate every other computer that has been or ever will be.

-

strong typing takes care of everything being safe

No. If strong types were able to guarantee that no runtime failures would ensue (ignoring external faults due to hardware issues) then they would not be fully computable, and you'd be unable to actually decide whether some classes of programs are well-typed or not. It's all isomorphic to the Halting Problem.

Strong types can help a lot in raising the class of problems you need to think about. Providing you're not integrating too many independently-developed things (when your strong types force you to spend effort writing loads of mapping shims between all the different modules). Types are abstractions, and like all abstractions, they help you tackle more problems but introduce the — significant — problem of what to do when you've got too much abstraction…

-

a MOD 6502 can emulate every other computer that has been or ever will be.

We can wait while it does, right?

-

We can wait while it does, right?

You can. I'll be over here waiting on the Motorola 68000.

-

I'll be over here waiting on the Motorola 68000.

Here's a nickel, kid. Get yourself an '040.

-

Motorola 68000.

Just a moment while this Z80 here works on the travelling salesman problem...

-

Meanwhile, this 6809 here will prove itself more obscure than your machines.

-

Being a Knight of the Static Typing Brigade, I am quite familiar with all that. The point was that type inference has a different purpose entirely. Enjoy your

.

.

-

@created_just_to_disl said:

Meanwhile, this 6809 here will prove itself more obscure than your machines.

Anyone who knew what those other processors were will know about that one, too.

-

This. The C char type is very poorly named. You probably wouldn't expect to use it to hold character data in any code written since 1999.

I hazard this depends on environment (OS?). I believe windows uses utf-16, so probably wchar. However I am in a linux environment, where everything uses utf-8 in char. And actually almost all occurrences of char in our codebase represent actual character data.

-

No. If strong types were able to guarantee that no runtime failures would ensue (ignoring external faults due to hardware issues) then they would not be fully computable, and you'd be unable to actually decide whether some classes of programs are well-typed or not. It's all isomorphic to the Halting Problem.

Isn't it more like, you can't prove that every safe program is safe, but you can prove that some specific subclass of programs is safe? Like, strong typing systems guarantee no runtime failure by banning any code that doesn't look to be safe, as opposed to banning code that isn't safe? Halting problem is about general solution - you can totally prove that all programs that don't use loops or gotos or recursion or anything that will redo previous instruction in any way, always halt.

I hazard this depends on environment (OS?). I believe windows uses utf-16, so probably wchar. However I am in a linux environment, where everything uses utf-8 in char. And actually almost all occurrences of char in our codebase represent actual character data.

But a UTF-8 character can span several chars, so no, you can't store a single character in a single char even on Linux.

Bonus WTF: wchar_t size is implementation-defined, and it's actually different on Windows (2 bytes) and Linux (4 bytes) - that's why we have char16_t and char32_t now.

-

for extra fun, char is distinct from both signed char and unsigned char, making three distinct char types. Ho ho ho indeed.

In any given implementation it's only distinct from one of them. There are really only two char types with distinct behaviors (signed char and unsigned char); however, which of those you get when you specify plain unadorned char depends on your compiler.

-

So, as I understand it:

char is for (text) characters. This type is more useful as an array or char * to represent text.

Before multibyte characters were a thing, this was true. It really isn't any more. If you're programming in C, you're best advised to ignore the fact that "char" is a prefix of "character", and instead think of the char types as the smallest thing the compiler will let you create a pointer to.

-

Hahaha. char is a byte.

On machines without byte addressing, this is not necessarily so. POSIX says that char is 8 bits, but POSIX is not the C standards committee.
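A small sketch of what the C standard itself promises, as opposed to POSIX: `CHAR_BIT` from `<limits.h>` is at least 8, and `sizeof(char)` is 1 by definition. The helper names `bits_in_chars` and `plain_char_is_signed` are invented for this example.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

/* ISO C only requires a char to have at least 8 bits (C11 static_assert). */
static_assert(CHAR_BIT >= 8, "ISO C requires CHAR_BIT >= 8");

/* Bits occupied by n chars; sizeof counts in chars, not in 8-bit octets. */
size_t bits_in_chars(size_t n)
{
    return n * CHAR_BIT;
}

/* Whether plain char behaves like signed char on this implementation. */
int plain_char_is_signed(void)
{
    return CHAR_MIN < 0;
}
```

On a POSIX system `bits_in_chars(1)` is 8; on a word-addressed DSP it may well be 16 or 32, and `sizeof(char)` is still 1.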

-

Since ASCII characters use code points 0-255, you'd expect the data type intended to store that to also be 0-255.

Nuh uh. ASCII is a 7 bit standard.

-

EBCDIC was also around when C was created and has always used code points past 127

I would be surprised to encounter a C compiler that both reads EBCDIC source code and makes char a signed type.

-

@OffByOne said:

char is for (text) characters. This type is more useful as an array or char * to represent text.

Before multibyte characters were a thing, this was true. It really isn't any more.

What do you suggest then? Keep in mind that multibyte != wide. More specifically: encodings like UTF-8 have variable-length characters.

It seems to me that those are best stored as char arrays (where "char" should be pronounced "byte").

If you're programming in C, you're best advised to ignore the fact that "char" is a prefix of "character", and instead think of the char types as the smallest thing the compiler will let you create a pointer to.

Or you could eliminate all confusion with #include <inttypes.h> and use uint8_t for your byte-sized variables.

-

It seems to me that those are best stored as char arrays (where "char" should be pronounced "byte").

As long as you keep in mind that a character can occupy several spaces in string, you should be fine. But so many people forget that...

-

As long as you keep in mind that a character can occupy several spaces in string, you should be fine. But so many people forget that...

Sure, you need to use string functions that are UTF-8 aware or you'd get into all kinds of trouble.

-

Sure, you need to use string functions that are UTF-8 aware or you'd get into all kinds of trouble.

Only if you actually need to extract individual characters. strcpy() will work for any encoding where single zeroed byte means end of string.

-

Only if you actually need to extract individual characters. strcpy() will work for any encoding where single zeroed byte means end of string.

I was thinking more about functions like strlen(). With multibyte encodings, that's not just "count the number of bytes until \0" anymore. A \0 may be even a valid part of the encoding of a character.

-

Anyone who knew what those other processors were will know about that one, too.

But how many of them have written assembly code for an RCA 1802? Now there is an architecture worth waiting for!

-

NAME
    strlen - calculate the length of a string

SYNOPSIS
    #include <string.h>

    size_t strlen(const char *s);

DESCRIPTION
    The strlen() function calculates the length of the string s, excluding the terminating null byte ('\0').

RETURN VALUE
    The strlen() function returns the number of bytes in the string s.

Everything works according to spec. Again, it's programmer's fault to assume the length of string is number of characters in it.
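For what it's worth, the difference between "bytes" and "characters" is easy to show. The sketch below counts Unicode code points in a UTF-8 string by skipping continuation bytes (the ones that look like 10xxxxxx). `utf8_codepoints` is a made-up name, and it assumes the input is valid UTF-8; it does no validation.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Count code points in a NUL-terminated string, assuming valid UTF-8.
   Every code point has exactly one byte that is NOT of the form 10xxxxxx,
   so counting those bytes counts code points. */
size_t utf8_codepoints(const char *s)
{
    assert(s != NULL);

    size_t n = 0;
    for (; *s != '\0'; ++s) {
        /* Cast first: plain char may be signed. */
        if (((unsigned char)*s & 0xC0) != 0x80)
            ++n;
    }
    return n;
}
```

With the string "na\xC3\xAFve" (that is, "naïve" spelled out as UTF-8 bytes), strlen() returns 6 while utf8_codepoints() returns 5. Even that is only code points; combining marks mean it still isn't "characters" in the sense a user would expect.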

-

In any given implementation it's only distinct from one of them. There are really only two char types with distinct behaviors (signed char and unsigned char); however, which of those you get when you specify plain unadorned char depends on your compiler.

No, this is not true. There are several posts discussing this already, but char is a type distinct from both signed char and unsigned char (although it behaves like one of them).

E.g.

    char* a = 0;
    signed char* b1 = a;
    unsigned char* b2 = a;

produces the following two warnings:

    warning: pointer targets in initialization differ in signedness [-Wpointer-sign]
     signed char* b1 = a;
                       ^
    warning: pointer targets in initialization differ in signedness [-Wpointer-sign]
     unsigned char* b2 = a;
                         ^
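Another way to see the same thing, assuming a C11 compiler: `_Generic` refuses to list two compatible types in one selection, so the macro below only compiles because all three char types are distinct. `CHAR_KIND` and the `KIND_*` constants are names invented for this sketch.

```c
#include <assert.h>

enum char_kind { KIND_PLAIN_CHAR, KIND_SIGNED_CHAR, KIND_UNSIGNED_CHAR, KIND_OTHER };

/* Same size, by definition, but still three separate types. */
static_assert(sizeof(char) == 1 && sizeof(signed char) == 1 &&
              sizeof(unsigned char) == 1, "all char types have size 1");

/* Would be a compile error if char were the same type as either of the
   other two, because _Generic forbids duplicate compatible types. */
#define CHAR_KIND(x) _Generic((x),            \
    char:          KIND_PLAIN_CHAR,           \
    signed char:   KIND_SIGNED_CHAR,          \
    unsigned char: KIND_UNSIGNED_CHAR,        \
    default:       KIND_OTHER)
```

Note that a character constant such as 'x' has type int in C, so `CHAR_KIND('x')` picks the default branch.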

-

However I am in a linux environment, where everything uses utf-8 in char.

And how many bytes is a UTF-8 character? (Hint: not one. Another hint: not a static number at all. A third hint: your C code is broken and wrong and you're an idiot.)

-

What do you suggest then? Keep in mind that multibyte != wide. More specifically: encodings like UTF-8 have variable-length characters.

Depends, as ever, on a bunch of tradeoffs.

Do you want to work with full Unicode, and don't care much about space efficiency, and want to be able to index your strings efficiently? Then use a 32-bit integer type for characters.

Content to restrict yourself to the BMP but still care about making indexing quick and easy? Then use a 16-bit integer type for characters.

Most of your character data falls inside the BMP and you're willing to live with clumsy indexing? Use a 16-bit integer type for characters and encode them in UTF-16 (this is the tradeoff chosen by Windows and Java).

Most of your character data will be in English and space efficiency matters and you don't mind if indexing will often be clumsy and slow? Use an 8-bit integer type and encode characters in UTF-8.

Want interoperability with legacy systems using JIS? 16-bit integer types for you.

And so on. Treating C string literals (i.e. null-terminated arrays of char) as UTF-8-encoded character data is usually reasonable, as long as you remember that strlen() tells you how many non-null chars are in a literal rather than how many characters are encoded in it. Some people insist on interpreting string literals as ISO 8859-1 or CP-437 but we don't talk about folks like that in polite company.
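To put numbers on the space tradeoff, here is a minimal UTF-8 encoder sketch. `utf8_encode` is a hypothetical helper, not a standard library function: it writes one code point into a 4-byte buffer and returns how many bytes it used (0 for surrogates and values above U+10FFFF).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode one code point as UTF-8. Returns the byte count (1..4),
   or 0 if cp is a surrogate or beyond U+10FFFF. */
size_t utf8_encode(uint32_t cp, unsigned char out[4])
{
    assert(out != NULL);

    if (cp < 0x80) {                       /* ASCII: 1 byte */
        out[0] = (unsigned char)cp;
        return 1;
    }
    if (cp < 0x800) {                      /* 2 bytes */
        out[0] = (unsigned char)(0xC0 | (cp >> 6));
        out[1] = (unsigned char)(0x80 | (cp & 0x3F));
        return 2;
    }
    if (cp < 0x10000) {                    /* rest of the BMP: 3 bytes */
        if (cp >= 0xD800 && cp <= 0xDFFF)
            return 0;                      /* surrogates are not characters */
        out[0] = (unsigned char)(0xE0 | (cp >> 12));
        out[1] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[2] = (unsigned char)(0x80 | (cp & 0x3F));
        return 3;
    }
    if (cp <= 0x10FFFF) {                  /* outside the BMP: 4 bytes */
        out[0] = (unsigned char)(0xF0 | (cp >> 18));
        out[1] = (unsigned char)(0x80 | ((cp >> 12) & 0x3F));
        out[2] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[3] = (unsigned char)(0x80 | (cp & 0x3F));
        return 4;
    }
    return 0;
}
```

English text stays at one byte per character, most European scripts take two, most CJK text takes three (versus two in UTF-16), which is roughly the tradeoff described above.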

-

Most of your character data falls inside the BMP and you're willing to live with clumsy indexing? Use a 16-bit integer type for characters and encode them in UTF-16 (this is the tradeoff chosen by Windows and Java).

Note the historical reasons here. At the time these systems were developed, UTF-8 did not exist and hadn't even been thought-up. If either were being built today, they undoubtedly would have picked UTF-8 from the start.

Linux is UTF-8 all over because they were user-hating assholes who didn't even bother to think about supporting non-ANSI languages until long after everybody else.

-

You did a nice job of making UTF-8 (or perhaps the decision to use it) a good idea in Windows but a bad one in Linux.

-

Your post is gibberish.

-

Your train of thought is gibberish, Blakey. Unless you meant "Linux chose UTF-8 because it's the easiest way to upgrade from ASCII". But obviously you didn't since you haven't written that, and listening to my shoulder aliens instead of you is bad and I should feel bad.

-

Unless you meant "Linux chose UTF-8 because it's the easiest way to upgrade from ASCII".

That's not what I mean, and that's not what I typed.

Linux chose UTF-8 because they procrastinated so long on making their shit useful to anybody outside Europe and the Americas that, by the time they finally decided to stop fucking Japanese speakers over, UTF-8 had been invented and was available for them to use.

Other products didn't have the option of using UTF-8 because at the time they were deciding not to fuck Japanese speakers over, UTF-8 hadn't yet been invented.

-

But wait. I just checked the internet and it says UTF-8 was developed in 1992. That would mean it was available both for Windows and Linux right from the start. So the nonexistence argument makes no sense.

-

Windows 3 came out in 1990, numbnuts.

That does raise questions about WTF the developers of Java were thinking, though.

-

Linux chose UTF-8 because they procrastinated so long on making their shit useful to anybody outside Europe and the Americas that, by the time they finally decided to stop fucking Japanese speakers over, UTF-8 had been invented and was available for them to use.

WTF? You're missing a piece of history here, namely that it was the *nix world (with some help from Plan9) that invented UTF-8 to begin with...(Prosser/Thompson/Pike, to be precise).

Besides, the decision to go with a 16-bit wide-character API is now turning around and biting Windows and Java programmers in the rump because almost nobody gets surrogate pairs right.
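For anyone who hasn't run into surrogate pairs: a rough sketch of how a code point outside the BMP becomes two 16-bit units in UTF-16. `utf16_encode` is an illustrative name, not an API from Windows or Java.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode one code point as UTF-16. Returns the number of 16-bit units
   written (1 or 2), or 0 if cp is itself a surrogate or above U+10FFFF. */
size_t utf16_encode(uint32_t cp, uint16_t out[2])
{
    assert(out != NULL);

    if (cp >= 0xD800 && cp <= 0xDFFF)
        return 0;                          /* reserved for surrogates */
    if (cp < 0x10000) {                    /* BMP: one unit, as in UCS-2 */
        out[0] = (uint16_t)cp;
        return 1;
    }
    if (cp <= 0x10FFFF) {
        cp -= 0x10000;                     /* 20 bits remain */
        out[0] = (uint16_t)(0xD800 | (cp >> 10));    /* high surrogate */
        out[1] = (uint16_t)(0xDC00 | (cp & 0x3FF));  /* low surrogate */
        return 2;
    }
    return 0;
}
```

U+1F600 comes out as the pair 0xD83D 0xDE00, so any code that treats "one 16-bit unit" as "one character" will split it in half when indexing, truncating, or reversing a string.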

-

WTF? You're missing a piece of history here, namely that it was the *nix world (with some help from Plan9) that invented UTF-8 to begin with...(Prosser/Thompson/Pike, to be precise).

Who invented it is irrelevant to my point.

-

Windows 3 came out in 1990, numbnuts.

And that's totally irrelevant because Windows 3 didn't have Unicode at all, numbnuts. I could say that WinNT was released in '93, after UTF-8, but it would be a half-lie because development started in '89 and most probably they'd already settled on UCS-2 back then.

Who invented it is irrelevant to my point.

Except you're trying to prove that Linux went Unicode because they couldn't care less. If they really didn't, they wouldn't have come up with UTF-8 in the first place!

-

@tarunik, stop liking my every post. That's kinda creepy.

-

stop liking my every post. That's kinda creepy.

Stop making good points and start spewing shoulder-alien gibberish, then! </sarcasm>

-

Okay, I'll try. TRWTF is non-Latin alphabets?