How can we increase adoption of C/C++ alternatives?

-

Just assume x=100 and you're pretty much set.

You still make it sound like such a high barrier

-

I would like to think I am in the top x% of PHP programmers (not that that seems a very high bar) but even I'd be wary of working on system libraries in any language.

I hacked together a driver once to compile on newer kernels... Still only on my drive until I can get some proper testing done though, I don't want to be a cause of kernel panics if I can help it.

And yes, I understand what I did to a point. But if I said there weren't a few lines of code that are in the "maybe if I do this..." category, I'd be lying.

-

If you put it out there with the caveats of 'it works for me, YMMV' I don't see a problem with that. It's only a problem when it's put out as being 'stable' and isn't.

Like a certain forum software I know.

-

This is a good thing as it means they won't be doing any system programming, and any damage they do will be confined to whatever applications they are working on, as opposed to libraries, which get used everywhere.

Alternatively… This is a terrible thing, as it means they will be exposing their shitty code to the most hostile environment you can possibly imagine, instead of it lying about in a library nobody will ever use. Their incompetence will result in one or more websites being hacked, companies going under, and eventually, a job developing Discourse.

-

Too bad a lot of educational institutions - at least where I live - make the mistake of teaching C++ without mentioning anything about the pitfalls.

It's a huge WTF in my eyes. They should either teach a language like C++ thoroughly or use something less dangerous and inconsistent like Python instead.

Everyone should learn Python.

-

See? Both pointers and non-pointers can be allocated on either the stack or the heap. The compiler handles all that for you. It's not something you need to micro-manage.

I would be interested to see how well it performs in a larger application (say, an MMORPG server for a game with lots of rigid bodies), and I doubt it would always make the correct choice. In spite of all the information available to them, RDBMS query optimizers still pick terrible plans sometimes, and DBAs have to get around them with a table hint or other voodoo. While compiler optimizations are a Good Thing, I doubt they will be truly optimal until at least the Halting Problem is solved, and probably not until the Read My Mind Problem is solved. At that point, you and I will be obsolete.

Micromanagement of pointers is the lifeblood of C++ developers. Without that, they would shrivel up and die.

Mmm, delicious pointers. Just as long as they're not raw.

But your class needs a destructor unless you're OK with default semantics, which is actually fairly close to the weight of a using block.

I am fine with implementing a destructor (where one is needed) as the responsibility of cleaning up after the class should rest on the designer of the class. With IDisposable, the responsibility of cleaning up after the class rests on the user.

Unions aren't supported

I didn't know Go was a right-to-work state!

Actually, I suppose that, given the abundance of existing c(++) code, there will always be a need for good programmers; all we really need is to prevent any further adoption. And really, reducing the amount of noob C and C++ coders is good for everybody. There's just too much undefined behaviour for them to stumble into.

Agreed. That would make people who can tolerate the language(s) rarer, and possibly mean higher salaries for them. Of course, then you get the idiots who have dollar signs in their eyes, but that's a problem throughout the industry.

Here we have experienced Linux kernel devs arguing in favor of dereferencing a null pointer (“that's what we've always done”) more than three years after an identical bug introduced a vulnerability into SELinux.

Lovely appeal to tradition on their part. But after OpenSSL, these sorts of things are not really surprising, sadly.
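For anyone who hasn't seen the pattern being argued about, here is a minimal sketch of it. The `Device` struct and both function names are made up for illustration; this is not the actual kernel code. Because dereferencing a null pointer is undefined behaviour, a compiler is allowed to assume `dev` is non-null after the first line of `flags_unchecked` and may drop the check that follows it.

```cpp
// Hypothetical illustration; Device and these functions are invented
// for this post and are not taken from the Linux kernel.
struct Device {
    int flags;
};

// Buggy ordering: dev is dereferenced before it is checked. If dev is null
// this is undefined behaviour, and an optimizer may delete the check below
// on the reasoning that dev was already used and so cannot be null.
int flags_unchecked(const Device* dev) {
    int flags = dev->flags;
    if (dev == nullptr) {
        return -1;
    }
    return flags;
}

// Safe ordering: check first, dereference second.
int flags_checked(const Device* dev) {
    if (dev == nullptr) {
        return -1;
    }
    return dev->flags;
}
```

Both functions agree for valid pointers; only `flags_checked` has defined behaviour when handed `nullptr`.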

Too bad a lot of educational institutions - at least where I live - make the mistake of teaching C++ without mentioning anything about the pitfalls.

It's a huge WTF in my eyes. They should either teach a language like C++ thoroughly or use something less dangerous and inconsistent like Python instead.

They still taught C++ when I entered university, and they started to move to Java after I left. As much as I like C++, it's a terrible first language.

The absolute bottom-level intro course was in C. Yes, that's right, C was the first language they would teach to newcomers. However, the department was more interested in making students change their major than actually teaching them, but that's another story.

-

While compiler optimizations are a Good Thing, I doubt they will be truly optimal until at least the Halting Problem is solved

-

I am fine with implementing a destructor (where one is needed) as the responsibility of cleaning up after the class should rest on the designer of the class. With IDisposable, the responsibility of cleaning up after the class rests on the user.

It's really not as big a deal as you make out, and the flip-side about being very exacting about destruction is the “deallocate the world, bit-by-bit, on exit” behaviour. If the program is about to terminate, the enormous majority of objects in it can be just dropped on the floor; only those that correspond to external resources may require action (and then not always, as the OS can do a lot for you too).
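To make the "designer cleans up" side of this concrete, here is a rough C++ sketch. `Handle` and `peak_while_in_use` are hypothetical names, and the `open_count` integer only stands in for a real external resource such as a file descriptor or socket. The point is that the caller never writes a cleanup call, even on the early-return path.

```cpp
// Hypothetical resource wrapper: open_count stands in for something
// external (file descriptors, sockets) that must be released exactly once.
class Handle {
public:
    explicit Handle(int& open_count) : open_count_(open_count) { ++open_count_; }
    ~Handle() { --open_count_; }  // cleanup is written once, by the class designer

    // Copying would release the resource twice, so forbid it.
    Handle(const Handle&) = delete;
    Handle& operator=(const Handle&) = delete;

private:
    int& open_count_;
};

// The user of Handle never calls a cleanup function; leaving the scope
// is enough, on both the early-return and the normal path.
int peak_while_in_use(int& open_count, bool bail_out_early) {
    Handle h(open_count);
    if (bail_out_early) {
        return -1;          // h is still released here
    }
    return open_count;      // value is copied before h is destroyed
}
```

With a counter starting at 0, `peak_while_in_use` reports 1 while the handle is alive and leaves the counter back at 0 afterwards, whichever path is taken. It says nothing about the exit-time cost raised above, which is a separate trade-off.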

-

Everyone should learn Python.

In their anger management class, when it turns out the code you meticulously copied and pasted from various sources floods your compiler output, because you used spaces and tabs together, and that apparently would bring out Cthulhu if it were allowed.

Agreed. That would make people who can tolerate the language(s) rarer, and possibly mean higher salaries for them.

Stop polluting my job market with noob programmers.

As much as I like C++, it's a terrible first language.

It's okay for the basic "square a number and put it on screen" stuff. You can write in it procedurally (unlike in Java or C#, where you'll inevitably have to introduce some OO cargo-cultism at the beginning), and you avoid the abstract printf syntax.

It's a horrible first OO language, that's for sure.

Yes, that's right, C was the first language they would teach to newcomers.

What's so wrong with that (aside from aforementioned printf syntax)? It's one of the simplest languages ever.

-

[C++ is] a horrible first OO language

It's not an OO language.

@Maciejasjmj said:

[C is] one of the simplest languages ever.

By that measure, everyone should start by learning Lisp. Which, frankly, wouldn't be a bad thing.

-

In their anger management class, when it turns out the [Python] code you meticulously copied and pasted from various sources floods your compiler output, because you used spaces and tabs together, and that apparently would bring out Cthulhu if it were allowed.

Set your editor's tab width to 1 and freely intermix. Watch as everyone else rages when editing what you've written.

-

Set your editor's tab width to 1 and freely intermix. Watch as everyone else rages when editing what you've written.

Evil ideas thread is either that way, or, or if you want to try and use the search, or if Discourse decides to suggest it.

-

Evil ideas thread is either that way, or, or if you want to try and use the search, or if Discourse decides to suggest it.

I thought Discourse would have suggested or similar personally.

-

I thought Discourse would have suggested or similar personally.

No, it's as evil as using and is considered Doing it Wrong™

And don't even think of suggesting either.

-

I thought Discourse would have suggested or similar personally.

http://what.thedailywtf.com/t/poll-do-you-have-discourse-syndrome/1158/150?u=pjh

-

By that measure, everyone should start by learning Lisp. Which, frankly, wouldn't be a bad thing.

The first languages I was taught in college were Lisp, followed by Scheme. I think it was a good choice. Learning how to solve the Towers of Hanoi recursively was mind-blowing.
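For anyone who missed that particular mind-blow, here is a minimal sketch of the recursive solution, written in C++ rather than Lisp to match the rest of the thread. The `hanoi` signature and the "A->C" move format are choices made for this example only.

```cpp
#include <string>
#include <vector>

// Appends the moves needed to shift `disks` disks from peg `from` to peg `to`,
// using `via` as the spare peg.
void hanoi(int disks, char from, char to, char via,
           std::vector<std::string>& moves) {
    if (disks == 0) {
        return;  // nothing to move
    }
    // 1. Park the top disks-1 disks on the spare peg.
    hanoi(disks - 1, from, via, to, moves);
    // 2. Move the largest disk straight to its destination.
    moves.push_back(std::string(1, from) + "->" + std::string(1, to));
    // 3. Bring the parked disks over on top of it.
    hanoi(disks - 1, via, to, from, moves);
}
```

Calling `hanoi(3, 'A', 'C', 'B', moves)` fills `moves` with 7 entries, which matches the general count of 2^n - 1 moves for n disks.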

-

In their anger management class, when it turns out the code you meticulously copied and pasted from various sources floods your compiler output, because you used spaces and tabs together, and that apparently would bring out Cthulhu if it were allowed.

So you're saying that it's your fault?

-

So you're saying that it's your fault?

Channeling Jeff again?

Filed under: Doing It Wrong since 1993

-

Channeling Jeff again?

Filed under: Doing It Wrong since 1993

Surely Jeff is not that young?

-

Surely Jeff didn't start being a crazy person until after I was conceived?

Disclaimer: I was not conceived by Jeff.

-

It's really not as big a deal as you make out, and the flip-side about being very exacting about destruction is the “deallocate the world, bit-by-bit, on exit” behaviour. If the program is about to terminate, the enormous majority of objects in it can be just dropped on the floor; only those that correspond to external resources may require action (and then not always, as the OS can do a lot for you too).

Fair point. I'm not terribly worried about shutdown performance in my project, though. It does take a few seconds to exit, but that's likely due to waiting on the various subsystems to join their worker threads*.

What's so wrong with that (aside from aforementioned printf syntax)? It's one of the simplest languages ever.

It is simple if the scope of the projects is printf/scanf, file I/O and basic control structures. Ask someone who just started programming to implement a linked list or red-black tree in C and watch how far they get. Having new, delete, constructors, and destructors significantly reduces the chance of error.
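A rough sketch of what that buys you: a tiny singly linked list where the constructor and destructor carry the bookkeeping a C beginner would have to remember by hand. `IntList` is a made-up teaching class, not a replacement for `std::forward_list`.

```cpp
#include <cstddef>

// Minimal singly linked list of ints, for illustration only.
class IntList {
public:
    // The constructor guarantees the list starts in a valid empty state.
    IntList() : head_(nullptr), size_(0) {}

    // The destructor frees every node, so users cannot forget to.
    ~IntList() {
        while (head_ != nullptr) {
            Node* next = head_->next;
            delete head_;
            head_ = next;
        }
    }

    // Copying would lead to double deletion, so it is disabled here.
    IntList(const IntList&) = delete;
    IntList& operator=(const IntList&) = delete;

    void push_front(int value) {
        head_ = new Node{value, head_};
        ++size_;
    }

    int front() const { return head_->value; }  // precondition: !empty()
    bool empty() const { return head_ == nullptr; }
    std::size_t size() const { return size_; }

private:
    struct Node {
        int value;
        Node* next;
    };

    Node* head_;
    std::size_t size_;
};
```

The equivalent C version needs an explicit init function, an explicit free function, and a caller who remembers to call both, which is where beginners tend to come unstuck.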

Surely Jeff didn't start being a crazy person until after I was conceived?

Any brave volunteers want to scan the codinghorror blog archives for the Critical Turning Point?

*Having day/night cycles means you can't use pre-baked shadows, but the rendering engine I use can periodically recompute the lightmaps asynchronously, which is kind of cool.

-

Any brave volunteers want to scan the codinghorror blog archives for the Critical Turning Point?

We're brave but we're not stupid enough to risk getting tainted by that particular level of crazy.

-

We're brave but we're not stupid enough to risk getting tainted by that particular level of crazy.

It shouldn't be that hard. They say that "those that can, do; those that can't, teach." JA is a good example of this. His writing was pretty solid, but his execution was lacking. So if you chart when he initially dropped off when it came to writing, you can find that point. He used to do it. Constantly. He even wrote an article about writing a lot. Then it slowed down to a trickle.

-

Having day/night cycles means you can't use pre-baked shadows

That's a lie!

You can have pre-baked radiosity for indoor parts and use a single cascaded shadow map for outdoor lighting.

-

Interesting. I'll have to read up more on the details when I have time. Seems like a decent compromise.

-

It shouldn't be that hard. They say that "those that can, do; those that can't, teach." JA is a good example of this. His writing was pretty solid, but his execution was lacking. So if you chart when he initially dropped off when it came to writing, you can find that point. He used to do it. Constantly. He even wrote an article about writing a lot. Then it slowed down to a trickle.

Yes but that still means actually looking at his blog unless you insulate yourself from it by writing scraping tools.

-

You can have pre-baked radiosity for indoor parts

... unless your buildings have windows.

-

I don't see a reason you need to only use one light source at a time...

-

I would have to get unsafeCoerce taken out of Haskell frist.

Is unsafeCoerce Haskell for reinterpret_cast?
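For context on the C++ half of that comparison: `reinterpret_cast` tells the compiler to treat the same bytes as a different type, with no conversion performed. A minimal sketch follows; `float_bits` and `first_byte` are made-up helper names, and the code assumes a 32-bit IEEE 754 `float`. How closely this maps onto `unsafeCoerce` is left as the open question it is above.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(sizeof(float) == sizeof(std::uint32_t), "assumes 32-bit float");
static_assert(std::numeric_limits<float>::is_iec559, "assumes IEEE 754 float");

// Well-defined way to look at a float's bit pattern: copy the bytes.
std::uint32_t float_bits(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    return bits;
}

// Casting to unsigned char* is one of the few permitted uses of
// reinterpret_cast: any object may be inspected as raw bytes.
unsigned char first_byte(const float& f) {
    return *reinterpret_cast<const unsigned char*>(&f);
}
```

Under those assumptions `float_bits(1.0f)` is `0x3F800000`. Casting a `float*` to `std::uint32_t*` and reading through it would compile too, but it breaks the strict aliasing rules, which is why the sketch uses `std::memcpy` for that case.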

-

You do realize this thread is over 11 billion milliseconds old, right?

-

I do now :|

I guess I'm off to google "unsafeCoerce" (with safesearch turned on..)

-

Discourse should have helpfully notified you. ;)

-

I was using my phone... :/

-

And I was being sarcastic. Carry on. <also, I have been drinking>

-

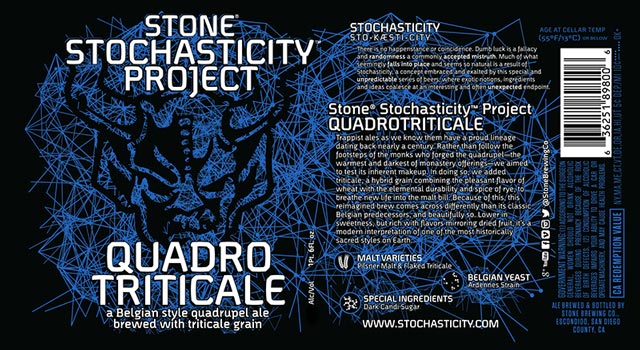

And I am being stochastic. Carry on.

Filed under: an opportunity to use a word I didn't have a chance to use in a real conversation.

-

stochastic

Thanks for the new word, that I also will probably never have a chance to use in conversation.

-

11 billion milliseconds

Sure, that sounds like a long time, but compared to the lifespan of, say, HD 140283, it's a mere blink of an eye...

-

@Intercourse said:

Thanks for the new word, that I also will probably never have a chance to use in conversation.

I had the opportunity to use that word often in college, at least for one term; I took a class in Stochastic Processes.

-

I had the opportunity to use that word often in college, at least for one term; I took a class in Stochastic Processes.

Oh boy, I remember that one. Well, technically it only included stochastic processes, but still.

150 people, 5 passing grades on the first term. Yours truly not included - I think it took me four tries to finally get over this one.

-

I got through it on the first try, but don't ask me to remember anything from it now — very heavy on math that I haven't used in 20+ years.

-

I had the opportunity to use that word often in college, at least for one term; I took a class in Stochastic Processes.

I remember using the word stochastic to a customer. They had been pressing me on technical details of an algorithm or something so eventually I talked to them like someone who knew what they were doing. I don't recall getting similar levels of questioning since.

-

They had been pressing me on technical details of an algorithm or something so eventually I talked to them like someone who knew what they were doing.

Such responses to technical inquiry are usually followed by a dumb look from the client, a hurried nod and "Uh-huh, sounds good." Then you are never bothered that much again...usually.

-

@Intercourse said:

Then you are never bothered that much again...usually.

Oh, I'm bothered plenty. They just trust me on the low level stuff now.

-

And I am being stochastic. Carry on.

Filed under: an opportunity to use a word I didn't have a chance to use in a real conversation.

-

Really? I talk about stochastic processes all the time. Especially the Wiener process.

-

stack semantics and deterministic destruction start to become a lot more appealing than every object being a heap allocation to be disposed of by some garbage collector that's "smarter than you."

Actually, as Martin Schoeberl has proven over and over again for the past 15 years, the JVM architecture is infinitely better for embedded systems design than the plain von Neumann or Harvard architectures we've been using, for one gigantic reason: time-predictable caching.

JOP features an abundance of small, highly specialized caches: a cache for constants, a WCET-analyzable object cache, a method cache (it is easy to determine exactly how much of the call graph will be able to fit), a stack cache (less of a cache, really, than a fixed-size stack in on-chip memory), an array cache (ok, IDK if this actually exists, but if not that's only because not enough people have been throwing money and undergrads at the project), as well as all of the static analysis tools needed to ensure that those caches affect not just average case, but worst case run times. Also it has a real-time garbage collector, but that's just gravy.

Honestly, the sooner we can move away from the free-form C way of doing things, where any part of your program could bump something else out of cache at any time, to something with well-defined memory access semantics, the better.

-

Honestly, the sooner we can move away from the free-form C way of doing things, where any part of your program could bump something else out of cache at any time, to something with well-defined memory access semantics, the better.

Why do you have a cache in an embedded system in the first place?

Is it because you have some sort of irrational fetish for throwing freaking applications processors at every. last. thing. that. you. see?!

It does not take a 500MHz ARM Cortex-A just to run a thermostat, for crying out loud!!!! (That's for you, Nest...)

Hint: raw speed doesn't matter nearly as much in embedded dev as you think it does -- it's all about time predictability, and even if you can guarantee that your caches are helping worst-case run times, that says nothing about the amount of timing jitter they are introducing...

Filed under: bit bangers beware!