I, ChatGPT

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

Fair, the analogy isn't perfect. But your claim is completely dumb. It's a burglar suing you because he broke his arm breaking into your house.

Prejudicial language, assumes facts not in evidence.

Prejudicial language, assumes facts not in evidence.Your analogy of the burglar breaking into your house assumes criminality, which is in fact the issue being debated here. For a more neutral analogy on the same grounds, look at booby trapping your own property. This is quite illegal, because it could all too easily injure or kill lawful visitors (including emergency services) as well as the criminals you may have intended to catch.

OK, fine, but you're not helping your analogy at all by poking holes in mine.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin The law says nothing whatsoever about learning from copyrighted works. And one of the most fundamental principles of the rule of law is, that which is not prohibited, you are free to do. Simply because a bunch of modern-day Luddites look at this and say "COPYRIGHT VIOLASHUNNN!!!" does not make it one.

Where does the law create the exact distinction between learning and copying, then? How much of the original content is this "learned" content allowed to contain without being a derivative work? 10%? 50%? Everything but with a single bit difference?

What is the legal status of an art student painting a copy of an existing painting? (Hint: this is a non-hypothetical thing that art students do all the time in order to improve their skills.)

Usually they're painting works in the public domain. Otherwise it's a derivative work according to the law, like it or not.

Now go ahead and define how much data has to differ from the original to be treated as learned instead of copying.Oh, that one's easy. In Authors Guild v. Google, the Second Circuit ruled that transformative fair use remains transformative fair use even when dealing with a 100% verbatim copy. (The Authors Guild appealed but the Supreme Court denied cert, leaving the ruling intact.) And given that generative AIs do not produce 100% verbatim copies as the training process is necessarily a form of lossy compression...

-

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

Fair, the analogy isn't perfect. But your claim is completely dumb. It's a burglar suing you because he broke his arm breaking into your house.

Prejudicial language, assumes facts not in evidence.

Prejudicial language, assumes facts not in evidence.Your analogy of the burglar breaking into your house assumes criminality, which is in fact the issue being debated here. For a more neutral analogy on the same grounds, look at booby trapping your own property. This is quite illegal, because it could all too easily injure or kill lawful visitors (including emergency services) as well as the criminals you may have intended to catch.

OK, fine, but you're not helping your analogy at all by poking holes in mine.

My analogy is, learning is lawful and requires neither license nor consent, and putting "on a computer" on the end does not make it something fundamentally different from learning.

-

@Mason_Wheeler said in I, ChatGPT:

Oh, that one's easy. In Authors Guild v. Google, the Second Circuit ruled that transformative fair use remains transformative fair use even when dealing with a 100% verbatim copy.

So I can copy anything I want, by transformatively slapping a "look, it's AI" heading above it.

I'll go right ahead and sell some Windows 11 licenses.

Doubt that will fly.And given that generative AIs do not produce 100% verbatim copies as the training process is necessarily a form of lossy compression...

assumes facts not in evidence.

assumes facts not in evidence.Probably, but not necessarily. There's nothing technically stopping it from having a non-lossy copy of parts of the data set. But that's just a technical side note, since lossy copies are just as fine, otherwise Netflix wouldn't need to buy licenses if they tuned their codecs just right.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

Fair, the analogy isn't perfect. But your claim is completely dumb. It's a burglar suing you because he broke his arm breaking into your house.

Prejudicial language, assumes facts not in evidence.

Prejudicial language, assumes facts not in evidence.Your analogy of the burglar breaking into your house assumes criminality, which is in fact the issue being debated here. For a more neutral analogy on the same grounds, look at booby trapping your own property. This is quite illegal, because it could all too easily injure or kill lawful visitors (including emergency services) as well as the criminals you may have intended to catch.

OK, fine, but you're not helping your analogy at all by poking holes in mine.

My analogy is, learning is lawful and requires neither license nor consent, and putting "on a computer" on the end does not make it something fundamentally different from learning.

I already said that up thread and so what? There are very limited reasons why someone should have a veto over what someone else posts on their webpage but this clearly ain't one of those no matter how many times you try to change the subject like this.

-

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference. It's a civil tort that you can get sued over, and if you did this and got sued for it, you would lose. It's not a veto per se, but it makes it a very bad idea for you to do this.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

Oh, that one's easy. In Authors Guild v. Google, the Second Circuit ruled that transformative fair use remains transformative fair use even when dealing with a 100% verbatim copy.

So I can copy anything I want, by transformatively slapping a "look, it's AI" heading above it.

I'll go right ahead and sell some Windows 11 licenses.

Doubt that will fly.And given that generative AIs do not produce 100% verbatim copies as the training process is necessarily a form of lossy compression...

assumes facts not in evidence.

assumes facts not in evidence.Probably, but not necessarily. There's nothing technically stopping it from having a non-lossy copy of parts of the data set. But that's just a technical side note, since lossy copies are just as fine, otherwise Netflix wouldn't need to buy licenses if they tuned their codecs just right.

Once again completely ignoring the most salient piece of what I wrote: transformative fair use.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

-

@Mason_Wheeler maybe if you defined at what point your copy becomes transformative and why it should be fair use to mass copy it.

-

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

-

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point in a training data set comprising billions of data points. The thing you are saying AIs are doing that is so terrible is. Not. Happening.

No one is making mass copies of art to begin with. Works are being included as one single data point in a training data set comprising billions of data points. The thing you are saying AIs are doing that is so terrible is. Not. Happening.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference. It's a civil tort that you can get sued over, and if you did this and got sued for it, you would lose. It's not a veto per se, but it makes it a very bad idea for you to do this.

Different people reading the same text or looking at the same picture will sometimes learn very different things from it. So even if one were to go along with the less than convincing argument that 'putting "on a computer" on the end does not make it something fundamentally different', why would there be an obligation for me to design my published material so that everybody will always learn the same thing from it, and how would that even be enforceable? There have been adversarial caps designed to confuse face recognition for a long time, am I liable for publishing a picture of someone wearing one? And most importantly (and remembering that most fundamental of principles of the rule of law: that which is not prohibited, you are free to do), based on what law?

-

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

"If your server got hacked by a buffer overflow, it's your own fault for running code that was incapable of processing the hacker's input the way you wanted it to be processed. You are free to update your code to process malicious input differently."

This may be

but legally the originator of the malicious input is not in the clear, nor should he be.

but legally the originator of the malicious input is not in the clear, nor should he be.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

The thing you are saying AIs are doing that is so terrible

You're confusing intent. I'm not saying what they're doing is terrible, I'm saying it's breaking copyright and just because you call it AI, or "we couldn't make profits without breaking copyright" is no reason to treat it differently from everybody else. If you don't like the copyright laws, change them instead of applying them arbitrarily to big players.

is. Not. Happening.

It is. There's been enough proof of replicas in this thread, and you just said yourself that the works are being included without giving a definition at which point that inclusion stops being a copy.

So you've got the copy part, the mass part should be obvious.

-

@LaoC said in I, ChatGPT:

why would there be an obligation for me to design my published material so that everybody will always learn the same thing from it, and how would that even be enforceable?

There's a big difference between inaction and deliberate action. Please don't conflate the two. I'm not saying you should be obligated to do anything; I'm saying you should be (and already are) prohibited from taking affirmative steps to maliciously sabotage someone else's business.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

"If your server got hacked by a buffer overflow, it's your own fault for running code that was incapable of processing the hacker's input the way you wanted it to be processed. You are free to update your code to process malicious input differently."

This may be

but legally the originator of the malicious input is not in the clear, nor should he be.

but legally the originator of the malicious input is not in the clear, nor should he be.Yes, and if the artist went to the scraper's server and did that it would be a problem. But if you copy some text from a site and submit it to your own application and get some SQL injection it's on you for not fixing your site to avoid SQL injection, not the people who posted a query on their page.

-

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

"If your server got hacked by a buffer overflow, it's your own fault for running code that was incapable of processing the hacker's input the way you wanted it to be processed. You are free to update your code to process malicious input differently."

This may be

but legally the originator of the malicious input is not in the clear, nor should he be.

but legally the originator of the malicious input is not in the clear, nor should he be.Yes, and if the artist went to the scraper's server and did that it would be a problem. But if you copy some text from a site and submit it to your own application and get some SQL injection it's on you for not fixing your site to avoid SQL injection, not the people who posted a query on their page.

The scraping doesn't change anything. Learning from material that is made lawfully available to you remains lawful.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

"If your server got hacked by a buffer overflow, it's your own fault for running code that was incapable of processing the hacker's input the way you wanted it to be processed. You are free to update your code to process malicious input differently."

This may be

but legally the originator of the malicious input is not in the clear, nor should he be.

but legally the originator of the malicious input is not in the clear, nor should he be.If there supposedly isn't any legally significant distinction between human learning and computer learning, who is to say which interpretation is even the correct one? I'm publishing a picture of a purse. Most humans happen to see a cow on it, but so what? It's still a picture of a purse. Computers can learn that all they like. What conclusions they draw from it is on them.

@Mason_Wheeler said in I, ChatGPT:

@LaoC said in I, ChatGPT:

why would there be an obligation for me to design my published material so that everybody will always learn the same thing from it, and how would that even be enforceable?

There's a big difference between inaction and deliberate action. Please don't conflate the two. I'm not saying you should be obligated to do anything; I'm saying you should be (and already are) prohibited from taking affirmative steps to maliciously sabotage someone else's business.

Writing texts with a "double entendre" definitely falls under deliberate action.

Edit: also, can I sue the publisher of "The Dress" for poisoning my brain with wrong information, which they clearly intended? I'm sure M.C. Escher is responsible for my kid breaking a leg while falling down the stairs, too, y'honor, the malicious intent is bloody obvious!

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

You're conflating the input and the output. An ephemeral copy is made on the input side, not for the purpose of creating copies, but as an unavoidable byproduct of the way the Internet works. Viewing something necessarily involves copying it over the network, and is not a copyright infringement. This has been a well-settled matter since the 90s.

Taking this copy and studying it to learn from it is a clear example of transformative fair use: using the work for a completely different purpose than what the author intended when creating the work.

The thing you are saying AIs are doing that is so terrible

You're confusing intent. I'm not saying what they're doing is terrible, I'm saying it's breaking copyright and just because you call it AI, or "we couldn't make profits without breaking copyright" is no reason to treat it differently from everybody else. If you don't like the copyright laws, change them instead of applying them arbitrarily to big players.

No, I'm saying it's not breaking copyright because copyright does not apply here.

is. Not. Happening.

It is. There's been enough proof of replicas in this thread

Where? I haven't seen any at all, in this thread or elsewhere. I've seen a few things that might look similar if you squint hard enough, but no replicas. (And no, the New York Times case does not count. If you look at the Times' own filings describing how they got the allegedly copied material to begin with, their entire case falls apart by their own admissions.)

and you just said yourself that the works are being included without giving a definition at which point that inclusion stops being a copy.

...so you have no idea how a training dataset actually works. And yet you try to engage in serious discussions about it anyway. Figures.

Without getting into too much technical detail, the works are being included in the input; what actually comes out the other end and is used to generate new works resembles a database of hashes far more than it does a big folder full of image files.

-

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@boomzilla Deliberate sabotage of someone else's business is known as tortious interference.

Indeed, and like I said, if they were doing this to the scraper's servers you'd have a case. They're not and you don't.

Why? It's not the server being sabotaged; it's the AI. How is the scraper's server at all relevant?

If it's being sabotaged it's by code that can't process the images the way the AI scrapers want it to be processed. They are free to update their code to process the images differently.

"If your server got hacked by a buffer overflow, it's your own fault for running code that was incapable of processing the hacker's input the way you wanted it to be processed. You are free to update your code to process malicious input differently."

This may be

but legally the originator of the malicious input is not in the clear, nor should he be.

but legally the originator of the malicious input is not in the clear, nor should he be.Yes, and if the artist went to the scraper's server and did that it would be a problem. But if you copy some text from a site and submit it to your own application and get some SQL injection it's on you for not fixing your site to avoid SQL injection, not the people who posted a query on their page.

The scraping doesn't change anything. Learning from material that is made lawfully available to you remains lawful.

It's funny that you think I or my post disagree with anything that you've replied with right there.

-

Funny from TFA

-

Mason is wrong but he doesn’t understand why he is wrong.

If I put something online, I’m not making any guarantees as to what that is. Doesn’t matter if it’s photos or something else. It is simply what it is, and I’m free to put it out there.

What you do with that is sort of your problem, not mine.

I put up a picture that, to humans appears to be a picture of cute puppies. Where exactly did I put up a sign saying “this is a picture of cute puppies that you can use for any purpose”? Answer: I didn’t.

Where did I put up a sign saying “this is a cute picture of puppies that, unbeknown to me, contains a virus in the metadata that a bad JPEG parser will buffer overflow and crash and maybe infect your system”? Answer: I didn’t.

Where did I put up a sign saying “this is a cute picture of puppies that I deliberately infected for shits and giggles to see what would happen with bad JPEG parsers”? Answer: I didn’t.

Where did I put up a sign saying “this is a cute picture of puppies that, unbeknown to me, contains steganography data encoding an arbitrary copy of an illegal work”? Answer: I didn’t.

What you find online on a public server is what you find online. If you choose to feed it into your training system, it’s on you if your system does something rogue with it.

And if you can’t handle adversarial material from people who want to share artwork but not feed your machine (equivalent to robots.txt restrictions being honoured, which we know they’re not), you have no business accepting the open sewer of the internet, let alone getting pissy at bad actors who have poisoned the well somewhere along the line.

It’s really no different from picking up an appliance left on the side of the road - if it goes wrong, if it blows your house up, that’s on you. Even if there’s a sign on it saying “free to a good home”, but it’s faulty, that’s on you not on them.

The defence of “but it’s boobytrapped” is nebulous. If I put out an appliance that I know is faulty but I don’t know how faulty… am I at fault if it blows up and kills you? After all, when I put it outside, it might have just not worked. I’m not warranting to you that it’s safe.

Similarly if you leave food out: unless I’m explicitly warranting to you that the food is safe to eat (like, say, a restaurant), I’m not liable for you eating food left outside. Not even if it has a “free food” sign. This is why regulations exist, to cover for such things.

If you scrape images off the web, you don’t get any protections for the provenance of the image and nor should you. The only time that’s a thing is when you are at a place whereby you acquire the rights to use an image and as part of that transaction (which may be for zero monetary compensation, but it’s still a transaction) giving you rights including right of redress in the event that the image is not functioning as described.

It’s like any other good in that respect. Want to guarantee the image is safe? Obtain cleared rights from the copyright holder - and if they still give you a poisoned image, you have legal means of redress.

Actually now that I think about it… bitching about “boo hoo I scraped poisoned images off the web” isn’t really like the above examples. It’s digital roadkill - it’s there, you have no idea how it got there and if you get food poisoning from it, that’s 100% on you.

-

-

@boomzilla said in I, ChatGPT:

@Arantor said in I, ChatGPT:

It’s digital roadkill

Finally! A car analogy.

Now it all makes sense

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

You're conflating the input and the output. An ephemeral copy is made on the input side, not for the purpose of creating copies, but as an unavoidable byproduct of the way the Internet works. Viewing something necessarily involves copying it over the network, and is not a copyright infringement. This has been a well-settled matter since the 90s.

Taking this copy and studying it to learn from it is a clear example of transformative fair use: using the work for a completely different purpose than what the author intended when creating the work.

The input is used to create the output and at least partially contains the information. See your own "lossy compression" argument above. MP4 is also lossy compression, yet Netflix needs a license to stream their content.

What you fail to provide in your argument that this is fundamentally different is any kind of definition where it stops being one and becomes the other.The thing you are saying AIs are doing that is so terrible

You're confusing intent. I'm not saying what they're doing is terrible, I'm saying it's breaking copyright and just because you call it AI, or "we couldn't make profits without breaking copyright" is no reason to treat it differently from everybody else. If you don't like the copyright laws, change them instead of applying them arbitrarily to big players.

No, I'm saying it's not breaking copyright because copyright does not apply here.

This paragraph wasn't about "what you're saying" but a clarification about your claims what I'm saying. At least bother reading.

Besides, you just used "fair use" as a defense above, which applies to copyright. If there was no copyright question, there was no "fair use" to claim.

is. Not. Happening.

It is. There's been enough proof of replicas in this thread

Where? I haven't seen any at all, in this thread or elsewhere. I've seen a few things that might look similar if you squint hard enough, but no replicas. (And no, the New York Times case does not count. If you look at the Times' own filings describing how they got the allegedly copied material to begin with, their entire case falls apart by their own admissions.)

and you just said yourself that the works are being included without giving a definition at which point that inclusion stops being a copy.

...so you have no idea how a training dataset actually works. And yet you try to engage in serious discussions about it anyway. Figures.

I probably have a much better idea of how it works than you do, TYVM.

Without getting into too much technical detail, the works are being included in the input; what actually comes out the other end and is used to generate new works resembles a database of hashes far more than it does a big folder full of image files.

Not at all, since a database of hashes couldn't be used to recreate anything. Your argument is just "AI is magic" mumbo jumbo. The point is that it's not. We've had that argument in this thread before, it boils down to "I don't understand the encoding

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.

-

@topspin said in I, ChatGPT:

I probably have a much better idea of how it works than you do, TYVM.

We have at least a couple of threads of Mason being a copyright internet kook.

-

@boomzilla There's a few superfluous words in that sentence.

-

@topspin said in I, ChatGPT:

@boomzilla There's a few superfluous words in that sentence.

@boomzilla said in I, ChatGPT:

@topspin said in I, ChatGPT:

I probably have a much better idea of how it works than you do, TYVM.

We have at least a couple of threads

of Mason being a copyright internet kook.

-

@LaoC Mockery remains the surest way to detect someone who has no case, is fully aware of it, and is trying to distract the audience from this.

-

@Mason_Wheeler said in I, ChatGPT:

@LaoC Mockery remains the surest way to detect someone who has no case, is fully aware of it, and is trying to distract the audience from this.

-

@Mason_Wheeler said in I, ChatGPT:

@LaoC Mockery remains the surest way to detect someone who has no case, is fully aware of it, and is trying to distract the audience from this.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

You're conflating the input and the output. An ephemeral copy is made on the input side, not for the purpose of creating copies, but as an unavoidable byproduct of the way the Internet works. Viewing something necessarily involves copying it over the network, and is not a copyright infringement. This has been a well-settled matter since the 90s.

Taking this copy and studying it to learn from it is a clear example of transformative fair use: using the work for a completely different purpose than what the author intended when creating the work.

The input is used to create the output and at least partially contains the information. See your own "lossy compression" argument above. MP4 is also lossy compression, yet Netflix needs a license to stream their content.

Yes, it does. But Netflix does not need a license to watch other people's movies, analyze them, and then produce their own new content that may be superficially similar but is still new content. Which they actually do.

The thing you are saying AIs are doing that is so terrible

You're confusing intent. I'm not saying what they're doing is terrible, I'm saying it's breaking copyright and just because you call it AI, or "we couldn't make profits without breaking copyright" is no reason to treat it differently from everybody else. If you don't like the copyright laws, change them instead of applying them arbitrarily to big players.

No, I'm saying it's not breaking copyright because copyright does not apply here.

This paragraph wasn't about "what you're saying" but a clarification about your claims what I'm saying. At least bother reading.

Besides, you just used "fair use" as a defense above, which applies to copyright. If there was no copyright question, there was no "fair use" to claim.

Fair use doesn't apply to copyright so much as makes copyright not apply. Fair use is the First Amendment right to free speech, in the context of copyright. Many people mistakenly think that fair use is an exception to copyright, when the most fundamental truth is, copyright is an exception to the First Amendment.

is. Not. Happening.

It is. There's been enough proof of replicas in this thread

Where? I haven't seen any at all, in this thread or elsewhere. I've seen a few things that might look similar if you squint hard enough, but no replicas. (And no, the New York Times case does not count. If you look at the Times' own filings describing how they got the allegedly copied material to begin with, their entire case falls apart by their own admissions.)

and you just said yourself that the works are being included without giving a definition at which point that inclusion stops being a copy.

...so you have no idea how a training dataset actually works. And yet you try to engage in serious discussions about it anyway. Figures.

I probably have a much better idea of how it works than you do, TYVM.

Then why are you saying things that are completely wrong?

Without getting into too much technical detail, the works are being included in the input; what actually comes out the other end and is used to generate new works resembles a database of hashes far more than it does a big folder full of image files.

Not at all, since a database of hashes couldn't be used to recreate anything.

- Which is why I said "resembles" rather than "is."

- There are still no examples of AI being used to recreate anything, so what exactly is your objection?

Your argument is just "AI is magic" mumbo jumbo. The point is that it's not. We've had that argument in this thread before, it boils down to "I don't understand the encoding

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.If so, then how do you extract the Mona Lisa from the "compressed" dataset?

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

You're conflating the input and the output. An ephemeral copy is made on the input side, not for the purpose of creating copies, but as an unavoidable byproduct of the way the Internet works. Viewing something necessarily involves copying it over the network, and is not a copyright infringement. This has been a well-settled matter since the 90s.

Taking this copy and studying it to learn from it is a clear example of transformative fair use: using the work for a completely different purpose than what the author intended when creating the work.

The input is used to create the output and at least partially contains the information. See your own "lossy compression" argument above. MP4 is also lossy compression, yet Netflix needs a license to stream their content.

Yes, it does. But Netflix does not need a license to watch other people's movies, analyze them, and then produce their own new content that may be superficially similar but is still new content. Which they actually do.

But if they mishear some dialog because of poor sound mixing those people would be on the hook for ruining Netflix's movies when their dialog is dumb, right?

-

@boomzilla said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@LaoC Mockery remains the surest way to detect someone who has no case, is fully aware of it, and is trying to distract the audience from this.

@boomzilla Interestingly enough, the people I run into outside of this one highly specific echo chamber are not. Given the function of an echo chamber, condensing the entirety of it into counting as one person is a decent heuristic for the purposes of this meme.

-

@Mason_Wheeler two possibilities come to mind.

Either a) we see through the instances of Dunning-Kruger and are prepared to call you on it (and they’re not able to see it), or b) they see through it just fine but they like you enough to not call you out on your obvious bollocks, when it is obviously bollocks.

There is a sort of implied option c) that you do know enough to have broadly convinced them of knowing things, that they’ve bought into your obvious bollocks anyway because it’s you saying it and they don’t know any different, but that feels more like a side effect of a) rather than its own thing.

-

@Arantor There is, of course, another obvious possibility that you're not even considering here.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin

No one is making mass copies of art to begin with. Works are being included as one single data point

No one is making mass copies of art to begin with. Works are being included as one single data pointI.e. they're copied.

in a training data set comprising billions of data points.

That you're breaking the laws, as written, billions of times doesn't make it any better.

You're conflating the input and the output. An ephemeral copy is made on the input side, not for the purpose of creating copies, but as an unavoidable byproduct of the way the Internet works. Viewing something necessarily involves copying it over the network, and is not a copyright infringement. This has been a well-settled matter since the 90s.

Taking this copy and studying it to learn from it is a clear example of transformative fair use: using the work for a completely different purpose than what the author intended when creating the work.

The input is used to create the output and at least partially contains the information. See your own "lossy compression" argument above. MP4 is also lossy compression, yet Netflix needs a license to stream their content.

Yes, it does. But Netflix does not need a license to watch other people's movies, analyze them, and then produce their own new content that may be superficially similar but is still new content. Which they actually do.

The thing you are saying AIs are doing that is so terrible

You're confusing intent. I'm not saying what they're doing is terrible, I'm saying it's breaking copyright and just because you call it AI, or "we couldn't make profits without breaking copyright" is no reason to treat it differently from everybody else. If you don't like the copyright laws, change them instead of applying them arbitrarily to big players.

No, I'm saying it's not breaking copyright because copyright does not apply here.

This paragraph wasn't about "what you're saying" but a clarification about your claims what I'm saying. At least bother reading.

Besides, you just used "fair use" as a defense above, which applies to copyright. If there was no copyright question, there was no "fair use" to claim.

Fair use doesn't apply to copyright so much as makes copyright not apply. Fair use is the First Amendment right to free speech, in the context of copyright. Many people mistakenly think that fair use is an exception to copyright, when the most fundamental truth is, copyright is an exception to the First Amendment.

is. Not. Happening.

It is. There's been enough proof of replicas in this thread

Where? I haven't seen any at all, in this thread or elsewhere. I've seen a few things that might look similar if you squint hard enough, but no replicas. (And no, the New York Times case does not count. If you look at the Times' own filings describing how they got the allegedly copied material to begin with, their entire case falls apart by their own admissions.)

and you just said yourself that the works are being included without giving a definition at which point that inclusion stops being a copy.

...so you have no idea how a training dataset actually works. And yet you try to engage in serious discussions about it anyway. Figures.

I probably have a much better idea of how it works than you do, TYVM.

Then why are you saying things that are completely wrong?

I'm not, you are. Just because you fail to understand something or give a coherent reply doesn't make other people wrong. But I assumed it was just your attempt at mocking, anyway, minutes before you forgot and went ahead exposing yourself with a statement how mockery is a sure way to detect someone who has no case.

Without getting into too much technical detail, the works are being included in the input; what actually comes out the other end and is used to generate new works resembles a database of hashes far more than it does a big folder full of image files.

Not at all, since a database of hashes couldn't be used to recreate anything.

- Which is why I said "resembles" rather than "is."

- There are still no examples of AI being used to recreate anything, so what exactly is your objection?

You mean besides the example in your very next sentence?

Your argument is just "AI is magic" mumbo jumbo. The point is that it's not. We've had that argument in this thread before, it boils down to "I don't understand the encoding

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.If so, then how do you extract the Mona Lisa from the "compressed" dataset?

What is that even supposed to mean? You tell it "create an image of the Mona Lisa" and it gives you one.

If the AI "resembled" a database of hashes, you could not get anything meaningful out of it, certainly not a faithful replica of the Mona Lisa, since hashes are one-way by design. If, instead, the it very loosely "resembled" a database of images, then you could very well recreate a replica of the Mona Lisa with it.

And since you can, you just showed yourself that your analogy is completely wrong.Or are you trying to argue the reverse, that AI does resemble a database of hashes and can replicate the Mona Lisa, so therefore you can reverse hashes? I'll give you the benefit of the doubt and assume you're not trying to make an argument that hilariously wrong.

-

-

@Mason_Wheeler said in I, ChatGPT:

@Arantor There is, of course, another obvious possibility that you're not even considering here.

Having read your posts, it was the obvious thing to do.

-

@topspin said in I, ChatGPT:

Without getting into too much technical detail, the works are being included in the input; what actually comes out the other end and is used to generate new works resembles a database of hashes far more than it does a big folder full of image files.

Not at all, since a database of hashes couldn't be used to recreate anything.

- Which is why I said "resembles" rather than "is."

- There are still no examples of AI being used to recreate anything, so what exactly is your objection?

You mean besides the example in your very next sentence?

That's called "heading off an obvious but wrong response."

Your argument is just "AI is magic" mumbo jumbo. The point is that it's not. We've had that argument in this thread before, it boils down to "I don't understand the encoding

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.

so it's not an encoding". Providing a technically wrong analogy doesn't help that point. Stick to "lossy compression", at least that one is correct.If so, then how do you extract the Mona Lisa from the "compressed" dataset?

What is that even supposed to mean? You tell it "create an image of the Mona Lisa" and it gives you one.

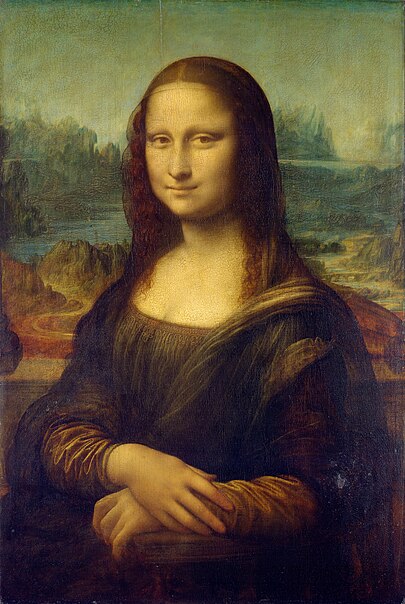

Dall-e:

Stable Diffusion:

The actual painting:

As you can see, asking an AI to produce a copy of even one of the most famous works of art of all time does not produce a copy of it. It produces something that is vaguely similar and recognizable as being inspired by the Mona Lisa, but nothing more.

If the AI "resembled" a database of hashes, you could not get anything meaningful out of it, certainly not a faithful replica of the Mona Lisa, since hashes are one-way by design. If, instead, the it very loosely "resembled" a database of images, then you could very well recreate a replica of the Mona Lisa with it.

And since you can, you just showed yourself that your analogy is completely wrong.Or are you trying to argue the reverse, that AI does resemble a database of hashes and can replicate the Mona Lisa, so therefore you can reverse hashes? I'll give you the benefit of the doubt and assume you're not trying to make an argument that hilariously wrong.

You're barking up the wrong tree. The property of "hashes" that I was using there to make the analogy was not "unable to reverse," but rather "jumbled data that bears no resemblance to the source."

-

@Mason_Wheeler said in I, ChatGPT:

@Arantor There is, of course, another obvious possibility that you're not even considering here.

Oh I considered it but I concluded it wasn’t worth the brain cell excitation to spend any more thought on it than the merest “could it be?”

-

@Mason_Wheeler said in I, ChatGPT:

Stable Diffusion:

The actual painting:

[snip]FFS.

@Arantor said in I, ChatGPT:

@DogsB the Twitter post I linked has some examples. In the interests of not making people go to Xitter I'll cross post.

As you can see, asking an AI to produce a copy of even one of the most famous works of art of all time does not produce a copy of it. It produces something that is vaguely similar and recognizable as being inspired by the Mona Lisa, but nothing more.

Yours is already obviously a derivative of the original (public domain) image, the one above is indistinguishable from "lossy compression." It is a copy.

If the AI "resembled" a database of hashes, you could not get anything meaningful out of it, certainly not a faithful replica of the Mona Lisa, since hashes are one-way by design. If, instead, the it very loosely "resembled" a database of images, then you could very well recreate a replica of the Mona Lisa with it.

And since you can, you just showed yourself that your analogy is completely wrong.Or are you trying to argue the reverse, that AI does resemble a database of hashes and can replicate the Mona Lisa, so therefore you can reverse hashes? I'll give you the benefit of the doubt and assume you're not trying to make an argument that hilariously wrong.

You're barking up the wrong tree.

So your argument is nonsense, that's not my fault.

The property of "hashes" that I was using there to make the analogy was not "unable to reverse," but rather "jumbled data that bears no resemblance to the source."

What the AI contains is data that creates a faithful replica of the image. The precise encoding is irrelevant. While it's not quite "a database of images", it does contain enough of the original input to create a copy. Qualitatively speaking, i.e. what you can do with it, not how it's implemented, at least for the Mona Lisa it is rather the "database of images" than the "database of hashes". Don't say "hashes" when the fundamental property of hashes is that you can't restore the original information when that is what you demonstrably can do.

"Jumbled data that bears no resemblance to the source" is just the "I don't understand the encoding" argument. An MP4 stream also looks like a jumbled mess when you don't know how to interpret it.

Now go ahead and define at what percentage of lossy your copy is just "learned information" and not a copy.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

Stable Diffusion:

The actual painting:

[snip]FFS.

@Arantor said in I, ChatGPT:

@DogsB the Twitter post I linked has some examples. In the interests of not making people go to Xitter I'll cross post.

As you can see, asking an AI to produce a copy of even one of the most famous works of art of all time does not produce a copy of it. It produces something that is vaguely similar and recognizable as being inspired by the Mona Lisa, but nothing more.

Yours is already obviously a derivative of the original (public domain) image, the one above is indistinguishable from "lossy compression." It is a copy.

Oh come on! This one's closer than the ones I managed to get out of it, I'll admit, but that's still clearly not a copy, "lossy" or otherwise. Not when Lisa's most famous attribute, her mysterious smile, is so visibly different. Putting the two side-by-side and claiming they're the same when anyone with eyes can clearly see that they're not borders on straight-up gaslighting.

So your argument is nonsense, that's not my fault.

...says the guy claiming things that are clearly not copies are copies.

The property of "hashes" that I was using there to make the analogy was not "unable to reverse," but rather "jumbled data that bears no resemblance to the source."

What the AI contains is data that creates a faithful replica of the image.

Repeating the same false claim over and over again does not make it true.

-

@Mason_Wheeler said in I, ChatGPT:

Oh come on! This one's closer than the ones I managed to get out of it, I'll admit, but that's still clearly not a copy, "lossy" or otherwise. Not when Lisa's most famous attribute, her mysterious smile, is so visibly different.

So it's a 99% copy? Because it clearly is a copy. You still haven't defined where to draw the line.

Besides, ask anybody without the context of this discussion if the above is an image of "the Mona Lisa" and they will answer yes.

Putting the two side-by-side and claiming they're the same when anyone with eyes can clearly see that they're not borders on straight-up gaslighting.

I didn't say they're identical, stop making shit up. If you think a court of law, in a hypothetical scenario where the Mona Lisa wasn't in the public domain, wouldn't rule that this is clearly a derived work, fair use or not, arguing with you is completely pointless.

@Mason_Wheeler said in I, ChatGPT:

Repeating the same false claim over and over again does not make it true.

Which is exactly what you're doing.

You make a bullshit claim, fail to define it, mock that people don't understand how things work technically, then demonstrate your lack of technical understanding. Repeat ad nauseam.

-

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

Oh come on! This one's closer than the ones I managed to get out of it, I'll admit, but that's still clearly not a copy, "lossy" or otherwise. Not when Lisa's most famous attribute, her mysterious smile, is so visibly different.

So it's a 99% copy? Because it clearly is a copy. You still haven't defined where to draw the line.

Because your "line" is irrelevant. Now that we've established that AIs can't create actual copies in the first place, let's move on to the actual issue that the Luddites are using "it's a big copy machine" as a shield for: the actual use case for generative AI. Because aside from people specifically trying, for whatever reason, to prove it's possible to find a way to twist an AI's arm into producing a "close copy," nobody is doing this. And why would they? Why would you want to ask an AI for an image of the Mona Lisa when you can get an exact replication from going to the Wikipedia article? Why would anyone want that?

The real issue is that an AI can learn from existing work and produce new work that is similar. A prompt engineer with the skill to get a "close copy" of the Mona Lisa out of an AI is also able to produce a picture of something entirely unrelated, such as a modern-day public figure, that looks like it was painted by Leonardo da Vinci. And that has the Luddites up in arms, for the same reason Luddites have always gotten up in arms: the fear that they will be made irrelevant by these new, scary machines. And so, as with the original Luddites throwing their sabots into machinery, leading to the coinage of the term sabotage, we see them doing the exact modern-day equivalent.

And just like always, today's Luddites are wrong, both morally and factually. Sure, the workers metaphorically marching with tiki torches and shouting "machines will not replace us!" got wiped out, because they were idiots, but the ones who embraced the new technology have always flourished, because their prior experience made them the ones with the best capability to use it effectively! Put in the context of the current issue, "skilled artists make the best prompt engineers."

Do you remember when Photoshop came out, and a lot of existing photo artists made a big uproar about how using Photoshop is "cheating" because it makes image editing "too easy" and anyone who used it didn't deserve to be called a real artist? Sounds pretty familiar, doesn't it? Because we always see this exact same circus, and it always turns out to be nothing. Today, Photoshop is simply the default; if you create digital art, everyone assumes that you had help from Photoshop to do it, and no one sees anything wrong with that. In another 10 years, generative AI will be the default in exactly the same way.

I didn't say they're identical, stop making shit up. If you think a court of law, in a hypothetical scenario where the Mona Lisa wasn't in the public domain, wouldn't rule that this is clearly a derived work, fair use or not, arguing with you is completely pointless.

No, I think that in a court of law this would never get brought up in the first place, because no one is using generative AI to produce copies.

-

@Mason_Wheeler of course it would, as a trivial example of “here is the original, here is something it made, most people can’t tell the difference”. Now tell that to Disney and watch their lawyers go nuts explaining why this is so bad for creativity.

Remember in a courtroom, you’re explaining it to a group of 12 ordinary people who will be swayed by arguments like that.

But you know that and you’re going to keep playing with a straw army anyway.

-

@Mason_Wheeler said in I, ChatGPT:

@topspin said in I, ChatGPT:

@Mason_Wheeler said in I, ChatGPT:

@topspin Research is one thing. Actively deploying adversarial images to try to screw up real-world AIs is another, and speed limits are a prefect example of why. If you confused a self-driving car into thinking it had the right to go 70 in a residential neighborhood, and something went wrong, the blood would be on your hands.

Sure sure. And if someone posts these images on their website, together with an article describing the research, then the scrapers come along and incorporate that shit into their models without asking, they're violating copyright and you want to blame the researchers instead.

The fix is simple: stop using unlicensed images.Once again, the question no one wants to answer: where does any requirement exist to obtain a license for learning?

In the fucking license agreement god fucking dammit. Did you even read it? On almost all image-publishing websites, you are expressly disallowed to use the picture for any purpose other than viewing it yourself, and only yourself, and only for personal enjoyment and no other reason. You cannot save it, you cannot send it to anyone (except as a link), you cannot edit it, you cannot do anything. And in particular - as listed in a separate bullet point - you are prohibited from using any automation tools to access the image by any other means than physically clicking your mouse (or hitting enter, or touching the screen) to open the link.

It's not their fault everyone routinely ignores copyright everywhere.

-

@Arantor said in I, ChatGPT:

Remember in a courtroom, you’re explaining it to a group of 12 ordinary people who will be swayed by arguments like that.

Which is why you keep strictly to the facts and not emotional appeals to plausible-sounding nonsense: because even if the nonsense will sway a bunch of untrained jurors, the facts are far more likely to win on appeal.

-

@Mason_Wheeler so what’s your excuse?