Can I borrow an apology?

-

If you think Rust apologists are bad, you've never seen a C++ apologist.

I'll skip over the fatal misconceptions they have and focus on just the outright lies.

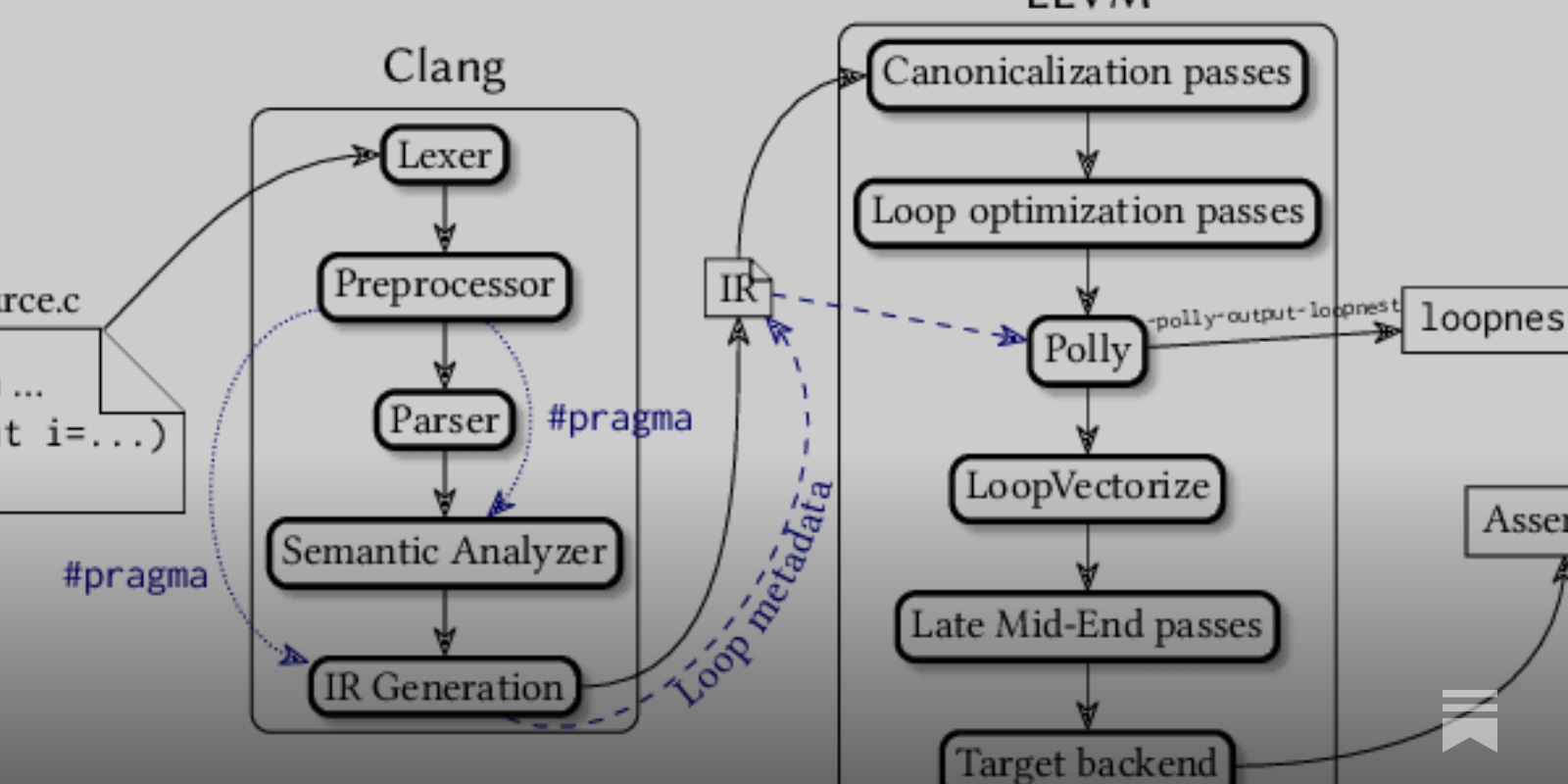

In principle, both Rust and C++ are compiled languages that use 95% of the LLVM compiler infrastructure. Rust and C++ are translated into IR, where most (arguably, all) optimizations are made. (...) But this is where the similarities end.

A parenthesis here [one lie]. Rust claims [two lies] that there are “Rust-specific optimizations” done at MIR level that will, in the future, impact performance significantly [three lies]. I have found a list [four lies] of such transformations, but I could not assess nor prove [five lies] that the performance impact is real or just rhetorical [and a false dichotomy on top].

Rust tends to be more strict than C++ - it is its raison d’etre - and that means more real-time checks. Although integer underflows and overflows are only checked in debug mode, memory accesses in arrays are constantly checked for bounds unless you’re in unsafe mode, which defeats the purpose.
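For reference, a minimal std-only sketch of what the quoted sentence glosses over (the `sum_first` helper is hypothetical): by default Rust panics on integer overflow in debug builds and wraps in release builds unless `overflow-checks` is enabled in the Cargo profile, but safe code can also pick the behaviour explicitly, and out-of-bounds access can be handled without panicking or dropping into `unsafe`.

```rust
// Hypothetical helper: sum the first `n` values, reporting failure instead of panicking.
fn sum_first(values: &[u32], n: usize) -> Option<u32> {
    // `get` with a range returns None instead of panicking when it is out of bounds.
    let prefix = values.get(..n)?;
    // `checked_add` returns None on overflow in every build profile.
    prefix.iter().try_fold(0u32, |acc, &x| acc.checked_add(x))
}

fn main() {
    let v = [1u32, 2, 3];
    println!("{:?}", sum_first(&v, 2)); // Some(3)
    println!("{:?}", sum_first(&v, 5)); // None: range out of bounds
    // Explicit wrap-around, identical in debug and release builds.
    println!("{}", u8::MAX.wrapping_add(1)); // 0
    // Iterating instead of indexing needs no per-element index check at all.
    let total: u32 = v.iter().sum();
    println!("{total}"); // 6
}
```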

C, its antecessor, was created with the use case of it being a low level language, just a tad bit above assembly.

C’s pointers have a simple and intuitive meaning, allowing even junior developers to grasp and even write statements that would be extremely hard to understand in assembly.

This means that a good developer who knows how to take advantage of cache/locality, will have a good time implementing such algorithms and data structure with C++ and will very likely struggle with Rust for the same task.

Rust is an offspring of the LLVM project - and so is Julia, Kotlin and Scala. [my favorite xDDDD wrong VM, dude]

Without LLVM Rust would not have existed.

Rust mandates the use of its own compiler - rustc - which is a top driver of the LLVM stack, pretty much as clang is for C++. C++ on the other hand, has dozens of good quality compilers available in many platforms.

Although the scenario is changing rapidly, the pool of engineers with C++ background is much larger than the pool of Rust developers. Also, the quantity of teaching material and resources available to learn the language is currently overwhelmingly more abundant on the C++ side.

So if I want to bet safe in the development of a new system, I have to go with C++.

In many years of coding C++, very rarely I experienced a stack overflow or segmentation fault. It is literally not an issue in every codebase I have worked with. [yes, this is definitely a lie]

The large majority of C++ applications are non-public facing. So much that most datacenter machines run with mitigations turned off since there is absolutely no possibility of contact of those machines with bad actors.

First, crashes can happen in any language, with the same frequency.

However segfaults can be caught with a signal trap and handled cleanly like any Java/C# exceptions.

the safety benefits are not really that pressing for most applications

its infamous long and hard learning curve

And our grand total comes to exactly twenty. You need twenty lies if you want to argue C++ is better than Rust.

-

@Gustav He clearly knows nothing about Rust and not much more about C++.

-

-

If you think Rust apologists are bad, you've never seen a C++ apologist.

That itself has to be some kind of fallacy or other, on your part.

I'll skip over the fatal misconceptions they have and focus on just the outright lies.

A bunch of those aren't particularly convincing, but also not lies. For example, what's supposed to be the lie about this?

C, its antecessor, was created with the use case of it being a low level language, just a tad bit above assembly.

Or this one (Rust is certainly infamous for it, whether deserved or not):

its infamous long and hard learning curve

But instead of arguing about where you're wrong to say the article is lying, let's instead look at the more hilariously wrong parts. We can start right with the one above, because C++'s learning curve is even more ridiculous. If you claim you know C++, you're either writing papers for the standard or are somewhere in Dunning-Kruger land.

However segfaults can be caught with a signal trap and handled cleanly like any Java/C# exceptions.

Back in the day I had a coworker - ten years senior to me when I had just started - who compiled his code with `/EHa` so he could `catch(...)` all the segfaults he produced. Absolutely horrible programmer.

The quote above is enough to disregard everything else in the article.

C’s pointers have a simple and intuitive meaning, allowing even junior developers to grasp and even write statements that would be extremely hard to understand in assembly.

That one is hilarious because it's what C programmers tell you when explaining why C is nice and beautiful and C++ is horrible: it's simple and maps nicely to machine code. (The truth is that both are horrible, but at least C++ lets you create useful abstractions, while C still has string bugs and memory leaks and is overall just terrible.) It actually took the Rust people, who otherwise whine that APIs are defined by C, to point out that, no, the semantics of using pointers in C (and by extension C++) aren't actually "simple". They're somewhere between complex and not even well defined. (Cue provenance.)

-

For example, what's supposed to be the lie about this?

C, its antecessor, was created with the use case of it being a low level language, just a tad bit above assembly.

C exists because Ken Thompson couldn't port Fortran to PDP-7. Low-level has never been a design goal for C, they made it as high-level as they could get within technological limits. It only became low-level after everything even-lower-level died out.

Not really relevant to the larger point, but he's just so wrong here. People who don't know history should stop talking about history.

-

Low-level has never been a design goal for C, they made it as high-level as they could get within technological limits

Interesting point if true. But it's certainly not (only) this guy's fault, then, because the "portable assembler" nonsense has been parroted for ages.

couldn't port Fortran to PDP-7

ETA: do you have a story about that? I'm curious. Fortran shouldn't be ported to anything, but the grammar is actually simple compared to today's languages.

-

Low-level has never been a design goal for C, they made it as high-level as they could get within technological limits

Interesting point if true. But it's certainly not (only) this guy's fault, then, because the "portable assembler" nonsense has been parroted for ages.

couldn't port Fortran to PDP-7

ETA: do you have a story about that? I'm curious. Fortran shouldn't be ported to anything, but the grammar is actually simple compared to today's languages.

porting might also be a library/collection move, sort of like how a jre will emulate the javascript library to operate within a separate use case from its standard

-

ETA: do you have a story about that? I'm curious. Fortran shouldn't be ported to anything, but the grammar is actually simple compared to today's languages.

From The Development of the C Language by Dennis Ritchie, presented in 1993 and later published by the ACM:

[Ken] Thompson was faced with a hardware environment cramped and spartan even for the time: the DEC PDP-7 on which he started in 1968 was a machine with 8K 18-bit words of memory and no software useful to him. While wanting to use a higher-level language, he wrote the original Unix system in PDP-7 assembler. At the start, he did not even program on the PDP-7 itself, but instead used a set of macros for the GEMAP assembler on a GE-635 machine. A postprocessor generated a paper tape readable by the PDP-7.

...

Challenged by [Doug] McIlroy's feat in reproducing TMG, Thompson decided that Unix—possibly it had not even been named yet—needed a system programming language. After a rapidly scuttled attempt at Fortran, he created instead a language of his own, which he called B. B can be thought of as C without types; more accurately, it is BCPL squeezed into 8K bytes of memory and filtered through Thompson's brain.

-

couldn't port Fortran to PDP-7

ETA: do you have a story about that? I'm curious. Fortran shouldn't be ported to anything, but the grammar is actually simple compared to today's languages.

Wikipedia says so

Thompson tried to port Fortran but couldn't, so instead he went for a subset of BCPL and called it B. He and Ritchie kept adding features until B became C. Granted, this was the early 70s, so there wasn't much of a distinction between high level and low level.

-

@_deathcollege said in WTF Bites:

plug it into something that's actually encrypted properly with ssl

That's what the service mesh … will one day be for. A service mesh, of course, will have its own PKI. See, it's PKIs all the way down. It always is.

-

@Gustav Bah, Zig is the new hotness.

-

@Gustav Bah, Zig is the new hotness.

That’s not even made by Google, so it can’t be as terrible as it sounds.

-

-

In the end, seriously, among the slew of "new" programming languages, is Rust worth learning? (and if not Rust, which one?)

I don't want to fall too behind technologically, since I'd rather not be fired and replaced by an upcoming youth in twenty-plus years when I'm five years away from retirement.

-

Thompson tried to port Fortran but couldn't,

Or at least couldn't get it to do what he wanted it to.

-

@Medinoc no, no, no. You don't learn new technology in order to keep up. You learn very old technology like COBOL that still powers most of the banking software and nobody wants to port it because it works, but there are fewer and fewer people who could actually maintain it.

-

-

couldn't port Fortran to PDP-7

ETA: do you have a story about that? I'm curious. Fortran shouldn't be ported to anything, but the grammar is actually simple compared to today's languages.

Wikipedia says so

Thompson tried to port Fortran but couldn't, so instead he went for a subset of BCPL and called it B. He and Ritchie kept adding features until B became C. Granted, this was the early 70s, so there wasn't much of a distinction between high level and low level.

But I think this is maybe a case of . Yeah, his first attempt was FORTRAN, probably because why reinvent the wheel if you don't have to. Though based on the story (as linked above):

Challenged by [Doug] McIlroy's feat in reproducing TMG, Thompson decided that Unix—possibly it had not even been named yet—needed a system programming language. After a rapidly scuttled attempt at Fortran, he created instead a language of his own, which he called B. B can be thought of as C without types; more accurately, it is BCPL squeezed into 8K bytes of memory and filtered through Thompson's brain.

It's not clear here why that attempt was scuttled, and certainly no indication that it couldn't be ported.

-

In the end, seriously, among the slew of "new" programming languages, is Rust worth learning?

Absolutely. For one, unlike Python, JS, Go or Kotlin, it was actually designed. That immediately gives it +1000 points to usability and user experience. Second, it's designed by people who know what they're doing, and it shows - it's really well thought out and writing code is quite pleasant. Third, even if you end up not using it, you'll still learn a lot of concepts that are useful in general (unless you've used Haskell before, in which case you already know them, but you don't strike me as a Haskell guy).

-

As in hot pile of garbage

I know, right? I bet it even compiles fast and doesn't generate gigabytes of temporary files, like The Best Language. :tropical_crab:

-

@Zecc I opened Wikipedia article on Zig and it says "duck typing". Now, can you tell me something about Zig that doesn't deep throat Satan's balls?

-

Absolutely. For one, unlike Python, JS, Go or Kotlin, it was actually designed. That immediately gives it +1000 points to usability and user experience. Second, it's designed by people who know what they're doing, and it shows - it's really well thought out and writing code is quite pleasant. Third, even if you end up not using it, you'll still learn a lot of concepts that are useful in general (unless you've used Haskell before, in which case you already know them, but you don't strike me as a Haskell guy).

Counterpoint: it has the serious side effect of making you obsessed with spreading Rust

-

@Zerosquare I almost wrote this sentence myself. Having a day job of coding in languages other than Rust really sucks once you know Rust, and I'm being completely serious here.

-

-

@Zerosquare it's actually a very similar reason to why I refuse to buy quality audio equipment. I'm content with my cheap crap USB speakers, and I'm afraid if I ever switch to anything high-end, I'll never be able to go back - and there go tens of thousands of dollars over my lifetime.

I take that back. @Medinoc you should ABSOLUTELY NEVER EVER EVER EVER pick up Rust. It will turn your life into absolute suffering. Not when you're using it, but when you're not.

-

@Zecc I opened Wikipedia article on Zig and it says "duck typing". Now, can you tell me something about Zig that doesn't deep throat Satan's balls?

That would require knowing Zig, which I don't.

I had no idea it had duck typing. I thought it was meant as a C replacement. Does the typing work like the weird way Go does it?

-

I had no idea it had duck typing. I thought it was meant as a C replacement.

C is the ultimate duck-typed language. You construct a void pointer from an arbitrary integer and cast it into a struct of your choosing, and it all Just Works™. All C programmers abuse the hell out of it everywhere they go. They even built unions into the core language syntax to make the process easier. Of course anything that aims to be C replacement will have duck typing.

Does the typing work like the weird way Go does it?

As far as I can tell, it's even worse.

-

@homoBalkanus I see your COBOL and raise you its strong-type listing & calculations twin, Visual Basic

-

I had no idea it had duck typing. I thought it was meant as a C replacement.

C is the ultimate duck-typed language. You construct a void pointer from an arbitrary integer and cast it into a struct of your choosing, and it all Just Works™. All C programmers abuse the hell out of it everywhere they go. They even built unions into the core language syntax to make the process easier. Of course anything that aims to be C replacement will have duck typing.

Does the typing work like the weird way Go does it?

As far as I can tell, it's even worse.

The union is an essential component of the tagged union, which is generally a struct containing an enumeration and a union.
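To make the pattern concrete, a minimal sketch (all type names are hypothetical) of the "struct containing an enumeration and a union" written out by hand in Rust, next to the built-in `enum`, which stores the tag for you and checks it on every `match`:

```rust
#[derive(Clone, Copy)]
enum Tag {
    Int,
    Float,
}

// Both fields share the same storage, like a C union.
#[derive(Clone, Copy)]
union Payload {
    i: i64,
    f: f64,
}

// The hand-rolled tagged union: a tag plus an untagged payload.
#[derive(Clone, Copy)]
struct Tagged {
    tag: Tag,
    payload: Payload,
}

impl Tagged {
    fn as_f64(&self) -> f64 {
        // Reading a union field is unsafe: only the tag says which field is live.
        match self.tag {
            Tag::Int => unsafe { self.payload.i as f64 },
            Tag::Float => unsafe { self.payload.f },
        }
    }
}

// The idiomatic equivalent: the compiler keeps tag and payload in sync.
enum Value {
    Int(i64),
    Float(f64),
}

impl Value {
    fn as_f64(&self) -> f64 {
        match *self {
            Value::Int(i) => i as f64,
            Value::Float(f) => f,
        }
    }
}

fn main() {
    let t = Tagged { tag: Tag::Int, payload: Payload { i: 7 } };
    let v = Value::Float(2.5);
    println!("{} {}", t.as_f64(), v.as_f64()); // 7 2.5
}
```

With the hand-rolled version nothing stops code from setting the tag to `Float` while storing an `i`; with the enum that mistake cannot be expressed.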

-

@PleegWat why waste precious bits on tag though? You are in func1, you know this argument must have the 2nd union variant if you're here. Or 4th, but this can't be the case because that global variable is false. Either way, tagging is for pussies.

- the thought process of programmers who wrote the C code I had to deal with, probably

-

@Gustav Thanks, I'll give Rust a look (though I still plan to keep C# as main since it's what I do for a living).

-

-

I wish CAs would have stated policies about what certs they'll sign. For example, I'm happy to trust US government CAs to make statements about US government entities, but wouldn't trust them so much about Singaporean entities. It's a bit of a fail in the policy space that that sort of thing is not bound into the CA self-assertions.

There is a `nameConstraints` property (the Name Constraints extension of X.509 v3 certificates) that can encode some such policy in the CA certificate. I even used it in some internal certificates. But none of the public ones use it. Plus, such a policy can only be tied to the domain name, so it can restrict an authority to only sign certificates for the `.gov` domain, but it can't restrict it to only sign certificates for Singaporean entities when those use the `.com` domain along with everybody else.
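A minimal sketch of why the constraint is purely name-based, assuming only the dNSName rule from RFC 5280 (a name satisfies a constraint if it can be formed by adding zero or more labels to the left of it). The helper names are hypothetical, and wildcards, excluded subtrees and IDNA handling are ignored:

```rust
// Hypothetical helper: does `name` fall under the dNSName constraint `constraint`?
fn dns_matches_constraint(name: &str, constraint: &str) -> bool {
    // DNS names compare case-insensitively.
    let name = name.to_ascii_lowercase();
    let constraint = constraint.to_ascii_lowercase();
    // Exact match, or `name` ends with ".<constraint>" so only whole labels are added.
    name == constraint || name.ends_with(&format!(".{constraint}"))
}

// A CA's permitted subtrees: a name is acceptable if it matches any of them.
fn permitted(name: &str, permitted_subtrees: &[&str]) -> bool {
    permitted_subtrees.iter().any(|c| dns_matches_constraint(name, c))
}

fn main() {
    let gov_only = ["gov"];
    println!("{}", permitted("agency.gov", &gov_only)); // true
    println!("{}", permitted("notgov", &gov_only)); // false: not a whole label
    // Nothing in the name says which country the owner of a .com domain is in.
    println!("{}", permitted("example.com", &gov_only)); // false
}
```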

-

@Zecc I opened Wikipedia article on Zig and it says "duck typing".

You already wrote a 21 point treatise on Zig, with a bunch of negative and a few positive (async) points, so you shouldn't have to google it. Already forgot?

Here's your

and welcome to the CRS club.

-

@topspin I genuinely don't remember any of that.

-

@topspin: are you sure it wasn't written by another, completely unrelated goose?

-

For one, unlike Python, JS, Go or Kotlin, it was actually designed.

No. Each of those languages was initially designed, and each of them then evolved and had to work around earlier decisions, which is already happening to Rust as well, mainly around async, e.g. in the interaction of `Box` and `Pin` (due to which `Box<Future<…>>` does not implement `Future`) or that `Task` could use a slightly differently defined `Send` (so that `Task` would be like `Thread`), but that would break the uses of `!Send` that come from using thread-local variables.
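A minimal std-only sketch of the `Box`/`Pin` wrinkle, assuming `Box<Future<…>>` refers to a boxed trait object. The standard library only implements `Future` for `Box<F>` when `F: Unpin`, and `dyn Future` is not `Unpin`, so boxed futures are normally pinned as `Pin<Box<dyn Future>>`. The `noop_raw_waker` and `poll_once` helpers are hypothetical stand-ins for a real executor:

```rust
use std::future::Future;
use std::pin::Pin;
use std::task::{Context, Poll, RawWaker, RawWakerVTable, Waker};

// A waker whose operations do nothing; enough for futures that finish on the first poll.
fn noop_raw_waker() -> RawWaker {
    unsafe fn clone(_: *const ()) -> RawWaker {
        noop_raw_waker()
    }
    unsafe fn noop(_: *const ()) {}
    static VTABLE: RawWakerVTable = RawWakerVTable::new(clone, noop, noop, noop);
    RawWaker::new(std::ptr::null(), &VTABLE)
}

// Poll a pinned (possibly unsized) future exactly once.
fn poll_once<F: Future + ?Sized>(fut: Pin<&mut F>) -> Poll<F::Output> {
    // SAFETY: every vtable function ignores the data pointer, so null is fine.
    let waker = unsafe { Waker::from_raw(noop_raw_waker()) };
    let mut cx = Context::from_waker(&waker);
    fut.poll(&mut cx)
}

// `Box<dyn Future>` alone would not be pollable; pinning the box is what works.
fn boxed_answer() -> Pin<Box<dyn Future<Output = i32>>> {
    Box::pin(async { 40 + 2 })
}

fn main() {
    let mut fut = boxed_answer();
    // `as_mut` turns `Pin<Box<dyn Future>>` into `Pin<&mut dyn Future>` for polling.
    println!("{:?}", poll_once(fut.as_mut())); // Ready(42)
}
```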

-

@topspin I genuinely don't remember any of that.

Neither did I, but @Parody linked to a relevant thread:

(I know, I've said it before.)

-

In the end, seriously, among the slew of "new" programming languages, is Rust worth learning?

Absolutely. For one, unlike Python, JS, Go or Kotlin, it was actually designed. That immediately gives it +1000 points to usability and user experience. Second, it's designed by people who know what they're doing, and it shows - it's really well thought out and writing code is quite pleasant. Third, even if you end up not using it, you'll still learn a lot of concepts that are useful in general (unless you've used Haskell before, in which case you already know them, but you don't strike me as a Haskell guy).

This really reads like a meme shitpost copypasta.

I'll just sit here with my vaporeon.

-

@Zecc I opened Wikipedia article on Zig and it says "duck typing".

That's … somewhat misleading. It is still statically typed (and the Wikipedia article does say that in the first line), so this “duck typing” is similar to how C++ templates or Go interfaces (but not Rust traits) work.
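A minimal sketch of the "but not Rust traits" part (all names are hypothetical): a Rust generic is checked against its trait bound, so a type that merely has a method with the right name and signature is still rejected unless it explicitly implements the trait.

```rust
trait Quack {
    fn quack(&self) -> String;
}

struct Duck;
struct Robot;

impl Quack for Duck {
    fn quack(&self) -> String {
        "Quack!".to_string()
    }
}

// Robot has a method with the same name and signature, but no `impl Quack for Robot`.
impl Robot {
    fn quack(&self) -> String {
        "beep".to_string()
    }
}

// Only types that implement `Quack` are accepted here.
fn make_noise<T: Quack>(t: &T) -> String {
    t.quack()
}

fn main() {
    println!("{}", make_noise(&Duck)); // Quack!
    // `make_noise(&Robot)` would not compile: Robot does not implement Quack,
    // although a C++ template calling `t.quack()` would accept it.
    println!("{}", Robot.quack()); // beep
}
```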

Now, can you tell me something about Zig that doesn't deep throat Satan's balls?

For what little I know, Zig brings two interesting new features:

- You write compile-time-evaluated code manipulating types and runtime-evaluated code manipulating values using the same syntax and basic structure, leading to extremely flexible, though rather unusual, generic code a bit like Lisp macros.

- Implicit “context” function arguments: the environment that is usually global, like allocators, filesystem access, current directory and such, is declared by the functions that need it, can be overridden for a scope, and is implicitly passed to functions called from that scope. That allows better control over resources, because you know that parts of the code that don't declare using the corresponding context won't be using it.

But the Rust borrow checker is probably still a more useful new feature than these two. I really hope the next new language will combine all three.

-

C is the ultimate duck-typed language. You construct a void pointer from an arbitrary integer and cast it into a struct of your choosing, and it all Just Works™.

That's not what “duck typing” means. This is weak typing. It allows you to treat a chunk of memory as a different type than before.

“Duck typing” rather means that you specify requirements on a type in generic code by properties of the type rather than its place in the type hierarchy. Thus talking about duck typing in C does not even make sense, because it does not have generic code.

C++ does use duck typing in statically generic code where if all expressions in the template type-check, the type is accepted, but not in dynamically generic code, which uses base classes and virtual methods. Go has duck typing in dynamically generic code, because anything that has methods with the right signatures can be cast to an interface type, and I suppose in statically generic code too now that it gained it.

And of course dynamically typed languages all allow duck typing, because everything is just `Any`, and unless you do an explicit type check, the code works as long as all member references resolve. For a suitable value of “works”, because it's a good way to get weird bugs.

Note that dynamically typed languages are strongly typed (you can't choose to treat a value as something other than what it was created as), as are most garbage-collected languages like Java or C#. C is weakly typed via unchecked pointer casts, C++ via `reinterpret_cast` and Rust via `mem::transmute`.
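A minimal sketch of the weak-typing escape hatch in Rust (helper names are hypothetical): `mem::transmute` reinterprets the same bits as another type and is `unsafe` because the compiler stops checking that the result makes sense, while `f32::to_bits` does the same reinterpretation for this one case safely.

```rust
use std::mem;

// Weak typing: view the 32 bits of an f32 as a u32.
fn bits_via_transmute(x: f32) -> u32 {
    // SAFETY: f32 and u32 have the same size, and every bit pattern is a valid u32.
    unsafe { mem::transmute::<f32, u32>(x) }
}

// The safe, purpose-built alternative for this particular conversion.
fn bits_via_to_bits(x: f32) -> u32 {
    x.to_bits()
}

fn main() {
    let raw = bits_via_transmute(1.0);
    assert_eq!(raw, bits_via_to_bits(1.0));
    println!("{raw:#010x}"); // 0x3f800000
}
```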

-

For one, unlike Python, JS, Go or Kotlin, it was actually designed.

No. Each of those languages was initially designed

JS was famously implemented in 3 weeks start to finish. How long was the design phase? Was there one at all?

Less famously, Python also acquired features as it went, without much rhyme and reason to them. There was no overarching language design until 3.0 (and even then it was arguable).

Go's entire (ENTIRE) design doc was "C but better", and they figured out what it actually means along the way. Most of the language-defining decisions were made years after 1.0 release. Same story with Kotlin, except it was Java instead of C.

Rust actually had the design team sketch out the goals for the language before they committed to 1.0 release. There were 13 pre-alpha releases, and 0.10 was a massively different language than 0.13. It lost a built-in GC, for example. The next 8 years of development combined changed less in the language than those 3 pre-alphas. Code written in 2015 is still more or less up to modern Rust standards.

and each of them then evolved and had to work around earlier decisions, which is already happening to Rust as well, mainly around async, e.g. in the interaction of `Box` and `Pin` (due to which `Box<Future<…>>` does not implement `Future`)

`Pin` has always been meant as a temporary hack until they can get the real solution figured out. They knew up front it's bad, and they were already thinking about how to get rid of it before they finished implementing it. They implemented it because it was deemed better to have somewhat shitty async/await than no async/await at all.

or that `Task` could use a slightly differently defined `Send` (so that `Task` would be like `Thread`), but that would break the uses of `!Send` that come from using thread-local variables.

That I know nothing about. Do you have some links? Sounds interesting.

-

Note that most dynamically typed languages are strongly typed—you can't choose to treat a value as something else than it was created as—...

There are other ways of doing type logic that are just as well founded but have very different consequences.

-

They implemented it because it was deemed better to have somewhat shitty async/await than no async/await at all.

The saddest thing about Rust is how little the core developers of it are aware of other programming languages. They know C++ and OCaml, but not Fortran or Lisp or various scripting languages; that's resulted in some really big errors (such as in the async code or the floating point handling) where they weren't just reacting to the situation in C++.

The authors of Go, for all that the language is nasty, have at least demonstrated that they're properly read about the literature on language design.

Raw talent isn't enough. Need some actual learning too.

-

or that `Task` could use a slightly differently defined `Send` (so that `Task` would be like `Thread`), but that would break the uses of `!Send` that come from using thread-local variables.

That I know nothing about. Do you have some links? Sounds interesting.

There was some discussion linked from last TWIR … lemme check … Non-Send Futures When? linked in TWIR 525.
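To make the thread-local part concrete, a minimal std-only sketch (the `COUNTER` and `bump` names are hypothetical): every OS thread gets its own copy of a `thread_local!` value, so code that relies on such state only behaves as intended if it stays on one thread, which is the kind of guarantee `!Send` is used to encode.

```rust
use std::cell::Cell;
use std::thread;

thread_local! {
    // Each OS thread gets its own independent copy of this counter.
    static COUNTER: Cell<u32> = Cell::new(0);
}

// Increment this thread's counter and return the new value.
fn bump() -> u32 {
    COUNTER.with(|c| {
        c.set(c.get() + 1);
        c.get()
    })
}

fn main() {
    bump();
    bump();
    let here = COUNTER.with(|c| c.get());
    // A freshly spawned thread starts from its own zeroed copy.
    let there = thread::spawn(|| COUNTER.with(|c| c.get())).join().unwrap();
    println!("main thread: {here}, new thread: {there}"); // main thread: 2, new thread: 0
}
```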

-

They implemented it because it was deemed better to have somewhat shitty async/await than no async/await at all.

The saddest thing about Rust is how little the core developers of it are aware of other programming languages. They know C++ and OCaml, but not Fortran or Lisp or various scripting languages; that's resulted in some really big errors (such as in the async code or the floating point handling) where they weren't just reacting to the situation in C++.

The authors of Go, for all that the language is nasty, have at least demonstrated that they're properly read about the literature on language design.

Raw talent isn't enough. Need some actual learning too.

I'm always impressed by your ability to sound wise while saying absolutely nothing of substance. Which errors are you talking about, and which parts of "Fortran or Lisp or various scripting languages" should they have copied to fix them? And I categorically disagree that the authors of Go had read any literature whatsoever, especially anything about language design. You could've picked any language as an example and I'd have had doubts, but you specifically chose the only one that's not just ignorant of the advances in computing theory, but actively hostile to them.

-

I'm always impressed by your ability to sound wise while saying absolutely nothing of substance.

-

WTF Bite: Arguing in a Bites thread again. Idiots.

-

JS was famously implemented in 3 weeks start to finish. How long was the design phase? Was there one at all?

I think it was … in Brendan Eich's head, basically.

Less famously, Python also acquired features as it went, without much rhyme and reason to them. There was no overarching language design until 3.0 (and even then it was arguable).

I think there is some rhyme and reason, because Python still has just one principal architect. On the other hand that means that principal architect might, and does, have a lot of the design principles in his head and not written out anywhere.

Go's entire (ENTIRE) design doc was "C but better", and they figured out what it actually means along the way. Most of the language-defining decisions were made years after 1.0 release.

The team that implemented the 1.0 release probably did have something more among themselves, but they were not doing it in public, so most of it isn't to be seen anywhere.

And I would totally agree the design isn't good. I had two major problems with it when I first saw it and then added a third one:

- Special support for static typing of two complex data structures, the associative array and the message queue, while restricting any other to dynamic typing feels horribly inconsistent to me. They finally added generics not long ago, but that does not remove the inconsistency completely, since the old special cases must remain in place for backward compati(de)bility.

- Likewise, the decision to make structs value types but slices (the arrays you actually pass around; fixed-size arrays are values) reference types feels horribly inconsistent.

- The signature `go` statement emphasizes all that's wrong with threads, to the point that we now have an article called “Go statement considered harmful”: you don't even get a handle to a ‘goroutine’ that you could join.
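For contrast with the `go` statement, a minimal std-only Rust sketch (the `parallel_sum` helper is hypothetical): every `spawn` hands back a handle you can `join` to get the result or the panic, and `std::thread::scope` only returns after every thread spawned inside it has finished, which is the structured-concurrency style that article argues for.

```rust
use std::thread;

// Hypothetical helper: sum `data` using roughly `chunks` scoped threads.
fn parallel_sum(data: &[u64], chunks: usize) -> u64 {
    let n = chunks.max(1);
    // Ceiling division, clamped to 1 because `chunks(0)` would panic.
    let chunk_len = ((data.len() + n - 1) / n).max(1);
    thread::scope(|s| {
        // Scoped threads may borrow `data` because they cannot outlive the scope.
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        // Joining each handle propagates its result (or its panic) to the caller.
        handles.into_iter().map(|h| h.join().unwrap()).sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=100).collect();
    println!("{}", parallel_sum(&data, 4)); // 5050
}
```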

Also, they totally failed to make “C but better”, because, being reliant on a garbage collector, it does not qualify for a lot of cases where C is (still) used today. It is only a valid replacement for Java, not for C.

Same story with Kotlin, except it was Java instead of C.

Again, the team at JetBrains probably did some design among themselves, but not publicly. And they were quite constrained by Java, because it isn't a replacement for Java, only for Java syntax.

Rust actually had the design team sketch out the goals for the language before they committed to 1.0 release. There were 13 pre-alpha releases, and 0.10 was a massively different language than 0.13. It lost a built-in GC, for example. The next 8 years of development combined changed less in the language than those 3 pre-alphas. Code written in 2015 is still more or less up to modern Rust standards.

Unlike most other languages, Rust was designed in public and with a fairly large contributor base. Being public is the big difference, and yes, it does make the design better.

-

rust is spreading by itself