In other news today...

-

@izzion said in In other news today...:

@ObjectMike said in In other news today...:

@DogsB Why was that lead comparing points across teams? They mean nothing outside your team.

Can't compare epeen without slapping down your team's story points on the table.

I guess you could compare velocity stability, velocity increase over the last TIME_UNIT, etc. But if you're using story points, even if you both start with 1 on the low end and X for a "break these up" value, you still can't compare the values. There will be subtle enough differences between, say, 2's and 3's, since a 3 is conceptually 1.5x bigger than a 2, but in reality it's not strict math. Though strangely enough, teams do seem to converge on what the numbers actually mean.

-

@ObjectMike said in In other news today...:

Yeah, I've never heard of CI including merging.

Well, it should usually involve merging from master so you don't get big conflicts when the feature is finalized. But merging to master, yeah, I'd seriously question the sanity of that.

-

@Bulb said in In other news today...:

@ObjectMike said in In other news today...:

Yeah, I've never heard of CI including merging.

Well, it should usually involve merging from master so you don't get big conflicts when the feature is finalized. But merging to master, yeah, I'd seriously question the sanity of that.

It's probably something dating from when people used VCSes that made branching and merging difficult.

-

@ObjectMike said in In other news today...:

@DogsB Why was that lead comparing points across teams? They mean nothing outside your team.

The points were fed into charts which were used in planning by business. The points were made up by individual teams but the work would be shifted around different teams depending on capacity. Capacity was about 35 points per team. It was scrum at its dumbest.

-

@DogsB said in In other news today...:

. It was scrum at its dumbest.

That seems like a low bar to clear.

-

@Arantor said in In other news today...:

@DogsB said in In other news today...:

. It was scrum at its dumbest.

That seems like a low bar to clear.

Some of Scrum isn’t a bad idea but the implementation is usually dodgy. Pointing is always stupid and some of the ceremonies are pointless because you don’t act on their output.

A hard and fast rule seems to be: if you’re two months in and spend more time talking about the process than actually using it, then it’s probably doomed to failure.

-

@BernieTheBernie said in In other news today...:

People keep telling me they're harmless but they keep doing this.

The infamous Beargler strikes again!

-

Can anyone who knows Linux stuff a bit better than I do verify this? On the one hand it feels appropriately "truthy," in line with what we saw with Heartbleed where Linus's Law says anyone could have and should have caught this crap but no one actually looked.

On the other hand, this is a bit of a grandiose claim that "The comment says it scales with CPU count and the comment is incorrect. I wonder whether kernel developers are aware of that mistake as they are rewriting the scheduler! Official comments in the code says it’s scaling with log2(1+cores) but it doesn’t. All the comments in the code are incorrect. Official documentation and man pages are incorrect. Every blog article, stack overflow answer and guide ever published about the scheduler is incorrect."

-

@Mason_Wheeler the article cites a bit of code where it gets the CPU count and dumps the output of min(CPUs, 8) into the variable it uses for the scaling calculation.

Seems to me that it could be legit but then why has no one in the big big supercomputer category noticed? Or are they using something else for this part of the code?

-

@Mason_Wheeler If it is true (it's the context of what that exact kernel parameter does that I've not got a good feeling for) then it must have only been affecting people with large desktop machines or servers with very large numbers of CPUs in direct use, but the real high-CPU-count uses have all been in VM servers, and those have definitely been working (because it would definitely have been spotted if those hadn't been hitting their utilization targets).

-

@dkf Yeah, that's what I was wondering about. This seems like something that would have been noticed!

-

@Arantor said in In other news today...:

why has no one in the big big supercomputer category noticed

They use really big clusters of computers running MPI. Individually, the computers aren't particularly high powered, but they're packed very tightly (heat dissipation is a key limiting factor) and have an excellent interconnect (the other big limiting factor).

-

@Mason_Wheeler said in In other news today...:

@dkf Yeah, that's what I was wondering about. This seems like something that would have been noticed!

Hypervisors aren't normal kernels at all, and I don't think there's many tenants that get more than 8 cores anyway.

-

@Mason_Wheeler said in In other news today...:

Can anyone who knows Linux stuff a bit better than I do verify this? On the one hand it feels appropriately "truthy," in line with what we saw with Heartbleed where Linus's Law says anyone could have and should have caught this crap but no one actually looked.

On the other hand, this is a bit of a grandiose claim that "The comment says it scales with CPU count and the comment is incorrect. I wonder whether kernel developers are aware of that mistake as they are rewriting the scheduler! Official comments in the code says it’s scaling with log2(1+cores) but it doesn’t. All the comments in the code are incorrect. Official documentation and man pages are incorrect. Every blog article, stack overflow answer and guide ever published about the scheduler is incorrect."

I think someone would have noticed, but it is just the kernel. Is userland affected? I can’t imagine there are many instances where the kernel would need to run full tilt on more than eight cores.

-

@Mason_Wheeler said in In other news today...:

Can anyone who knows Linux stuff a bit better than I do verify this? On the one hand it feels appropriately "truthy," in line with what we saw with Heartbleed where Linus's Law says anyone could have and should have caught this crap but no one actually looked.

On the other hand, this is a bit of a grandiose claim that "The comment says it scales with CPU count and the comment is incorrect. I wonder whether kernel developers are aware of that mistake as they are rewriting the scheduler! Official comments in the code says it’s scaling with log2(1+cores) but it doesn’t. All the comments in the code are incorrect. Official documentation and man pages are incorrect. Every blog article, stack overflow answer and guide ever published about the scheduler is incorrect."

I have personally seen linux servers use more than 16 seconds of user CPU time per wallclock second. These were physical servers which were not used to host VMs.

I've also known multithreaded computational loads to effectively scale far beyond 8 worker threads. These threads were not performing system calls at all.

I call bollocks.

-

@Mason_Wheeler said in In other news today...:

Can anyone who knows Linux stuff a bit better than I do verify this? On the one hand it feels appropriately "truthy," in line with what we saw with Heartbleed where Linus's Law says anyone could have and should have caught this crap but no one actually looked.

On the other hand, this is a bit of a grandiose claim that "The comment says it scales with CPU count and the comment is incorrect. I wonder whether kernel developers are aware of that mistake as they are rewriting the scheduler! Official comments in the code says it’s scaling with log2(1+cores) but it doesn’t. All the comments in the code are incorrect. Official documentation and man pages are incorrect. Every blog article, stack overflow answer and guide ever published about the scheduler is incorrect."

Giving him the benefit of the doubt, let's just assume he's correct in that this is still in there.

This doesn't mean what the headline makes one naively think it means. As the text states, this just affects the scaling of time slices with core count, i.e. instead of the original 5ms time slices, you get longer slices because presumably you don't need as short slices for interactivity with more cores. And from what he states, that should have scaled logarithmically but instead is capped at a max.

Now, on one hand, this doesn't mean anything at all like "linux uses only 8 cores max". He doesn't claim that, but it's what you might go away with by just reading the headline. That would not just be disastrous, but would've been caught the very first minute someone used a CPU with more cores. I regularly use machines with 20+ cores all saturated, and it definitely works.

So the only thing actually affected is the length of the time slices. Maybe there's a tiny bit of performance left on the table for not having longer slices? Who knows, you'd need to benchmark that for very specific high compute scenarios.

And then there's the thing in the headline about "accidentally hardcoded to a maximum of 8 cores": that one is obviously wrong. It's not "hardcoded to 8 cores", it uses the minimum of the actual number of cores and 8. Doing that is definitely something that is done intentionally. Maybe the rationale behind that even is still valid, maybe it's not. But it wasn't done accidentally.

I'll give this a 2/10 on the severity scale, but then I'm just an arm-chair expert.

-

@DogsB said in In other news today...:

Probably more money and less overtime in enterprise development.

"cloud engineers make $132,060-$185,380; DevOps engineers $140,740-$210,800; and software developers $130,200-$186,930. That's not chicken feed."

That has to be silly valley money, either that or companies are pissing away money on people that seem to be universally terrible and are costing them more than their in-house infrastructure.

The low end of the ranges certainly are. You won't start making that kind of money outside of there and maybe NYC. But the upper ranges sound plausible to me in other metro areas besides those two.

-

@boomzilla said in In other news today...:

You won't start making that kind of money outside of there and maybe NYC.

A few other global financial centers too, but only if you're working for the right sort of corp.

-

@topspin Thanks, that's exactly the sort of analysis I was looking for.

-

“Every once in a while, a revolutionary product comes along that changes everything,” said Steve Jobs, the Apple founder, as he walked on stage to launch the iPhone in 2007.

That was the app store. The iPhone would have flopped badly like every other touchscreen dohoggle at the time without it.

But a surge of interest in artificial intelligence (AI) – driven by the launch of chatbots such as OpenAI’s ChatGPT – has some in the tech sector believing that a post-smartphone age is on the brink of becoming reality.

This is not going to end well for me.

The gadget, which will cost $699 in addition to a monthly subscription of $24, clips onto your shirt or jacket and responds to voice queries, such as asking it to play music or make a phone call. Some have compared it to a communicator badge from Star Trek.

I have a €350 watch that does that and I won’t look like a complete twat or have to pay a subscription.

The company is marketing it as a “standalone” device, meaning you do not need to link it to your phone, freeing users from their screens.

I have questions... Is it a speaker? How do I connect my headphones? How do I get contacts in? Most communication is textual, so how do I read messages?

With no display, it is instead controlled by voice commands, gestures, or by a projector that beams a tiny set of controls onto the palm of your hand. A front-mounted camera can take pictures and videos, while tapping the “pin” activates its voice control functions.

I can smell toast. Is that bad?

Almost in homage to Apple, the reveal of the AI Pin was suitably Jobsian. In its launch video, husband and wife team Imran Chaudhri and Bethany Bongiorno, both former Apple employees, are clad in minimalist black reminiscent of the late Apple founder, while delivering grandiose statements such as: “It is our aim at Humane to build for the world not as it exists today, but as it could be tomorrow.”

I remember thinking Jobs was a twat but was he this much of a twat?

Still, Om Malik, a Silicon Valley based technology writer and investor, lauds the “audacity and magnitude of their idea”. “Humane is proposing another very different idea of personal computing for the post-smartphone era,” he writes.

Like Apple, the AI Pin’s development has been cloaked in secrecy and mystique. The New York Times reports Chaudhri and Bongiorno ran their ideas past a Buddhist Monk, called Brother Spirit, who introduced them to the billionaire Salesforce boss, Marc Benioff, an early investor.

While voice assistants such as Siri or Alexa have been around for years, the AI Pin seeks to build on advances in the technology to provide almost conversational interactions. Neat tricks will include features such as live translation for wearers. Humane also demonstrated more advanced functions – such as having the pin scan a handful of almonds, and returning an estimate of how much protein they contained.

Christ, has Theranos sold you their PR person?

Still, some in Silicon Valley have welcomed the arrival of Humane as a potential breakthrough that could herald an era beyond smartphones – offering a new kind of interface that relies on voice control and an advanced chatbot. Humane has raised £192m ($240m) for its idea, winning the backing of Sam Altman, the influential co-founder of OpenAI.

Oh God! Years of reading stupid shit has brought on a stroke. Aran3, tell Aran5 the details for my doge are in the cookie jar under the sink.

-

@DogsB said in In other news today...:

the details for my doge are in the cookie jar under the sink

You lie. I already looked there.

-

@dkf said in In other news today...:

@Bulb said in In other news today...:

@ObjectMike said in In other news today...:

Yeah, I've never heard of CI including merging.

Well, it should usually involve merging from master so you don't get big conflicts when the feature is finalized. But merging to master, yeah, I'd seriously question the sanity of that.

It's probably something dating from when people used VCSes that made branching and merging difficult.

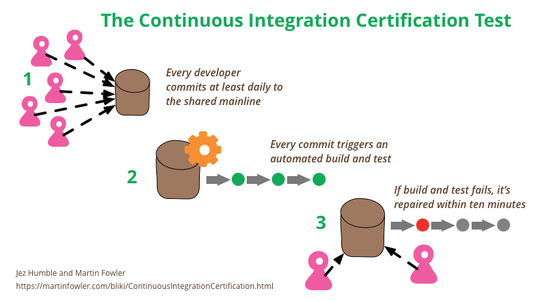

Here's the question in question:

and it has some references in it, which ultimately lead to

and the image clearly says

Every developer commits at least daily to the shared mainline

and that article has a date of 18 January 2017, at which time git, and workflow using feature branches that are reviewed, and possibly also tested, before integrating in the shared mainline, were already popular.

-

@dkf said in In other news today...:

Individually, the computers aren't particularly high powered

We have had a 72-core server around here for … well, around 10 years IIRC. That's still an off-the-shelf board. Yes, it currently runs Linux.

-

@DogsB said in In other news today...:

I remember thinking Jobs was a twat but was he this much of a twat?

-

@topspin said in In other news today...:

I'll give this a 2/10 on the severity scale

I wonder if you want to change the scheduling granularity that much more anyway. At some point, running tasks for longer (minimum?) time slices has diminishing returns. Not clear if it affects latency that much -not familiar enough with the scheduler- but if it does, you wouldn't want to increase that too much.

-

@cvi said in In other news today...:

@topspin said in In other news today...:

I'll give this a 2/10 on the severity scale

I wonder if you want to change the scheduling granularity that much more anyway. At some point, running tasks for longer (minimum?) time slices has diminishing returns. Not clear if it affects latency that much -not familiar enough with the scheduler- but if it does, you wouldn't want to increase that too much.

That's why I assume it's intended to be log-scale. But still, above a certain threshold, you don't gain anything anymore by longer time slices, while interactivity might start to suffer again even if you have very many cores. Though 100 core machines aren't exactly used for interactive jobs.

-

@topspin said in In other news today...:

Giving him the benefit of the doubt, let's just assume he's correct in that this is still in there.

I damn sure hope it's still there (it is), because …

This doesn't mean what the headline makes one naively think it means. As the text states, this just affects the scaling of time slices with core count, i.e. instead of the original 5ms time slices, you get longer slices because presumably you don't need as short slices for interactivity with more cores. And from what he states, that should have scaled logarithmically but instead is capped at a max.

It can be set to scale logarithmically or linearly. It is also capped at a maximum. You can still tune it to whatever you want, if you want, anyway, because it is just some sysctl settings.

So the only thing actually affected is the length of the time slices. Maybe there's a tiny bit of performance left on the table for not having longer slices? Who knows, you'd need to benchmark that for very specific high compute scenarios.

Anybody who bothers doing a benchmark can tune it to whatever works well for their benchmark. But the default settings need to work for a wide range of workloads, and if the slice length was allowed to scale too high, they wouldn't.

I'll give this a 2/10 on the severity scale, but then I'm just an arm-chair expert.

I'll give this a 0/10 on the severity scale. It's definitely intentional.
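For anyone who wants to see what the cap does to the numbers, here is a minimal Python sketch of the scaling as described in this thread. It is not the kernel's code: the function names, the mode strings, and the `1 + floor(log2(cpus))` form of the log scaling are assumptions for illustration. The `min(CPUs, 8)` cap and the 5ms base slice are the figures quoted above.

```python
CPU_CAP = 8  # cap quoted in the thread: min(CPUs, 8)


def scaling_factor(online_cpus: int, mode: str = "log") -> int:
    """Return the multiplier applied to the base time slice.

    Assumed modes (hypothetical names): "none", "log", "linear".
    """
    if online_cpus < 1:
        raise ValueError("online_cpus must be at least 1")
    cpus = min(online_cpus, CPU_CAP)  # the cap applies before any scaling
    if mode == "none":
        return 1
    if mode == "linear":
        return cpus
    if mode == "log":
        # Integer log2 via bit_length; assumed form: 1 + floor(log2(cpus))
        return 1 + (cpus.bit_length() - 1)
    raise ValueError(f"unknown mode: {mode}")


def slice_ms(base_ms: float, online_cpus: int, mode: str = "log") -> float:
    """Scale a base time slice (in milliseconds) by the factor above."""
    return base_ms * scaling_factor(online_cpus, mode)


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 128):
        print(n, slice_ms(5.0, n))
```

Under these assumptions the factor is 4 for 8 cores and stays 4 for 128, so the slice length simply stops growing. Nothing here limits how many cores actually get used.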

-

@Bulb said in In other news today...:

and the image clearly says

Every developer commits at least daily to the shared mainline

and that article has a date of 18 January 2017, at which time git, and workflow using feature branches that are reviewed, and possibly also tested, before integrating in the shared mainline, were already popular.

To the timeline or the principal branch? (Is time a linear or branching thing in your underlying mental model of the world?) Which ones get testing? When you're running tests against all branches, it's not so important whether those branches get returned to the main branch.

What you want to avoid is people going a long time doing their own thing without visibility of whether they're in contact with what the rest of the team is up to. What that means is tricky in some cases; you can't do all changes on short-life branches that endure less than a day, as not all features can be done in micro-steps (unless you do lots of ~~#ifdef~~ feature flags, but that doesn't make things better! Quite the reverse).

-

@topspin said in In other news today...:

@DogsB said in In other news today...:

I remember thinking Jobs was a twat but was he this much of a twat?

And we do it. We do it through iTunes. Again, you go to iTunes and you set it up.

And you set up what you want, sync to your iPhone. Just like an iPod, charge and sync. So sync with iTunes.

It's missing a few billions and billions here and there, but now I'm imagining Trump doing that presentation. I'm sure it'd be hilarious.

-

@dkf said in In other news today...:

@Bulb said in In other news today...:

@ObjectMike said in In other news today...:

Yeah, I've never heard of CI including merging.

Well, it should usually involve merging from master so you don't get big conflicts when the feature is finalized. But merging to master, yeah, I'd seriously question the sanity of that.

It's probably something dating from when people used VCSes that made branching and merging difficult.

This morning?

-

@DogsB Jobs was absolutely that much of a twat, as you put it.

But the iPhone debuted without an App Store; they were adamant that web apps would be the way of the future. Then the developer backlash came.

(The introduction of the store was summer 2008, a year after the phone debut, and the initial store had maybe 500 apps total.)

-

@boomzilla said in In other news today...:

@DogsB said in In other news today...:

Probably more money and less overtime in enterprise development.

"cloud engineers make $132,060-$185,380; DevOps engineers $140,740-$210,800; and software developers $130,200-$186,930. That's not chicken feed."

That has to be silly valley money, either that or companies are pissing away money on people that seem to be universally terrible and are costing them more than their in-house infrastructure.

The low end of the ranges certainly are. You won't start making that kind of money outside of there and maybe NYC. But the upper ranges sound plausible to me in other metro areas besides those two.

Let's just say those numbers are low for the upper range of software developers in Silly Valley. How do I know? (looks at paystub)

-

@topspin said in In other news today...:

@topspin said in In other news today...:

@DogsB said in In other news today...:

I remember thinking Jobs was a twat but was he this much of a twat?

And we do it. We do it through iTunes. Again, you go to iTunes and you set it up.

And you set up what you want, sync to your iPhone. Just like an iPod, charge and sync. So sync with iTunes.

It's missing a few billions and billions here and there, but now I'm imagining Trump doing that presentation. I'm sure it'd be hilarious.

It's a beautiful phone. The best ever made. Everyone says so. Nobody had ever seen anything like it till I brought it to the table with my own two hands. Evil Google can't make anything like that.

-

@Applied-Mediocrity said in In other news today...:

@topspin said in In other news today...:

@topspin said in In other news today...:

@DogsB said in In other news today...:

I remember thinking Jobs was a twat but was he this much of a twat?

And we do it. We do it through iTunes. Again, you go to iTunes and you set it up.

And you set up what you want, sync to your iPhone. Just like an iPod, charge and sync. So sync with iTunes.

It's missing a few billions and billions here and there, but now I'm imagining Trump doing that presentation. I'm sure it'd be hilarious.

It's a beautiful phone. The best ever made. Everyone says so. Nobody had ever seen anything like it till I brought it to the table with my own two hands. Evil Google can't make anything like that.

The really funny part is that the v1 demo iPhone was hilariously unstable, and the order of things was carefully scripted so that the thing didn't run out of memory and reboot mid-demo.

-

@Arantor said in In other news today...:

the initial store had maybe 500 apps total

Still more than Microsoft's store...

-

@Tsaukpaetra I mean think about it: Apple's developer ecosystem kicked up a stink that they weren't getting the ability to sell through an app store.

When did MS's developer ecosystem ever do that?

-

@Arantor said in In other news today...:

the iPhone debuted without an App Store, they were adamant that web apps would be the way of the future.

And now we have web apps that demand you download a carbon copy of their web app running in a dedicated browser from the app store.

-

@kazitor at least the philosophy killed Flash off, right?

-

@Arantor You say that like it's a good thing...

-

@Mason_Wheeler It is. I had an ActionScript course in college. It was full-house horrible, from unreliable timestep to scaling issues to glorified Powerpoint piece-of-shit IDE that ran like a dog. Its literal only advantage at the time was that it could "ooh, pwetty gwaficks" when nothing else really could.

-

@Applied-Mediocrity let’s not talk about all the security issues the runtime had, such that Adobe gave up trying to fix them all and just EOL’d the thing.

-

@Mason_Wheeler said in In other news today...:

@Arantor You say that like it's a good thing...

Security issues, battery draining issues and good taste issues aside, it is. It forced devs to actually go back to the drawing board and not just wrap shit in a black box that was easy to use.

Accessibility improved overnight with Flash content going away. Though not as much as I’d have liked.

-

@Arantor said in In other news today...:

@Applied-Mediocrity let’s not talk about all the security issues the runtime had, such that Adobe gave up trying to fix them all and just EOL’d the thing.

At least that part would be solved with the JS runtime you mentioned. Well, now that it's dead anyway...

-

@Arantor It is my understanding that Adobe never really bothered to fix anything. They just plugged the holes as they came along, but never went even as far as "maybe we should grep for malloc and take a closer look at each of them".

-

@topspin yeah but the archival people want to resurrect old games made in Flash so they made a JS runtime to emulate Flash.

At least I thought Ruffle was JS, my bad, it’s actually Rust -> WebAssembly.

Though swf2js is as the name implies, JS.

-

@Arantor said in In other news today...:

@topspin yeah but the archival people want to resurrect old games made in Flash so they made a JS runtime to emulate Flash.

Does it actually work now? I remember trying it some years ago (maybe just when Flash got truly EOL'ed) and it completely failed to run the couple of games I tried.

I guess the other issue is that nowadays it's going to be more difficult to get my hands on those original Flash games (that was still fairly easy when Flash was just about dying but since then...).

-

@remi don’t know, haven’t tried.

-

@Arantor that garbage never worked properly in any non official implementation, I'm happy it's dead

at least a third party could port webkit

-

@loopback0 said in In other news today...:

@dkf said in In other news today...:

@DogsB That's one way for ~~America~~ Oracle to get the edge in the race.

It can't be a coincidence that the letters in the word orca are the first 4 letters in Oracle

Although if the orcas go rogue then Larry really will need a new yacht.

Speaking of...

(I like how they clipped the photo for maximum effect)