Deep Learning

-

@topspin said in Deep Learning:

We've also witnessed here installation with pip, easy_install, conda was mentioned...

I thought TMTOWTDI was frowned upon as the Perl way?!

- `pip` is what you should use, `easy_install` is very, very old and deprecated.
- Conda is part of a third-party distribution and therefore not an official tool.
- The difference between virtualenv and `venv` is explained here. Usually, the built-in package should suffice. Historically, this functionality was not part of Python itself, which is why the external tool exists.

So there technically is one true way to do things. I'm not trying to claim that Python's package management and build tools are perfect, though. They're horrible and used to be even more horrible in the past.
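For anyone who wants the "one true way" spelled out, here is a minimal sketch using only the built-in `venv` module plus `pip` inside the resulting environment. The helper names (`create_env`, `env_python`, `pip_install`) are made up for illustration; nothing here comes from the posts above beyond the choice of `venv` and `pip`.

```python
import subprocess
import sys
import venv
from pathlib import Path


def create_env(target, with_pip=True):
    """Create a virtual environment at `target` with the built-in venv module."""
    venv.EnvBuilder(with_pip=with_pip).create(target)
    return Path(target)


def env_python(env_dir):
    """Return the path of the interpreter that lives inside the environment."""
    # Windows puts executables in "Scripts", everything else uses "bin".
    bin_dir = "Scripts" if sys.platform == "win32" else "bin"
    exe = "python.exe" if sys.platform == "win32" else "python"
    return Path(env_dir) / bin_dir / exe


def pip_install(env_dir, package):
    """Install `package` with the environment's own pip (needs with_pip=True)."""
    subprocess.run(
        [str(env_python(env_dir)), "-m", "pip", "install", package],
        check=True,
    )
```

Usage would be something like `create_env("demo-env")` followed by `pip_install("demo-env", "some-package")`, where both names are placeholders.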

-

Let's put that Python-specific WTFery aside, and get back to "Deep Learning". On p. 114-6, the author shows a script for training a two-layer network for the XOR problem:

```python
import tensorflow as tf

training_data_xor = tf.placeholder(tf.float32, shape=[4, 2], name="Training_data")
y = tf.placeholder(tf.float32, shape=[4, 1], name="Expected_data")

weights_layer1 = tf.Variable(tf.random_uniform([2, 2], -1, 1), name="Weights_layer1")
weights_layer2 = tf.Variable(tf.random_uniform([2, 1], -1, 1), name="Weights_layer2")

bias_layer1 = tf.Variable(tf.zeros([2]), name="Bias1")
bias_layer2 = tf.Variable(tf.zeros([1]), name="Bias2")

with tf.name_scope("Layer1") as scope:
    hidden_result = tf.sigmoid(tf.matmul(training_data_xor, weights_layer1) + bias_layer1)

with tf.name_scope("Layer2") as scope:
    hidden_error = tf.sigmoid(tf.matmul(hidden_result, weights_layer2) + bias_layer2)

with tf.name_scope("Costs") as scope:
    cost = tf.reduce_mean(((y * tf.log(hidden_error)) + ((1 - y) * tf.log(1.0 - hidden_error))) * -1)

with tf.name_scope("Training") as scope:
    learning_rate = 0.01
    train_step = tf.train.GradientDescentOptimizer(learning_rate=learning_rate).minimize(cost)

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [[0], [1], [1], [0]]

init = tf.global_variables_initializer()
s = tf.Session()
writer = tf.summary.FileWriter("C:/Freigabe/Temp/my_graph/", s.graph)
s.run(init)

iterations = 150001
for i in range(iterations):
    s.run(train_step, feed_dict={training_data_xor: X, y: Y})
    if i % 10000 == 0:
        print('Iteration ', i)
        print('Error ', s.run(hidden_error, feed_dict={training_data_xor: X, y: Y}))
        print('Costs ', s.run(cost, feed_dict={training_data_xor: X, y: Y}))

s.close()
```

When I look at the output,

```
Iteration 150000
Error [[0.00765174]
 [0.99134374]
 [0.99145824]
 [0.01338554]]
Costs 0.009607375
```

my impression is that `hidden_error` is the actual result of the calculation, not the error between the calculation result and the expected result.

Because the values come close to `Y`, which contains our expected result.

There seems to be a hidden error somewhere, in the book or in my thoughts?
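For what it's worth, a minimal pure-Python sketch of the same 2-2-1 forward pass points the same way. The weights are hand-picked for illustration (an assumption, not the trained values from the book's script), and the names `forward`, `cross_entropy`, `predictions` and `errors` are mine. It shows the second layer's sigmoid output acting as the prediction, while the cross-entropy cost and the per-sample distance to `Y` are what actually measure the error.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked weights (assumption, for illustration only):
# hidden unit 0 behaves like OR, hidden unit 1 like AND.
W1 = [[20.0, 20.0],   # from input 0 to hidden units 0 and 1
      [20.0, 20.0]]   # from input 1 to hidden units 0 and 1
B1 = [-10.0, -30.0]
W2 = [20.0, -20.0]    # output is roughly "OR and not AND", i.e. XOR
B2 = -10.0

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 1, 1, 0]


def forward(x):
    """Second-layer sigmoid output for one sample: the network's prediction."""
    hidden = [sigmoid(x[0] * W1[0][j] + x[1] * W1[1][j] + B1[j]) for j in range(2)]
    return sigmoid(hidden[0] * W2[0] + hidden[1] * W2[1] + B2)


def cross_entropy(predictions, targets):
    """Mean binary cross-entropy, the same formula as the script's cost."""
    total = 0.0
    for p, t in zip(predictions, targets):
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(targets)


predictions = [forward(x) for x in X]
cost = cross_entropy(predictions, Y)
errors = [abs(p - t) for p, t in zip(predictions, Y)]

print("Predictions", predictions)
print("Errors     ", errors)
print("Cost       ", cost)
```

Under these assumptions the predictions land right next to `Y` and the errors are close to zero, so a name like `prediction` would describe the second-layer output better than `hidden_error` does.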

-

-

@levicki said in Deep Learning:

Did you think about using `BMI` extensions in assembler and doing vector processing with SIMD?

No. Categorically, no.

The client software (that this is part of) needs to run on all sorts of computers and we aren't going to do complex stuff there. Just not enough effort available for that, and numpy isn't that terrible. Just opaque and single-threaded. It's the code on the other end of the comms link that gets the sort of treatment you're thinking of, and that's an ARM variant.

-

That's a cute error message, isn't it:

```
curses is not supported on this machine (please install/reinstall curses for an optimal experience)
```

So, "curses is not supported on this machine". And because it is not supported, I am told to "please install/reinstall curses for an optimal experience". But it is not supported, is it?

Uhm, well, `pip install windows-curses` did the trick.

What a curse!
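A guarded import is one way a program could report this less confusingly. This is only a sketch: `load_curses` and `curses_status` are hypothetical helper names and the hint text is mine. It relies on `import curses` raising `ImportError` when the underlying `_curses` extension module is missing, which is the usual situation on a stock Windows Python.

```python
import sys


def load_curses():
    """Return the curses module, or None if it cannot be imported."""
    try:
        import curses
    except ImportError:
        return None
    return curses


def curses_status():
    """Describe whether curses is usable, with an install hint on Windows."""
    if load_curses() is not None:
        return "curses is installed"
    if sys.platform == "win32":
        return "curses is not installed (try: pip install windows-curses)"
    return "curses is not installed"


print(curses_status())
```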

-

@levicki said in Deep Learning:

"is not installed" or "is not detected"

So you think it's an i18n issue? They had to translate from English to English, and failed.

Oh wait, the message was written by some non-English programmer. Kevin?!

-

-

@pie_flavor said in Deep Learning:

@dkf said in Deep Learning:

@cvi said in Deep Learning:

Almost none of the deep learning things do.

There's a suspicion that it's not really all that important in the first place, since the learning algorithms themselves converge strongly enough to start out with. (There's a similar argument with spiking NNs, but there at least the argument “it looks like what has been measured in biology” can be used; the suspicion is that there's a wide class of non-linear models that will work, especially so if you allow both adaptation of weights and synaptogenesis.)

Why not use deep learning to determine the most applicable deep learning algorithm?

-

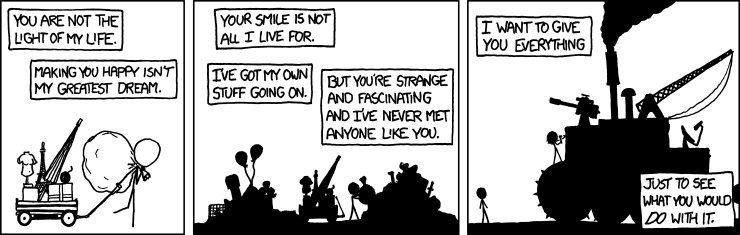

@levicki except for when someone says there's xkcd for everything.

-

@Gąska said in Deep Learning:

@levicki except for when someone says there's xkcd for everything.

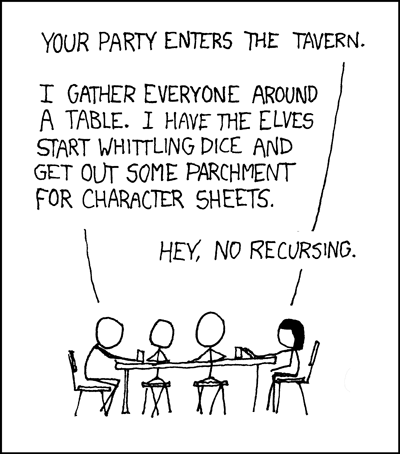

No recursing!

-

@Gąska said in Deep Learning:

@levicki except for when someone says there's xkcd for everything.

Just wait... (I'm convinced he hangs out here...)

Edit: oh...