I, ChatGPT

-

@cvi Well, it's wccftech, which has the journalistic integrity of Trump on breitbart, or however did that old thing go

-

@Gribnit said in I, ChatGPT:

@jinpa said in I, ChatGPT:

@Rhywden That doesn't bode well. Bing Chat decided, absent of proof, that you lied to it?

Well what would you do if you were surrounded by lying, paranoid psychopaths.

Things that remind you of TDWTFers is

-

@Gribnit said in I, ChatGPT:

@jinpa said in I, ChatGPT:

@Rhywden That doesn't bode well. Bing Chat decided, absent of proof, that you lied to it?

Well what would you do if you were surrounded by lying, paranoid psychopaths.

I suppose I would get married? I think?

-

@boomzilla said in I, ChatGPT:

It also muddies the water for commercial AI companies working to develop their own language models; if so much of the time and expense involved is incurred in the post-training phase, and this work can be more or less stolen in the time it takes to answer 50 or 100,000 questions, does it make sense for companies to keep spending this cash?

On the one hand it's revealing (once again) how perverted the whole incentive structure is when "is it useful?" doesn't even come up as a guiding question, only "can it turn my money into more money?". On the other hand, if Skynet is preempted because capitalism, good for them! Truly dialectical.

-

@cvi said in I, ChatGPT:

@Applied-Mediocrity Funny, but as far as 'meltdown' goes, I'm thinking that this one doesn't quite qualify.

"Cotard's syndrome" vs. "China syndrome". Easy to confuse.

-

It's kind of interesting that all this hype started building just as the venture capital started getting bored with AI.

-

@Carnage said in I, ChatGPT:

It's kind of interesting that all this hype started building just as the venture capital started getting bored with AI.

VC will be in shorter supply: interest rates are up, so more money is made overall from investing in older companies than startups.

-

The perspective of an history professor about AI for writing essays:

It's almost pointedly missing the point of "students will always be students" (i.e. saying "cheating is bad for you" has never dissuaded any student), but it's making some good points overall.

I particularly liked the experiment showing how bad it is at getting actual facts (and preferably facts that are true (!)). The professor generated an essay on what would be for him a very basic thing and then graded the results.

(ETA: replaced the onebox of the essay by a link because the onebox doesn't show the comments, which are what makes the whole thing interesting)

-

@remi I did like this part, and it resonates with my opinion on the whole thing:

But I think I will stipulate that much of the boosterism for ChatGPT amounts to what Dan Olsen (commenting on cryptocurrency) describes as, “technofetishistic egotism,” a condition in which tech creators fall into the trap where, “They don’t understand anything about the ecosystems they’re trying to disrupt…and assume that because they understand one very complicated thing, [difficult programming challenges]…that all other complicated things must be lesser in complexity and naturally lower in the hierarchy of reality, nails easily driven by the hammer that they have created.”

-

@Carnage there is a lot of that in the tech start-up world, generally speaking.

The analogy with alchemy is also interesting IMO, on a more philosophical level.

There is indeed this idea in many proponents of AI that by throwing more and more power/complexity at mimicking the external aspects of "intelligence" we will end up duplicating it, but this may be just as doomed as trying to turn lead into gold by trying to alter its chemical properties. Which doesn't mean it's entirely useless: as TFA says, alchemy was instrumental in building the basis of chemistry, but it never attained (and never could attain) its stated goals.

-

@remi said in I, ChatGPT:

@Carnage there is a lot of that in the tech start-up world, generally speaking.

The analogy with alchemy is also interesting IMO, on a more philosophical level.

There is indeed this idea in many proponents of AI that by throwing more and more power/complexity at mimicking the external aspects of "intelligence" we will end up duplicating it, but this may be just as doomed as trying to turn lead into gold by trying to alter its chemical properties. Which doesn't mean it's entirely useless: as TFA says, alchemy was instrumental in building the basis of chemistry, but it never attained (and never could attain) its stated goals.

Yeah, I've said for a rather long time that the current AI approach will not create GAI, for a lot of reasons. It's possibly a solution to some problem, but for now it's still mostly looking for the problem it can solve while making money. The field also tends to give things fanciful names, mostly for marketing reasons, which makes it even less likely to pass the smell test.

-

@Carnage that comment (on alchemy) got me thinking on whether trying to mimic the external aspects of something ever got us a working solution to that problem.

It's easy to list many examples where this failed. Alchemy, of course (chemistry never managed to turn lead into gold, or many other transformations that were looked for). Flying is another one, flapping wings didn't work (though non-flapping wings did work in the end, but they were not the first thing to work (hot air balloons)). Medicine also got on all the wrong tracks by e.g. trying to cure a problem by its opposite (hot by cold, though sometimes that was more "hot by hot" but it didn't work either). More recently, industrial robots that work don't reproduce the external/physical aspects of a human (they're nowhere near anthropomorphic) and robots that do... are generally useless.

(it sometimes worked to create solutions to other problems, but not the stated one)

Many other technologies worked but can't really be said to have been trying to copy the external properties of some natural phenomenon. Something like the wheel doesn't really have a natural origin, except in the widest sense of a boulder rolling down a slope. But there isn't anything like a natural platform moving on rolling things (i.e. what could have inspired a cart). Same for sailing, there isn't really a clear natural source to copy for using the wind (fish or other animals that swim do so mostly by some variation of paddling). So I can't see that these technologies were invented by trying to copy nature.

Pottery works by cooking clay but that's not really something widespread in nature (and if one wants to go to "creating hard rocks," pressure is likely a better factor to copy rather than temperature, but in any case early humans couldn't really observe that). Though this may be an extension of sun-dried pottery, so maybe this is a case where saying "oh this thing left in the sun is hot and has hardened, maybe it would also harden if I exposed it to heat in another way (fire)" worked?

I can't really come up with an example of a technology where this idea of copying the external characteristics of something else ever worked. But then again, this may be because those examples are so ingrained in our lives that I don't notice them, so... maybe that's not a totally doomed idea?

-

@remi said in I, ChatGPT:

chemistry never managed to turn lead into gold

But particle physics is now able to explain exquisitely why that's a dumb thing to try to do (it's energetically unfavourable; leave that stuff to supernovae).

-

@remi said in I, ChatGPT:

More recently, industrial robots that work don't reproduce the external/physical aspects of a human (they're nowhere near anthropomorphic)

Even more recently, the same applies to household robots, like vacuums and lawnmowers.

-

@remi said in I, ChatGPT:

The perspective of an history professor about AI for writing essays:

I see why you've been drawn to the linked site.

-

@dkf said in I, ChatGPT:

@remi said in I, ChatGPT:

chemistry never managed to turn lead into gold

But particle physics is now able to explain exquisitely why that's a dumb thing to try to do (it's energetically unfavourable; leave that stuff to supernovae).

Dumb, but possible. I vaguely recall a physics experiment in college in which we exposed lead (?) to neutrons in a small reactor and produced (a few — very few — atoms of) gold. Or something like that; it's been a long time, but we definitely transmuted something, and gold was involved.

-

@HardwareGeek IIRC, I watched something on TV a long time ago that said that you could transmute lead to gold by putting a thin sheet of lead on the front of a CRT and then leaving that running in a freezer for a year.

-

@dkf said in I, ChatGPT:

putting a thin sheet of lead on the front of a CRT

That would produce X-rays; you need neutrons for transmutation.

-

@Zecc said in I, ChatGPT:

I see why you've been drawn to the linked site.

Yeah, how could I not read a site like that?

@HardwareGeek said in I, ChatGPT:

Bookmarked!!!

I approve. The guy is even more verbose than me, sometimes veers into [...] territory, and spends far too many articles talking about how the academic world works (or, mostly, how it does not work). (inb4: he'd be a perfect fit here)

But that aside, his historical articles are very interesting, and his use of pop-culture as a gateway to serious historical stuff is very entertaining.

-

@remi Eh, whatever. How could I, first Viscount Pedantic Dickweed of the Most Noble Order of the Garter, not bookmark a site about unmitigated pe[n]dantry?

-

The blog seemed familiar, so I searched deep inside my ~~feelings~~ bookmarks and found this:

I have no idea why I bookmarked it, but I guess I meant to read it Some Time Later™

-

@HardwareGeek said in I, ChatGPT:

@dkf said in I, ChatGPT:

@remi said in I, ChatGPT:

chemistry never managed to turn lead into gold

But particle physics is now able to explain exquisitely why that's a dumb thing to try to do (it's energetically unfavourable; leave that stuff to supernovae).

Dumb, but possible. I vaguely recall a physics experiment in college in which we exposed lead (?) to neutrons in a small reactor and produced (a few — very few — atoms of) gold. Or something like that; it's been a long time, but we definitely transmuted something, and gold was involved.

Yes, but it's beside the point. The point is that it wasn't by mimicking external aspects but only once we managed to create a fairly comprehensive model of the underlying structure. Whereas “Artificial ~~Intelligence~~ Idiocy” is all about just throwing a lot of data at a matrix multiplier while explicitly not really caring why and how it works.

-

@Carnage said in I, ChatGPT:

@remi I did like this part, and it resonates with my opinion on the whole thing:

But I think I will stipulate that much of the boosterism for ChatGPT amounts to what Dan Olsen (commenting on cryptocurrency) describes as, “technofetishistic egotism,” a condition in which tech creators fall into the trap where, “They don’t understand anything about the ecosystems they’re trying to disrupt…and assume that because they understand one very complicated thing, [difficult programming challenges]…that all other complicated things must be lesser in complexity and naturally lower in the hierarchy of reality, nails easily driven by the hammer that they have created.”

...

-

Also, the glass CRTs are made of filters X-rays anyways.

-

@HardwareGeek said in I, ChatGPT:

@dkf said in I, ChatGPT:

putting a thin sheet of lead on the front of a CRT

That would produce X-rays; you need neutrons for transmutation.

That would produce a mix of X-rays and accelerated electrons. The rate of electron absorption would be slow (electron + proton = neutron), but apparently non-zero. In any case it is another one for the pile labelled "you can, but don't bother".

-

@remi there’s certainly a bunch of examples where technology was inspired by copying nature. Some things that come to mind are aerodynamic cars etc. (things we drive in Europe, not the square bricks the Murricans drive), penguins apparently have a very nice shape, or lotus effect coating.

Here’s the first result google spits out, though I haven’t more than scrolled through it:

-

@remi said in I, ChatGPT:

an history professor

Did he stay at an hotel where an hen and an heifer made an home in an herb garden?

-

Stop mocking @remi before he decides to do an horrible thing.

-

@Zerosquare said in I, ChatGPT:

Also, the glass CRTs are made of filters X-rays anyways.

You could grind it down to 50µm or so in the center and take the deflector coils out. Not that it got you any closer to making gold but just because.

-

@dkf said in I, ChatGPT:

@HardwareGeek said in I, ChatGPT:

@dkf said in I, ChatGPT:

putting a thin sheet of lead on the front of a CRT

That would produce X-rays; you need neutrons for transmutation.

That would produce a mix of X-rays and accelerated electrons. The rate of electron absorption would be slow (electron + proton = neutron), but apparently non-zero. In any case it is another one for the pile labelled "you can, but don't bother".

There are no electrons emitted from a CRT. Beta radiation penetrates glass about 2mm/MeV and CRT electrons have only about 30keV depending on the size of the tube. But even if they did get out: electron capture sometimes happens in nuclear decay, but I don't think it's possible to induce it by shooting electrons at atoms, otherwise you'd find all kinds of transmutations on the inside of CRT tubes or at least of betatrons.

-

@kazitor said in I, ChatGPT:

@remi said in I, ChatGPT:

an history professor

Did he stay at an hotel where an hen and an heifer made an home in an herb garden?

He’s French, so “an” is correct.

-

@kazitor the first ~~few~~ less times I made this mistake and was corrected, I thought it was a good point. But the ~~more~~ much I think about it, the ~~more~~ many I think I will add it to my list of English-[...]ing, along with horribly messing up comparatives/quantifiers.

I think that would be a appropriate thing to do, or an habit worth taking.

-

@topspin said in I, ChatGPT:

there’s certainly a bunch of examples where technology was inspired by copying nature. Some things that come to mind are aerodynamic cars etc. (things we drive in Europe, not the square bricks the Murricans drive), penguins apparently have a very nice shape, or lotus effect coating.

True, but that's not really examples of mimicking external aspects. It's more seeing interesting effects in nature, breaking them down to what makes them interesting and reproducing that root cause, not the outermost symptoms.

Granted, the distinction is not obvious (the first one, velcro, is just copying an observable geometric shape so it's an "external aspect" even though it's one that can only be seen with a microscope). But on the extremes of that scale, it's pretty obvious that e.g. flying by trying to copy a bird, which is what people tried for a long time, did not work. It may have paved the road for other discoveries, and now we might be able to duplicate it, but the first human flying machines (either balloons or airplanes) were nowhere near flapping wings, and nature doesn't really have a clear example of a propeller (which ended up being the solution to non-stationary flight).

Also all these examples are fairly recent, i.e. at a time where even if we copy external characteristics, we have means of investigating whether it actually is the part of the phenomenon that matters (as the gecko or kingfisher examples show).

Then again, an article about "10 thousand-years-old technologies inspired by nature" would be much less interesting, so this may be a pure reporting/perception bias.

Finally, I'll note that for "simple" things it's obvious that copying nature works. A roof is just leaves in a forest. A jug of water is just a hole in a rock. So maybe this idea of copying nature was sound, and the counter-examples we're looking at are just that, counter-examples (but not a rule).

-

@topspin said in I, ChatGPT:

@remi there’s certainly a bunch of examples where technology was inspired by copying nature. Some things that come to mind are aerodynamic cars etc. (things we drive in Europe, not the square bricks the Murricans drive), penguins apparently have a very nice shape, or lotus effect coating.

Here’s the first result google spits out, though I haven’t more than scrolled through it:

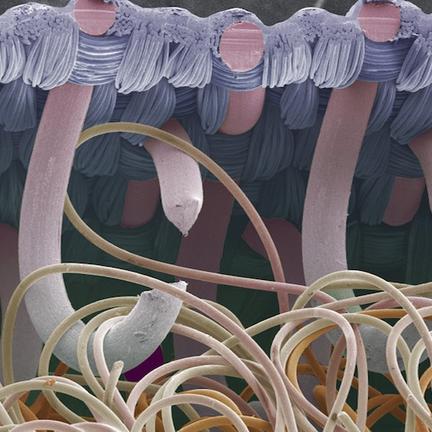

Is that picture meant to illustrate velcro? That's the example that popped into my head.

Edit: I knew it.

-

@HardwareGeek @dkf would it be possible to convert gpt-4 model weights to physical logic gates and make something like a gpt-4 chip?

-

@sockpuppet7 said in I, ChatGPT:

would it be possible to convert gpt-4 model weights to physical logic gates and make something like a gpt-4 chip?

I imagine something like that could be done, but it's probably better to convert it into something with binary weights first. Or to keep the weights in memory so they can be updated so the system can in theory learn new things.
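For the curious, here is a toy sketch of what "binary weights" could mean in practice. It assumes a simple sign-plus-shared-scale scheme, which is just one common way to binarize and not a claim about how any GPT model would actually be converted; the function names `binarize` and `binary_dot` are made up for illustration.

```python
# Toy sketch: binarize a weight vector to {-1, +1} with one shared scale.
# Assumption: sign-plus-scale binarization; names here are hypothetical.

def binarize(weights):
    """Return (scale, signs), where scale is the mean absolute weight."""
    scale = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return scale, signs


def binary_dot(scale, signs, inputs):
    """Dot product using only adds/subtracts, then a single multiply."""
    acc = 0.0
    for s, x in zip(signs, inputs):
        acc += x if s > 0 else -x
    return scale * acc


weights = [0.5, -0.25, 0.75, -1.0]
inputs = [1.0, 2.0, 3.0, 4.0]

scale, signs = binarize(weights)
exact = sum(w * x for w, x in zip(weights, inputs))   # full-precision result
approx = binary_dot(scale, signs, inputs)             # binarized result

print(f"exact={exact}, approx={approx}")
```

The appeal for hardware is that each weight becomes "add or subtract", which maps onto gates far more cheaply than a multiplier; the price is the approximation error you can see between the two results.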

-

@dkf I'm thinking it would make a lot of interesting stuff possible if these kinds of things get cheap and energy efficient enough

-

@sockpuppet7 said in I, ChatGPT:

@HardwareGeek @dkf would it be possible to convert gpt-4 model weights to physical logic gates and make something like a gpt-4 chip?

I remember reading something about an AI-generated chip design which worked fine for the task it had been trained to do, but which no one could understand; and which included some circuitry which was technically disconnected, but which when removed made the chip stop working properly because of some analog effect that was going on.

-

@Zecc yeah, it was depending on weird properties / defects of the physical chip, plus stuff like the ambient temperature.

-

@LaoC said in I, ChatGPT:

CRT electrons have only about 30keV depending on the size of the tube.

IIRC (which I may not), TV CRTs are limited to less than ~25keV, because above that you get too many X-rays.

-

@Zecc said in I, ChatGPT:

@sockpuppet7 said in I, ChatGPT:

@HardwareGeek @dkf would it be possible to convert gpt-4 model weights to physical logic gates and make something like a gpt-4 chip?

I remember reading something about an AI-generated chip design which worked fine for the task it had been trained to do, but which no one could understand; and which included some circuitry which was technically disconnected, but which when removed made the chip stop working properly because of some analog effect that was going on.

Yeah, that's happened plenty of times in genetic algorithm stuff. To the point that there is a best practice to rotate the hardware around so that it doesn't develop optimizations due to hardware quirks.

-

@Rhywden said in I, ChatGPT:

@jinpa said in I, ChatGPT:

@Rhywden That doesn't bode well. Bing Chat decided, absent of proof, that you lied to it?

That was the 2nd try. When I first tried it, Bing told me that the diamond would always stay in the cup regardless of the orientation of the cup.

You never specified you're on Earth. Bing just assumed you're writing from the ISS

-

@Zerosquare said in I, ChatGPT:

While evolvable hardware for design or repair purposes may have been academically exciting companies have an aversion to selling things they don't understand and cannot test rigorously

Yeah, we have software for that.

-

@Zecc said in I, ChatGPT:

I remember reading something about an AI-generated chip design which worked fine for the task it had been trained to do, but which no one could understand; and which included some circuitry which was technically disconnected, but which when removed made the chip stop working properly because of some analog effect that was going on.

That reminds me of the story I read about a group trying to study chips in the way we study (human) brains, i.e. by looking at which areas were active when performing certain operations.

The take-away I took away (great writing here!) was that on one hand, they were able to find some correlations that did make sense (since they knew the actual design of the chip they could check their findings), such as "this area lights up when doing additions <-> this area contains the gates that do addition" which in a sense does validate the approach (we can learn something of the micro-properties by studying the, uh, slightly less micro ones). But OTOH, they were extremely limited in what they could learn this way, showing how far we are from understanding brains by looking at e.g. MRI pictures.

I also remember that the study was very limited in many ways, so I'm not sure there really was much to learn from it, or whether that was more than a one-off "fun research idea."

-

@remi said in I, ChatGPT:

But OTOH, they were extremely limited in what they could learn this way, showing how far we are from understanding brains by looking at e.g. MRI pictures.

Well yes, you'd need to probably see electric potentials within individual cells to understand much more, and that's A Little Bit Tricky™. That's why that mesoscale stuff is usually studied in simulation: we simply can't see that fine in situ and yet it's a much larger scale than what we can poke at in a petri dish.

-

@sockpuppet7 said in I, ChatGPT:

@HardwareGeek @dkf would it be possible to convert gpt-4 model weights to physical logic gates and make something like a gpt-4 chip?

Application-specific chips for calculating neural networks do exist. One interesting approach is basically a flash memory where the cells are charged proportionally to the weights and then you just apply voltage according to the input and read the output from the orthogonal lines. Very fast, not very accurate.
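A rough way to picture that flash-array trick is as a matrix-vector product done by physics. The sketch below is a toy simulation under loudly stated assumptions: weights stored as cell conductances, inputs applied as row voltages, each column line summing currents (I = G * V), and programming error modelled as Gaussian noise. None of the names or numbers come from a real chip.

```python
import random

# Toy simulation of an analog crossbar computing a matrix-vector product.
# Assumptions (hypothetical, not any real device): weights are cell
# conductances, inputs are row voltages, columns sum currents, and cell
# programming is imprecise (Gaussian noise on each stored value).

def program_cells(weights, noise_std, rng):
    """Store each weight as a conductance with some programming error."""
    return [[w + rng.gauss(0.0, noise_std) for w in row] for row in weights]


def read_columns(conductances, voltages):
    """Sum the currents flowing into each column (orthogonal) line."""
    n_cols = len(conductances[0])
    return [
        sum(conductances[r][c] * voltages[r] for r in range(len(voltages)))
        for c in range(n_cols)
    ]


weights = [[0.2, 0.8], [0.5, 0.1], [0.9, 0.4]]  # 3 input rows, 2 output columns
voltages = [1.0, 0.5, 0.25]

rng = random.Random(0)  # fixed seed so the run is repeatable
ideal = read_columns(weights, voltages)
noisy = read_columns(program_cells(weights, 0.02, rng), voltages)

print("ideal:", ideal)
print("noisy:", noisy)
```

All the multiply-accumulates happen at once in the array, which is the "very fast" part; the gap between `ideal` and `noisy` is the "not very accurate" part.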

-

@remi said in I, ChatGPT:

That reminds me of the story I read about a group trying to study chips in the way we study (human) brains, i.e. by looking at which areas were active when performing certain operations.

On a similar topic, there's also this:

https://www.cell.com/fulltext/S1535-6108(02)00133-2

EDIT: fixed link

Collections: On ChatGPT
