Science and Replication

-

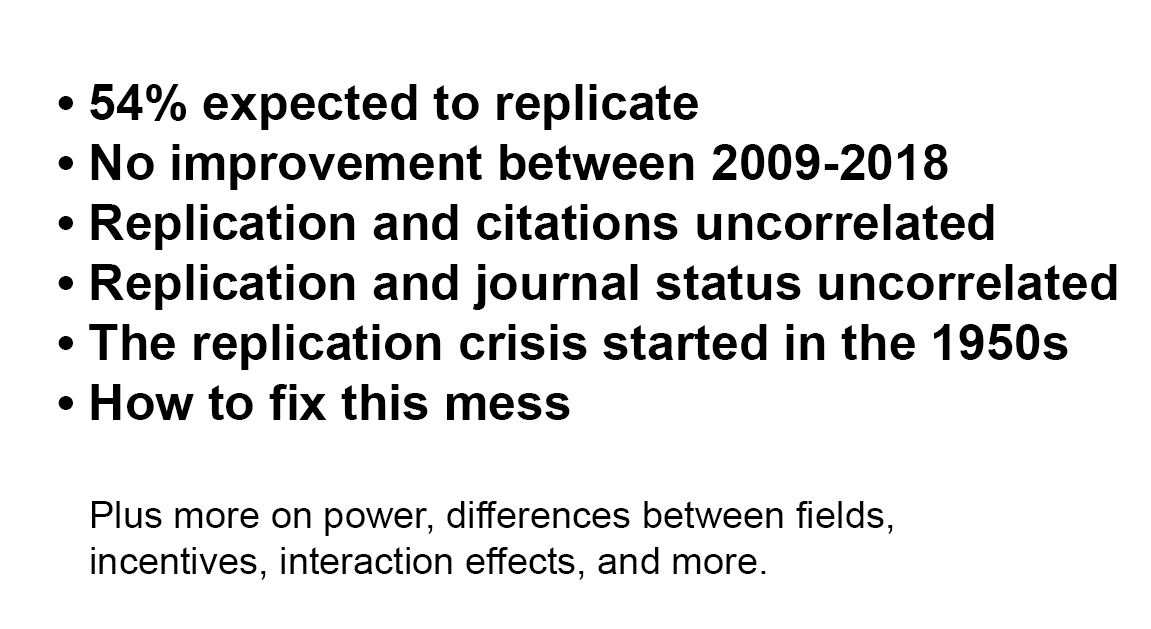

Here is an absolutely savage critique of the state of social science research. Not political in any way, and not disparaging the ends. Just brutally bashing the quality of papers (not even the content) and giving suggestions for doing better.

-

@Benjamin-Hall

This is the inevitable result of applying Taylorist management principles to academia. Once you treat scientists as workers in a factory producing scientific literature, and institute KPIs like number of cited papers per year, you will indeed get a lot of papers and citations, but this will not advance the state of human knowledge in any way. It's akin to paying programmers maybe not per line of code, but per number of libraries or downloads from github. I'm afraid this problem also infects STEM proper at this point, especially medicine and related disciplines. The Chinese essentially gamed the system with brute copy-paste. As for social science, it never was properly scientific from the start, it was more like a modern replacement for clergy.

-

@sebastian-galczynski said in Science and Replication:

I'm afraid this problem also infects STEM proper at this point, especially medicine and related disciplines.

Oh absolutely. But at least there's some pretense there of applying to objective reality. But you get lots of crap papers--papers chopped up into MPUs to inflate publication counts, lots of publication bias, very few attempts to replicate other work, and crimes against statistics up the wazoo.

Medicine also has to fight the problem that people are horrifically complex systems about which we know a fair amount at the lowest levels (the molecular and maybe even the cellular) and at the highest levels (gross physiology and anatomy) and almost nothing in between. And all the interesting parts happen at the in-between level. And people also have strong mind/body feedback loops--knowing that they're being studied changes their behavior, which alters how their physiology responds. Plus, people are not like protons, indistinguishable particles. And you can't do some of the tests you'd like to be able to, due to those pesky ethical principles people have.

-

@Benjamin-Hall said in Science and Replication:

And you can't do some of the tests you'd like to be able to, due to those pesky ethical principles people have.

Well, there's an obvious solution to that problem. You could probably find some tips in the writings of Dr. Mengele.

-


@Benjamin-Hall said in Science and Replication:

almost nothing in between

There's lots known in between (physiology has been around a long time and has made major progress) but applying it to the clinical practice is insanely difficult. Or rather not while keeping the patient alive at the same time.

-

Questionable Euphemism Practices

I like that term.

[…] most social scientists go through 4 years of undergrad and 4-6 years of PhD studies without ever encountering ideas like "identification strategy", "model misspecification", "omitted variable", "reverse causality", or "third-cause".

I would be inclined to believe this. People go study social sciences because they don't get maths. With modern research relying heavily on statistics, and statistics being a fairly complex bit of maths, that predictably can't end well.

Fields like nutrition and epidemiology are in an even worse state, [but let's not get into that right now].

Sounds rather relevant for current year, unfortunately.

-

@sebastian-galczynski said in Science and Replication:

This is the inevitable result of applying Taylorist management principles to academia. Once you treat scientists as workers in a factory producing scientific literature, and institute KPIs like number of cited papers per year, you will indeed get a lot of papers and citations, but this will not advance the state of human knowledge in any way.

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

There was even a period where citation counts and impact factors were the only criteria around here. They've realized they overdid it already, but still.

-

Article in OP said:

A pile of "facts" does not a progressive scientific field make.

#trolleybusoutofcontext

-

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

The trick is to weight the publication venues (journals, conferences, etc.) by impact factor. That stops a lot of the nonsense. It has a lot of downsides though (e.g., it tends to overreward a few people) but any metric has problems because using any metric as a reward control results in the metric becoming ruined.

-

@dkf said in Science and Replication:

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

The trick is to weight the publication venues (journals, conferences, etc.) by impact factor. That stops a lot of the nonsense. It has a lot of downsides though (e.g., it tends to overreward a few people) but any metric has problems because using any metric as a reward control results in the metric becoming ruined.

Yeah, maybe, but if you RTFA, he talks about people continuing to cite retracted papers, let alone citing garbage that they don't seem to bother to read.

-

@boomzilla said in Science and Replication:

@dkf said in Science and Replication:

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

The trick is to weight the publication venues (journals, conferences, etc.) by impact factor. That stops a lot of the nonsense. It has a lot of downsides though (e.g., it tends to overreward a few people) but any metric has problems because using any metric as a reward control results in the metric becoming ruined.

Yeah, maybe, but if you RTFA, he talks about people continuing to cite retracted papers

Like vaccines causing autism.

let alone citing garbage that they don't seem to bother to read.

Like ... ah, I'm repeating myself.

-

I don't know what's the background of the author, but I feel a bit saddened that he seems to believe it's a problem specific to social sciences (toby fair, he doesn't say much about "hard" sciences, but there still seems to be that implication).

-

@boomzilla said in Science and Replication:

Yeah, maybe, but if you RTFA, he talks about people continuing to cite retracted papers, let alone citing garbage that they don't seem to bother to read.

That's just bad scholarship, and needs the tar and feathers.

-

@topspin said in Science and Replication:

@boomzilla said in Science and Replication:

@dkf said in Science and Replication:

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

The trick is to weight the publication venues (journals, conferences, etc.) by impact factor. That stops a lot of the nonsense. It has a lot of downsides though (e.g., it tends to overreward a few people) but any metric has problems because using any metric as a reward control results in the metric becoming ruined.

Yeah, maybe, but if you RTFA, he talks about people continuing to cite retracted papers

Like vaccines causing autism.

He also complains that it took something like 12 years (IIRC) to get that junk retracted.

-

@dkf said in Science and Replication:

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

The trick is to weight the publication venues (journals, conferences, etc.) by impact factor. That stops a lot of the nonsense. It has a lot of downsides though (e.g., it tends to overreward a few people) but any metric has problems because using any metric as a reward control results in the metric becoming ruined.

TFA says:

Naïvely you might expect that the top-ranking journals would be full of studies that are highly likely to replicate, and the low-ranking journals would be full of p<0.1 studies based on five undergraduates. Not so! Like citations, journal status and quality are not very well correlated: there is no association between statistical power and impact factor, and journals with higher impact factor have more papers with erroneous p-values.

So, no, that ‘trick’ does nothing.
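For anyone unsure what "statistical power" means in that quote, here is a minimal sketch, not anything taken from TFA. It uses a normal approximation to a two-sided, two-sample test; the function name `approx_power`, the effect size of 0.5 standard deviations, and the sample sizes are all assumptions picked for illustration.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    effect_size is the true difference in means divided by the common
    standard deviation. This is a normal approximation, so it is
    optimistic for very small samples.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # How far the true effect shifts the test statistic, in standard errors.
    shift = effect_size * sqrt(n_per_group / 2)
    # Chance that the statistic lands beyond either critical value.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Hypothetical sample sizes, from "five undergraduates" upwards.
for n in (5, 20, 64):
    print(f"n per group = {n:3d}: power ~ {approx_power(0.5, n):.2f}")
```

Under these assumptions a five-per-group study detects a real medium-sized effect well under 20% of the time, while it takes about 64 per group to get near the conventional 80%.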

@dkf said in Science and Replication:

That's just bad scholarship, and needs the tar and feathers.

Yes, but at this point everybody is doing that, and scientometry has its share of the blame in this – if you cite many people, you increase the chance they'll cite you in return and that improves everyone's rating; actually reading the work is absolutely not necessary for this purpose.

Unless citing retracted and poor quality papers starts counting against researcher's reputation, nothing's gonna change.

-

@remi said in Science and Replication:

I don't know what's the background of the author, but I feel a bit saddened that he seems to believe it's a problem specific to social sciences (toby fair, he doesn't say much about "hard" sciences, but there still seems to be that implication).

He only says economics fares better, though still far from perfect. He probably never looked at any branches of physics, chemistry or biology.

Also, he focuses on problems of using shoddy statistics and conducting experiments with insufficient power specifically, and that probably is less prevalent in domains that involve more maths and therefore researchers who understand it (which includes the economics example). There are still many dishonest practices for fudging the results, and he even does have a section titled Just Because a Paper Replicates Doesn't Mean it's Good.

-

@Bulb said in Science and Replication:

Also, he focuses on problems of using shoddy statistics and conducting experiments with insufficient power specifically, and that probably is less prevalent in domains that involve more maths and therefore researchers who understand it

Oh you sweet summer child...

I mean, yes, sure, it's probably "less" prevalent, in the same way as getting hit by a car hurts "less" than being hit by a truck. True, you have a higher chance of surviving the former than the latter but that doesn't really make the former "good" in any way.

And the "people cite papers without reading them" bit, well...

-

@remi said in Science and Replication:

I mean, yes, sure, it's probably "less" prevalent, in the same way as getting hit by a car hurts "less" than being hit by a truck.

That's not too far from what I meant too. Plus it can just mean they fudge it less conspicuously, because with understanding of statistics also comes understanding of how to lie with it.

It also depends on what counts as ‘hard’ sciences. In the ‘really hard’ sciences one generally does not go hunting for random correlations, because they do have solid theories to start from. But once you get to chaotic systems, like meteorology and climatology, not to mention anything with biology, and statistics starts taking the place of the main research tool, it gets pretty ‘soft’ and ‘squishy’ fast.

-

@remi I think he was just focused on the social ones because that's what he was reading for the project. The hard sciences were out of scope.

-

@Bulb said in Science and Replication:

It also depends on what counts as ‘hard’ sciences. In the ‘really hard’ sciences one generally does not go hunting for random correlations, because they do have solid theories to start from

Yes, and to expand, lack of theory was one theme of TFA.

-

@remi said in Science and Replication:

he seems to believe it's a problem specific to social sciences (toby fair, he doesn't say much about "hard" sciences, but there still seems to be that implication)

Also, do you count epidemiology as a “hard” science? Because that (and nutrition) are explicitly mentioned as being in an even worse state (but off topic for the article).

-

@Bulb said in Science and Replication:

@remi said in Science and Replication:

he seems to believe it's a problem specific to social sciences (toby fair, he doesn't say much about "hard" sciences, but there still seems to be that implication)

Also, do you count epidemiology as a “hard” science? Because that (and nutrition) are explicitly mentioned as being in an even worse state (but off topic for the article).

That's a good question. In theory, it's just a branch of medicine, and I think I agree with that. The problem is that it's almost all observational and the objectives in typical studies aren't geared towards that. So you get stuff like, "30% higher risk," which from an observational study is garbage (for reference, smokers are something like 2,500% more likely to get lung cancer than non-smokers).
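To put rough numbers on why a "30% higher risk" from an observational study is so weak, here is a back-of-the-envelope sketch. Everything in it is an assumption made up for illustration: the exposure is taken to do nothing at all, and a single hidden confounder (hypothetically present in 40% of the exposed group and 20% of the unexposed group) triples the baseline risk.

```python
def apparent_relative_risk(p_conf_exposed, p_conf_unexposed, conf_multiplier):
    """Relative risk observed between exposed and unexposed groups when the
    exposure itself has no effect and only a hidden confounder changes risk.

    Risks are expressed in units of the baseline risk, which cancels out.
    """
    risk_exposed = p_conf_exposed * conf_multiplier + (1 - p_conf_exposed)
    risk_unexposed = p_conf_unexposed * conf_multiplier + (1 - p_conf_unexposed)
    return risk_exposed / risk_unexposed


# Hypothetical modest confounder: somewhat more common among the exposed.
modest = apparent_relative_risk(0.4, 0.2, 3.0)
print(f"modest confounder: {(modest - 1) * 100:.0f}% higher apparent risk")

# To fake "2,500% more likely" (a relative risk of 26) with no real effect,
# the confounder would have to be in every exposed person, in no unexposed
# person, and multiply the risk by 26 all on its own.
extreme = apparent_relative_risk(1.0, 0.0, 26.0)
print(f"extreme confounder: relative risk {extreme:.0f}")
```

With these made-up inputs, a fairly ordinary imbalance produces an apparent increase of just under 30% out of nothing, whereas explaining away the smoking figure needs a confounder that is itself as strong as the smoking effect.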

-

@boomzilla Some parts of medicine are now well founded in molecular biology and should probably count as hard science, but many parts are still just large collections of observations, and that's the same wild-hunt-for-correlations-in-heaps-of-random-observations stage as social sciences.

-

@Bulb yeah. And it's not that it's not useful to find those things, but they are (usually) just telling you which questions to ask, not answering anything significant themselves. But also mostly they're just spurious correlations.
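A quick sketch of why hunting through heaps of observations mostly turns up spurious hits. It assumes the tests are independent and that there is no real effect anywhere, which is a simplification; the function name and the numbers of tests are made up for illustration.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Probability that at least one of num_tests independent tests comes out
    'significant' at level alpha when every null hypothesis is true."""
    return 1 - (1 - alpha) ** num_tests


# Hypothetical numbers of correlations checked in one data set.
for k in (1, 20, 100):
    print(f"{k:3d} tests: {chance_of_false_positive(k):.0%} chance of a spurious hit")
```

Under those assumptions, checking 20 unrelated correlations already gives better-than-even odds of at least one "finding", and at 100 it is close to certain.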

-

@Bulb said in Science and Replication:

Some parts of medicine are now well founded in molecular biology

The fundamental problem with medicine is that there's so many interacting parts and it is insanely difficult to run controls without spoiling the results and/or ending up in court. A lot is actually known, but there are so many layers of complexity in between; linking molecular biology to physiology is just about possible, but you'll never ever understand a patient with just molecular biology as your tool. The basic chemistry inside cells matters, but so too does their spatial arrangement, the forces applied to them, the history of events that have occurred, etc.

It's like saying we know how a transistor works so we therefore know exactly how the whole internet works.

-

@dkf I know all that. The point is that there are now pretty good models on the lower levels, but, because of that complexity, the higher levels don't have much in the way of them. So every time they find out how something works on the cell level, they immediately start thinking how to affect it to cure this or that condition and it sometimes works, but it's still a lot of trial and error and surprising outcomes. Well, better than trying everything on everything, at least they start from knowing it's somehow related.

-

@Bulb said in Science and Replication:

the higher levels don't have much in the way of them

That's wrong. There's plenty of higher level models too. It's just that we've not yet managed to connect bottom-up models with top-down models. We're close to succeeding at that with simpler organs such as bones and muscles.

-

@dkf said in Science and Replication:

It's like saying we know how a transistor works so we therefore know exactly how the whole internet works.

I remember reading a study (probably posted somewhere on TDWTF) a couple of years ago where they used (a model of?) a chip to perform some basic operations, and recorded (modelled?) at the same time which parts of the chip were activated, to see if looking at the latter could actually yield some insight into the former, kind of like we try to understand the brain by looking at MRI scans and such.

IIRC, it wasn't a great success, and yet they were working with a trivially simple system (compared to a human brain).

-

@Bulb said in Science and Replication:

Unfortunately that's exactly what all the grant agencies basing their decisions on scientometry are doing.

This is a deeper problem. Because these agencies are organs of the state, they must (in most civilized countries) follow the rule of law. They can't just appoint a smart guy to decide to fund good stuff, and defund bad stuff, instead they must follow some objective, publicly accessible rules. Now obviously there's nothing wrong with rule of law when applied to criminal justice and the like, but when we deal with inherently risky and uncertain activity it quickly becomes more of a problem than a solution. You can't just make a rule saying that only research resulting in important breakthroughs gets funded, because the whole point of doing research is that we don't know the results. So instead you use some artificial metric, which will quickly be gamed by bad actors. I don't really see a solution to this.

The same problem by the way results in these ridiculous EU grants for "innovations", where some shady guys get a million for literal "facebook but for cat owners", because their application checked the correct boxes. To some extent the various public procurement laws (requiring a bid for everything) work in the same direction, resulting in more expensive and worse results than any fool could buy by just googling what's on the market, even adjusting for a 10% bribe. Because now the buyer can't just stand up and say "come on, that's ridiculous, f*** off, I'll just hire X for half the price", because mr X didn't participate in the bid, and price-fixing schemes are extremely difficult to prove.

-

@sebastian-galczynski I think it's a more general problem even than that.

"Data-driven" management (aka "following the science") really is metric-driven management. And the metrics themselves may or may not be driven by the data (often not). And they're certainly not direct measurements of quality--they're measurements of things that are related to quality.

So we really need to apply scientific techniques to the metrics themselves to determine if they're good metrics. But that requires metrics, and ERR_STACK_OVERFLOW.

In any scientific endeavor, actually gathering the data isn't the tricky part most of the time. Labor-intensive, annoying, difficult. But figuring out what data to gather and what (if anything) it means afterward are the parts that require significant mental effort and strong domain knowledge.

But management metrics are mostly set by what's easiest to measure. And measuring things with ill-defined domains...

Yeah. It's a cluster all the way down. And not a solvable one in the generic case. At most you could pull it out of the public funding realm and merely set problems and prizes for solving the problem. Because you can measure quality better for solutions than you can for research itself. But that would upset lots of apple carts and threaten a lot of iron rice bowls. So it's a non-starter.

-

@sebastian-galczynski It is indeed a deeper problem, though I don't agree it is about rule of law. Law, in and of itself, is free to just say this committee allocates that amount of money as it sees fit.

The real problem is that as the organizations get more complex – and this applies to private corporations just as it does to the state, it's just worse for the state because its incentives are worse aligned – they start creating rules that prevent the individual officers from abusing their powers, because finding and punishing such abuses gets harder. But the downsides are that the growing set of rules makes it less and less efficient and that it creates metrics that skew the incentives away from the actual goals.

Fortunately they did manage to fix at least the worst bugs of the previous system here and it does now give some power to the committee and some trust to the people who showed they can have good results in building their teams and directing the future work.

@sebastian-galczynski said in Science and Replication:

The same problem by the way results in these ridiculous EU grants for "innovations", where some shady guys get a million for literal "facebook but for cat owners", because their application checked the correct boxes.

Yeah, I've seen the other side too, because our company does have some dealing with the academy. It's an insane amount of paperwork to get all the boxes checked and because it is often a top-down effort driven by some politician deciding “we should improve area X” instead of people trying to actually do X asking for something specific to help them, it ends up with vague goals, vague success criteria and useless results.

But this kind of ‘innovation’ is a bit different from basic research. Applications like that “facebook but for cat owners” (if it was “facebook, but for cats”, it might have worked) are product development and that would better be funded by venture capital (the travel agency for soft toys worked out great: there was a TV show some years back where people presented their more or less crazy projects and some potential investors chose whether they'll back it and IIRC this was one of the successful entries). But EU is a bureaucratic institution and bureaucrats won't give up their control, so they'll rather give out inefficient grants than actually support efficient private investments.

-

@remi said in Science and Replication:

IIRC, it wasn't a great success, and yet they were working with a trivially simple system (compared to a human brain).

At the scale of a single neuron, there's some pretty good models. Stuff gets a bit tricky with some neuron types (because nature gets crazy complicated with how dendritic trees interconnect with themselves and other things) but we can mostly model a cell pretty well. There's not many people looking at going above that scale into small brain regions except at colossal slowdowns with respect to reality; typical neural communication patterns don't work well with the usual extended Von Neumann or Harvard architectures used in most computers.

The number of platforms able to run such neural simulations in real time is very small. Maybe just one or two. (Intel's Loihi would be able to do it, but only with unrealistically simple neural models; the hardware acceleration they've done isn't quite suitable.) There are two classes of neuron that are tricky, and one aspect of synapses:

- Purkinje Cells in the cerebellum have somewhere close to a quarter of a million incoming connections each. They do complex time-dependent correlation detection, and are strongly implicated in how instinctive control of motor neurons works.

- Pyramidal Cells in the cortex appear to be doing non-trivial computation in their dendritic tree. Working out what's going on here is hard, and is likely to involve a great deal more complex models. They're believed to be important for how higher thought actually works… but nobody really knows. They're also ferociously complex.

- Many synapses are not constant, but rather instead change over the lifespan of the cells that they connect. This is pretty likely to be the core mechanism behind learning (along with moderation of the effective activity levels of synapses) and it's extremely unlike anything in standard computing hardware.

None of these things sits well with standard computing, and all are very very parallel and non-linear. If you like things linear, GTFO of neuroscience as nothing in it is linear. Most of the rest of biology is just as bad. Even more fun? Lots of scientists have spent their time looking for linear relationships in it… and so have missed a lot of obvious non-linear things along the way because they didn't want things to be complicated.

-

@Bulb said in Science and Replication:

Law, in and off itself, is free to just say this committee allocates that amount of money as it sees fit.

Maybe this would fly in some Anglo-Saxon countries, but here in Poland this would be struck down as unconstitutional*, specifically in violation of article 7. Not to mention some EU directives.

This might be (not necessarily) because we rely on the German "rechtsstaat" doctrine, as opposed to the precedent-based English common law system. It has some advantages though, because it removes 99% of accidental complexity resulting from the "regulation through litigation" framework. European countries have like 10x less lawyers as a result.

*At least until 2015, when the Law and Justice party packed the constitutional court with their buddies, now nothing is certain.

-

@sebastian-galczynski Unconstitutional? Does your constitution really go into such detail as defining what the budget rules for a budget organization can and cannot look like? Because if not, defining the budget rules as ‘committee of 12, nominated by the prime minister with parliament's approval (or whatever), scores the proposals as it sees fit’ would be perfectly fine budget rules. Of course the parliament won't want to delegate powers like that so they will create convoluted rules for how the projects shall be scored (and leave some non-obvious loopholes to be able to affect the scores to their or their party's liking).

@sebastian-galczynski said in Science and Replication:

Anglo-Saxon countries

No, Czech jurisprudence is based on the German one just like Polish.

-

@Bulb said in Science and Replication:

But this kind of ‘innovation’ is a bit different from basic research. Applications like that “facebook but for cat owners” (if it was “facebook, but for cats”, it might have worked) are product development and that would better be funded by venture capital

They have one thing in common though: big risk. VCs also fund many bad ideas, usually only a tiny minority of startups succeeds, but those that do can offset the losses. With research funded by the government this model doesn't work, because the govt can't reap all the profits from a discovery (if any), and the losses are too visible to be tolerated by taxpayers. It also is very short-term in its thinking, much like a publicly traded corporation.

@Bulb said in Science and Replication:

But EU is a bureaucratic institution and bureaucrats won't give up their control, so they'll rather give out inefficient grants than actually support efficient private investments.

I don't think it has to do with bureaucrats hoarding power, pretty much the other way round. All EU grants are awarded on the level of national governments anyway, only the bulk sums and some rules are decided by the EU. I've seen the same failure mode on much lower levels, and the problem is mostly that the committees are so overregulated that they can't use their own judgement and exercise power. For example, they may even be explicitly forbidden to use any outside sources when evaluating applications, to the point that they will request attaching a signed quote for... city bus tickets*, so that they know that a bus ticket indeed costs $1, which they know anyway since they ride those buses every day. But they can't even rely on that knowledge, much less on their own judgement.

*this shit is 100% real.

-

@Bulb said in Science and Replication:

@sebastian-galczynski said in Science and Replication:

Anglo-Saxon countries

No, Czech jurisprudence is based on the German one just like Polish.

The Angles and Saxons were both Germanic people groups.

-

@sebastian-galczynski said in Science and Replication:

@Bulb said in Science and Replication:

But this kind of ‘innovation’ is a bit different from basic research. Applications like that “facebook but for cat owners” (if it was “facebook, but for cats”, it might have worked) are product development and that would better be funded by venture capital

They have one thing in common though: big risk. VCs also fund many bad ideas, usually only a tiny minority of startups succeeds, but those that do can offset the losses. With research funded by the government this model doesn't work, because the govt can't reap all the profits from a discovery (if any), and the losses are too visible to be tolerated by taxpayers. It also is very short-term in its thinking, much like a publicly traded corporation.

Yeah, there are two different levels. Basic research, from which no direct benefits are going to be reaped (though it's obviously important in the long term for the society at large) and the applications, for which there should be.

The former needs to be funded from public funds, for the latter private funds are more efficient (but in Europe the tendency is to make it more complicated for investors rather than less).

@Bulb said in Science and Replication:

But EU is a bureaucratic institution and bureaucrats won't give up their control, so they'll rather give out inefficient grants than actually support efficient private investments.

I don't think it has to do with bureaucrats hoarding power, pretty much the other way round. All EU grants are awarded on the level of national governments anyway, only the bulk sums and some rules are decided by the EU.

They are awarded on the national level, but they are binding it with rules. That is the power hoarding aspect I see.

I've seen the same failure mode on much lower levels, and the problem is mostly that the committees are so overregulated that they can't use their own judgement and exercise power.

Certainly. It permeates all levels and even large corporations. I see it as a general distrust in subordinates.

For example, they may even be explicitly forbidden to use any outside sources when evaluating applications, to the point that they will request attaching a signed quote for... city bus tickets*, so that they know that a bus ticket indeed costs $1, which they know anyway since they ride those buses every day. But they can't even rely on that knowledge, much less on their own judgement.

*this shit is 100% real.

Of course it is. I know. We have similar shit.

I was only arguing with the rule of law point. Rule of law allows giving the committees room to use their own judgement. It's different effects that prevent actually giving it.

-

@sebastian-galczynski said in Science and Replication:

@Bulb said in Science and Replication:

But this kind of ‘innovation’ is a bit different from basic research. Applications like that “facebook but for cat owners” (if it was “facebook, but for cats”, it might have worked) are product development and that would better be funded by venture capital

They have one thing in common though: big risk. VCs also fund many bad ideas, usually only a tiny minority of startups succeeds, but those that do can offset the losses. With research funded by the government this model doesn't work, because the govt can't reap all the profits from a discovery (if any), and the losses are too visible to be tolerated by taxpayers. It also is very short-term in its thinking, much like a publicly traded corporation.

That sounds like the opposite of every government I'm familiar with. In any case, that money goes into some "Science Foundation" or it funds universities where the actual research is happening and so people don't complain about expected returns because it's generally understood that you're paying for basic research.

Around here universities often hold patents on their stuff which does pay them back. The government itself isn't allowed to do that.

-

@HardwareGeek said in Science and Replication:

@Bulb said in Science and Replication:

@sebastian-galczynski said in Science and Replication:

Anglo-Saxon countries

No, Czech jurisprudence is based on the German one just like Polish.

The Angles and Saxons were both Germanic people groups.

But German law references the Holy Roman Empire of the German Nation, and the land where the Angles and Saxons met wasn't part of that, unlike the Czech lands, which were for much of their existence.

-

@Bulb said in Science and Replication:

Unconstitutional? Does your constitution really go into such detail as defining what the budget rules for a budget organization can and cannot look like? Because if not, defining the budget rules as ‘committee of 12, nominated by the prime minister with parliament's approval (or whatever), scores the proposals as it sees fit’ would be perfectly fine budget rules.

The constitution does not go into such detail, but the courts interpreting it do. And their idea of rule of law is pretty much that the state should operate like a computer - give predictable outputs for given inputs when interacting with the citizenry. Obviously the "as it sees fit" act would not fit this model.

Article 7 simply states that "Organs of the state operate on the basis, and within the bounds of law". The dominant interpretation is that "on the basis of law" means that arbitrary power should be restricted as much as possible. If there's no legal basis to choose A over B, then the bureaucrat exercising this choice is abusing his power.

-

@sebastian-galczynski Well, the administration should preferably give predictable outputs, but there needs to be some room for politics and as long as the committee is declared political rather than purely administrative, it should be able to get the power.

-

@Bulb said in Science and Replication:

I was only arguing with the rule of law point. Rule of law allows giving the committees room to use their own judgement. It's different effects that prevent actually giving it.

So you think it comes down to power-hungry guys at the top, whereas I think it comes down to a certain worldview, which can be summed up as "world as a (controllable) machine*". Maybe both reasons are at play. I've definitely seen this mindset infecting organizations without any top-down intervention. Its wrongness is really not so obvious to many people - you constantly encounter well-intentioned citizens petitioning for more, and more precise regulation.

-

@boomzilla said in Science and Replication:

people don't complain about expected returns because it's generally understood that you're paying for basic research

Remember this one?

-

@sebastian-galczynski said in Science and Replication:

whereas I think it comes down to a certain worldview

"dreaming of systems so perfect that no one will need to be good" *

-

@sebastian-galczynski said in Science and Replication:

All EU grants are awarded on the level of national governments anyway

No. It varies between programmes and funding objectives. Some are done that way, others are done directly, and some are done in multistage processes that are really complex. Rules for R&D are different to rules for construction are different to rules for …

-

@sebastian-galczynski said in Science and Replication:

@Bulb said in Science and Replication:

I was only arguing with the rule of law point. Rule of law allows giving the committees room to use their own judgement. It's different effects that prevent actually giving it.

So you think it comes down to power-hungry guys at the top, whereas I think it comes down to a certain worldview, which can be summed up as "world as a (controllable) machine*". Maybe both reasons are at play. I've definitely seen this mindset infecting organizations without any top-down intervention.

Yeah, it is probably both, plus general distrust in other people (which is related – replacing the untrusted people with rules).

Its wrongness is really not so obvious to many people - you constantly encounter well-intentioned citizens petitioning for more, and more precise regulation.

True. And it's most obvious when more and more precise regulation is proposed as a response to problems with people not following the current one. Why the hell do people think that changing the regulation will make people who don't follow it start to? So the end result is only that the ones that followed it already, and were not a problem, get less done, while the problem won't be solved. But many well-intentioned people still believe in that solution.

-

@sebastian-galczynski said in Science and Replication:

@boomzilla said in Science and Replication:

people don't complain about expected returns because it's generally understood that you're paying for basic research

Remember this one?

What about it?

-

Well, the Congress canceled it, because the only thing they cared about (having a bigger one than the Russians) ceased to matter, and they couldn't care less about any Higgs boson etc. Same thing with space exploration really.