This Slashdot article

-

@dhromed said:

@boomzilla said:

But was it really?

Yeah, it's how it works when you ask a ton of people for improvements. You get a list of faults.

Alahu Akhbar! Of course, but the question I was asking was: who were the ton of people? Blakeyrat (PBUH) claimed that MS asked Linux guys who didn't admin Exchange servers. Now, I wouldn't be surprised to find out that MS looked beyond their existing base to see what other systems did. That's a normal outcome from competition. But I suspect the average Exchange admin is different from blakeyrat's (PBUH) "normal user." So it's very possible that some or a lot of these guys would like to have something more scriptable. Especially in large environments.

Consider the earlier example of "review[ing] mailbox sizes across a mailbox store." I imagine a GUI is very handy for this if you have only a handful of users. In a large environment it's probably worthless. We know that MS likes to collect usage data. What are the odds that they realized very few people were using the GUI because it wasn't useful due to the limitations of a GUI? Maybe that feature is used more now by admins using PS to dump the data into Excel (Alahu Akhbar!). That's all hypothetical, of course, but I think it fits better with MS's modus operandi than blakeyrat's (PBUH) theory that they let the h8ers design one of their flagship products.

-

If anything, you're dedicated to your cause. A Jihad, if you will.

-

@blakeyrat said:

You're telling me the CLI is only intended as a computer protocol, but here it is, right from the horse's mouth that it is intended for humans.

These two are not mutually exclusive. A major strength of CLIs is that they can be used both interactively and for scripting. If you notice yourself typing some commands repetitively in the command line, you can just write down the same commands you used before into a text file, optionally create place-holders for varying parameters (i.e. command-line arguments) and later just run that file. How do you imagine doing that with a GUI?
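A minimal sketch of what that looks like in a POSIX shell. The function name `backup_dir` and its two parameters are hypothetical, chosen only for illustration; the point is that the commands typed interactively become the body, and the parts that vary become positional parameters.

```sh
#!/bin/sh
# Hypothetical example: the same commands one might type by hand,
# saved for reuse, with placeholders for the parts that vary.

backup_dir() {
    src=$1    # placeholder 1: directory to copy from
    dest=$2   # placeholder 2: directory to copy into

    # Refuse to run with missing arguments instead of guessing.
    if [ -z "$src" ] || [ -z "$dest" ]; then
        echo "usage: backup_dir SOURCE DEST" >&2
        return 2
    fi

    # Create the destination if needed, then copy the contents across.
    mkdir -p "$dest" &&
    cp -R "$src"/. "$dest"/
}
```

Saved in a file with `backup_dir "$@"` as its last line, this could be run later with whatever source and destination are needed that day.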

-

@spamcourt said:

These two are not mutually exclusive. A major strength of CLIs is that they can be used both interactively and for scripting. If you notice yourself typing some commands repetitively in the command line, you can just write down the same commands you used before into a text file, optionally create place-holders for varying parameters (i.e. command-line arguments) and later just run that file. How do you imagine doing that with a GUI?

Apple's approach was to have programs self-post IPC messages. All programs needed an Apple Event (structured IPC) handler to receive key messages from the OS (the required suite), and you'd simply recognise more messages for scripting purposes.

The next step was self-posting: for example, when the user selects Edit → Paste, the program would generate an Apple Event requesting a paste operation, and post that to itself. The Paste command would always be processed by the Apple Event handler, regardless of the source of the command (user interaction, or external messages from direct IPC or scripting).

At this stage, you could tell Script Editor to begin recording: the OS was aware of these self-posted messages, and each one would be resolved to a scripting command via the program's scripting dictionary (one or more resources inside the program binary) and inserted into your script.

You could then stop recording, and swap out some of the recorded data with variables.

I don't know how this works these days — this was how classic Mac OS handled it. If you remember Windows 3.1, you might recall Microsoft's approach, Macro Recorder! I have used it, but it really did suck.

Maybe we need a wiki devoted to all the different ideas out there in the industry, including all the systems that are limping along and largely forgotten (e.g. RISC OS, BeOS (Haiku) and AmigaOS (MorphOS)), those grossly distorted from how they were originally (e.g. Mac OS) and those that are well and truly dead.

-

@Daniel Beardsmore said:

I don't know how this works these days — this was how classic Mac OS handled it. If you remember Windows 3.1, you might recall Microsoft's approach, Macro Recorder! I have used it, but it really did suck.

I vaguely remember using it with Object Packager to make some truly catastrophic crashes. Oh, the joys of using someone else's computer with no data on it that you care about!

-

@flabdablet said:

@blakeyrat said:

The real problem with .NET is Microsoft won't fucking commit to it.

Since PowerShell is based on it, and the PowerShell designer and project lead is now the lead architect for their server operating systems, that's probably fixed.

Maybe, but the stuff I care about (XNA, DirectShow which never had proper .NET bindings, WinForms and WebForms) are all in the shitter right now. Either expressly cancelled, or have seen no work done in years.

-

@flabdablet said:

But those 50 versions were presumably created by 50 different authors, each of whom was clearly dissatisfied in some way with the ones available before theirs.

Isn't the whole point of the open source feel-good bullshit that you can fix the existing one instead of making a new one?

@flabdablet said:

I presume you did then do it your damned self. Did you happen to make the resulting library - the only one that actually works, apparently - available anywhere? Because that would be a generous thing to do, even if you then abandoned it completely and took no responsibility at all for maintaining it.

No, because I didn't even bother to make it a library, I just wrote exactly the functions my application actually needed. In any case, I didn't own the code.

-

@flabdablet said:

@blakeyrat said:

Why? Because the fallacy here was they were thinking, "if only we could appeal to that last 10% of people who didn't like the original game...". But they didn't ask the 90% who liked it why they liked it, they only asked the people who didn't like it... they ended up (maybe) appealing to the 10% while turning off the 90%.

You did notice you just undermined your entire argument for Git not having a CLI, yes?

When did I make that argument? I wasn't aware I had argued for that.

-

@spamcourt said:

@blakeyrat said:

You're telling me the CLI is only intended as a computer protocol, but here it is, right from the horse's mouth that it is intended for humans.

These two are not mutually exclusive.

They are, if you spend a minute to think about it. Otherwise, answer this question: how do you improve the user experience of the program without breaking scripts that use the program?

The only possible way to do that is to separate the functions. A CLI is a *user* interface. User interfaces should evolve and change frequently, to increase their usability. A *scripting* interface has exactly the opposite need: it should remain as stable as possible for as long as possible so scripts don't break.

Unless Linux has some different way of solving that problem (pro-tip: they don't), the best solution is to separate the two entirely. This is also the solution chosen by Mac (Classic, then inherited by OS X, although God-knows how well it works after years of Unix-developer stewardship) and Windows. The fact that those two OSes represent something like 98% of the OS market might serve as a hint to you that they're doing *something* right.

@spamcourt said:

If you notice yourself typing some commands repetitively in the command line, you can just write down the same commands you used before into a text file, optionally create place-holders for varying parameters (i.e. command-line arguments) and later just run that file. How do you imagine doing that with a GUI?

On Mac Classic? Open Script Recorder, a utility app that comes with the OS. Hit Record. Perform your actions. Hit Stop. Hit Save. Double-click the resulting file any time you need to run those actions. It was that fucking simple. And it's not from some crazy sci-fi future, but from the glorious past.

-

@blakeyrat said:

@spamcourt said:

@blakeyrat said:

You're telling me the CLI is only intended as a computer protocol, but here it is, right from the horse's mouth that it is intended for humans.

These two are not mutually exclusive.

They are, if you spend a minute to think about it. Otherwise, answer this question: how do you improve the user experience of the program without breaking scripts that use the program?

I don't see why the coupling is necessarily as tight as you assume. The same issues apply to any other interface, including an API. In particular, you add something new instead of changing or removing something old. Like all of the FooEx-style COM interfaces.

-

@blakeyrat said:

User interfaces should evolve and change frequently, to increase their usability.

There's a significant proportion of users who want nothing more than for things to stay exactly the same as they used to be. For them, usability is promoted by changing nothing, no matter how poor it is. These tend to be people who have pigeonhole minds rather than model minds: they fit each fact into its own pigeonhole, keeping it safely away from contact with all other facts, whereas people who think in terms of models will try to fit facts into an overall model of the world that explains those facts, allowing interpolation and extrapolation. The pigeonhole approach allows for great feats of memorisation, but the model approach is far more adaptable to new situations.

Good programmers, engineers and scientists (and you and me!) tend to be model builders, but lots of other people simply aren't. Their brains don't seem to work that way.

-

@dkf said:

For them, usability is promoted by changing nothing, no matter how poor it is.

That doesn't even make sense. In fact I think it's an oxymoron.

@dkf said:

These tend to be people who have pigeonhole minds rather than model minds — they fit each fact into its own pigeonhole, keeping it safely away from contact with all other facts, whereas people who think in terms of models will try to fit facts into an overall model of the world that explains those facts, allowing interpolation and extrapolation. The pigeonhole approach allows for great feats of memorisation, but the model approach is far more adaptable to new situations.

This makes a lot more sense.

One of the reasons I can't use a CLI effectively is because I don't have the rote memorization it requires. The nice thing about a GUI is that you don't need to use rote memorization; you can use your spatial memorization for much of the work and, in human beings at least, spatial memorization is much, much, much more powerful.

@dkf said:

Good programmers, engineers and scientists (and you and me!) tend to be model builders, but lots of other people simply aren't. Their brains don't seem to work that way.

If you're implying that Linux CLI users are not good programmers or engineers, prepare for fisticuffs. That said, I'd agree with you.

-

@blakeyrat said:

@spamcourt said:

@blakeyrat said:

You're telling me the CLI is only intended as a computer protocol, but here it is, right from the horse's mouth that it is intended for humans.

These two are not mutually exclusive.

They are, if you spend a minute to think about it. Otherwise, answer this question: how do you improve the user experience of the program without breaking scripts that use the program?

The only possible way to do that is to separate the functions. A CLI is a *user* interface. User interfaces should evolve and change frequently, to increase their usability. A *scripting* interface has exactly the opposite need: it should remain as stable as possible for as long as possible so scripts don't break.

Unless Linux has some different way of solving that problem (pro-tip: they don't), the best solution is to separate the two entirely. This is also the solution chosen by Mac (Classic, then inherited by OS X, although God-knows how well it works after years of Unix-developer stewardship) and Windows. The fact that those two OSes represent something like 98% of the OS market might serve as a hint to you that they're doing *something* right.

@spamcourt said:

If you notice yourself typing some commands repetitively in the command line, you can just write down the same commands you used before into a text file, optionally create place-holders for varying parameters (i.e. command-line arguments) and later just run that file. How do you imagine doing that with a GUI?

On Mac Classic? Open Script Recorder, a utility app that comes with the OS. Hit Record. Perform your actions. Hit Stop. Hit Save. Double-click the resulting file any time you need to run those actions. It was that fucking simple. And it's not from some crazy sci-fi future, but from the glorious past.

That solution is just as vulnerable to UI changes as CLI-style interfaces, and it isn't as powerful. It's useful, definitely - I've used a similar feature in Notepad++ - but it's not a general purpose solution for scripting. Consider:

- How do you represent 'do something to everything in this variable-sized list'?

- If a program updates and control IDs change, your script can break.

- If control IDs aren't systematic it might be difficult to branch well. Say there are three radio buttons and you want to press a different one depending on some other state. Hope they're named something like 'radio1', 'radio2' etc. so that you can branch to them easily.

- If programs have custom controls, then they need to package and send the user-action event themselves, for the OS to record. That means scripts don't necessarily match up to what you did, because programs can take an arbitrarily long amount of time to make one of those objects.

- The editor for these script files must be getting pretty damned complex if it needs to do variables, branching and looping. Is that all GUI too, or does the script you've recorded turn into a textual representation that you can edit easily? I don't know about you, but I'm not so sure that dragging around boxes in a flowchart is a great way to program.
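For comparison, the first bullet is a short loop in a textual shell script. A minimal sketch in POSIX sh; `for_each_line` and `show_item` are hypothetical names invented for illustration, and the "list" is assumed to arrive one item per line on standard input.

```sh
#!/bin/sh
# Hypothetical helper: run one action on every item of a list whose
# length is not known in advance (one item per line on stdin).

for_each_line() {
    action=$1
    while IFS= read -r item; do
        "$action" "$item"      # the action receives the item as its argument
    done
}

# Example action: print the item wrapped in angle brackets.
show_item() {
    printf '<%s>\n' "$1"
}
```

Something like `ls | for_each_line show_item` would then handle three entries or three thousand without the script changing.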

Windows and Mac OS have something like 98% of the /desktop/ OS market. If you consider only markets where scriptability is an important feature, surprise surprise linux is more popular.

I think you're underestimating exactly how easy it is to preserve backwards compatibility in a CLI. You've got a tool that does something to input. What 'tool some input here' does is unlikely to need to change to improve the UI. When you start adding options the same thing happens - why would you need to change the behaviour of grep's recurse option? Usually improvements come in the form of new features and options. Or new programs that support the interface of the old tool but do more with it - like flex and bison over lex and yacc (lex/flex generate lexers for a language from a regex-based description of its tokens; yacc/bison generate LALR parsers for a language from a BNF-style grammar for it). If you want to completely overhaul the interface, you make a new tool that does the same thing with a totally different interface, and old scripts will continue to work while the new tool has a fancy-pants new interface that scripts can start using.
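As a rough illustration of "improvements come as new options", here is a sketch in POSIX sh. The tool `count_matches` is hypothetical: imagine its first release accepted only a pattern, and a later release added an opt-in `-i` flag. Calls written against the first release keep behaving exactly as before.

```sh
#!/bin/sh
# Hypothetical tool: count the input lines that contain PATTERN.
# "Version 1" accepted only:   count_matches PATTERN
# "Version 2" adds an optional -i flag; calls without it are unchanged.

count_matches() {
    grep_flags="-c"          # always count matching lines
    OPTIND=1                 # reset so the function can be called repeatedly
    while getopts i opt; do
        case $opt in
            i) grep_flags="-ci" ;;   # new, opt-in behaviour
            *) echo "usage: count_matches [-i] PATTERN" >&2; return 2 ;;
        esac
    done
    shift $((OPTIND - 1))

    # The original positional interface is untouched.
    grep "$grep_flags" -- "$1"
}
```

An old script that runs `count_matches foo` never sees the new flag; only scripts that ask for `-i` get the new behaviour.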

-

@blakeyrat said:

I can't use a CLI effectively is because I don't have the rote memorization it requires.

You can program. Clearly you're capable of remembering all these arbitrary keywords and API method names, and the ins and outs of how a language works. What's different about a command line?

-

@j_p said:

That solution is just as vulnerable to UI changes as CLI-style interfaces, and it isn't as powerful.

It's a lot more interesting than you imagine. The technology—built into the OS since the early 90s—is called the Open Scripting Architecture, which maps human-language terminology to binary IPC message data. Apple designed it to support multiple programming languages (with AppleScript being the native offering) and multiple human languages per programming language — in the end result, most of the world stuck with AppleScript in English. Each scriptable software application binary contains a resource defining its list of its classes and methods, data types, global functions etc with the human-language terms and the mappings to IPC binary data. This is used to automatically build the documentation, and to allow human-language scripting to work with the program's IPC API. The IPC system supports complex nested object descriptors, leading to commands such as:

tell application "foo" to set the colour of the first letter of the first word of every paragraph of every window to "red"

You're not scripting a GUI, you're controlling a program by an API, e.g.

tell application "Finder" to move file (some path to file) to (some destination)

Scripts can refer to any application, they can control multiple applications, they can accept events (e.g. drag and drop files onto them, or serve as event handlers for programs) and can be run from within a program (many programs had a menu dedicated to this) or directly from the file system.

It's the Mac equivalent of the Windows Scripting Host, except it's orders of magnitude better designed, and not treated like a dirty secret; it's been suggested that AppleScript saved the Mac when Windows started to threaten its established base in the publishing industry. The OS has a lot more built-ins than you get with WSH, including clipboard access, file and folder choosing, etc.

Recording is simply a bonus feature to build scripts based on the description in my preceding post. I don't imagine most programs bothered with the recording aspect, but scripting support was common. Even Microsoft Outlook Express for Mac supported scripting and event handlers.

-

@dhromed said:

@blakeyrat said:

I can't use a CLI effectively is because I don't have the rote memorization it requires.

You can program. Clearly you're capable of remembering all these arbitrary keywords and API method names, and the ins and outs of how a language works. What's different about a command line?

You disappoint me. The obvious answer is that CLIs don't have IDE environments. Namely, they don't have inspection mechanisms such as autocomplete with parameters or popup mini-documentation.

We need better CLI interfaces.

Edit: Powershell may prove me wrong. I don't have experience with it and I should have checked first.

-

@Daniel Beardsmore said:

@j_p said:

That solution is just as vulnerable to UI changes as CLI-style interfaces, and it isn't as powerful.

It's a lot more interesting than you imagine. The technology—built into the OS since the early 90s—is called the Open Scripting Architecture, which maps human-language terminology to binary IPC message data. Apple designed it to support multiple programming languages (with AppleScript being the native offering) and multiple human languages per programming language — in the end result, most of the world stuck with AppleScript in English. Each scriptable software application binary contains a resource defining its list of its classes and methods, data types, global functions etc with the human-language terms and the mappings to IPC binary data. This is used to automatically build the documentation, and to allow human-language scripting to work with the program's IPC API. The IPC system supports complex nested object descriptors, leading to commands such as:

tell application "foo" to set the colour of the first letter of the first word of every paragraph of every window to "red"

You're not scripting a GUI, you're controlling a program by an API, e.g.

tell application "Finder" to move file (some path to file) to (some destination)

Scripts can refer to any application, they can control multiple applications, they can accept events (e.g. drag and drop files onto them, or serve as event handlers for programs) and can be run from within a program (many programs had a menu dedicated to this) or directly from the file system.

It's the Mac equivalent of the Windows Scripting Host, except it's orders of magnitude better designed, and not treated like a dirty secret; it's been suggested that AppleScript saved the Mac when Windows started to threaten its established base in the publishing industry. The OS has a lot more built-ins than you get with WSH, including clipboard access, file and folder choosing, etc.

Recording is simply a bonus feature to build scripts based on the description in my preceding post. I don't imagine most programs bothered with the recording aspect, but scripting support was common. Even Microsoft Outlook Express for Mac supported scripting and event handlers.

That is pretty neat. Of course, if you're writing a script in a text file to control GUI apps, you're still doing the same kind of composable-text-that-means-things approach that CLIs use. It's a cool way of allowing for that kind of thing in a GUI environment, though. I guess the biggest problems with it would be that programs have to specifically export their scriptability - programs are only as scriptable as they've put effort into being, whereas the CLI approach essentially has scripting for free - anything that a user can do with the program is by-definition scriptable. I'm not so crash-hot on natural language as the descriptor either.

-

@Zecc said:

@dhromed said:

@blakeyrat said:

I can't use a CLI effectively is because I don't have the rote memorization it requires.

You can program. Clearly you're capable of remembering all these arbitrary keywords and API method names, and the ins and outs of how a language works. What's different about a command line?

You disappoint me. The obvious answer is that CLIs don't have IDE environments. Namely, they don't have inspection mechanisms such as autocomplete with parameters or popup mini-documentation.

We need better CLI interfaces.

Edit: Powershell may prove me wrong. I don't have experience with it and I should have checked first.

They do have tab-completion and the ability to run 'whatever -h' or /? or whatever syntax is standard in your environment for asking for help. Surely blakey can at least remember the /names/ of the programs he's using. And, of course, there's no reason you couldn't make an inspection mechanism for a CLI. Or just use multiple windows and look at the help for whatever command you're using - at least, on a unix box you can have another shell open with the man page for the command loaded.

-

@Zecc said:

You disappoint me. The obvious answer is CLI interfaces don't have IDE environments. Namely, they don't have inspection mechanisms like eg. autocomplete with parameters or popup mini-documentation.

We need better CLI interfaces.

Edit: Powershell may prove me wrong. I don't have experience with it and I should have checked first.

Bash, at least, has autocomplete with parameters.

Many (most?) applications that display a console / terminal / CLI have tabs where you can have multiple consoles going. I use Konsole, and it's very easy to move to a different tab and pull up the man page for something.

-

@j_p said:

That is pretty neat. Of course, if you're writing a script in a text file to control GUI apps, you're still doing the same kind of composable-text-that-means-things approach that CLIs use.

See script recording.

@j_p said:

I guess the biggest problems with it would be that programs have to specifically export their scriptability - programs are only as scriptable as they've put effort into being, whereas the CLI approach essentially has scripting for free - anything that a user can do with the program is by-definition scriptable.

Newsflash — writing good software is hard! This also ties in with the problem of people giving away free throwaway programs instead of everyone working together to focus on a single piece of quality software. This is not a technology problem, this is a human problem. People who write CLI programs can't even make their mind up on the format of the argument syntax!

@j_p said:

I'm not so crash-hot on natural language as the descriptor either.

I take it you missed the part about the framework being programming-language-independent? People went with AppleScript out of choice. There was also a third-party JavaScript engine that allowed you to script the Mac in JavaScript if you preferred.

Of course, that is only relevant within the context of OSA in OS X — for example if you wanted to apply the OSA concepts to D-Bus under *NIX, you could choose any language you wanted.

-

@Zecc said:

We need better CLI interfaces.

Edit: Powershell may prove me wrong. I don't have experience with it and I should have checked first.

Don't bother. PowerShell is just bash with a bad case of second-system effect.

-

@blakeyrat said:

And moreover, since I'm on my soapbox, why shouldn't Git be able to handle CAD drawings, or CG animation descriptions, or audio filters? Why the fuck NOT?

Actually, it handles them just fine. You can register diff handlers for various file types. As long as your CAD program, CG program, or audio filter program has a way of being asked to show differences between two files, it's easy enough to register a diff handler so you can see useful differences between them. It just happens to have a text-diff program built in, but even that can be overridden.

-

@blakeyrat said:

If you're implying that Linux CLI users are not good programmers or engineers, prepare for fisticuffs. That said, I'd agree with you.

I'm talking about a broad class of *users* who all think the same way. I think a majority of programmers (or at least a majority of programmers who are introspective enough to care) think differently. (Most of the originators of WTFs from the main site would appear to be pigeonholers, of course.)

It might also be the case that some people think in a sophisticated way within their comfort area, and pigeonhole-fashion elsewise; I wonder if I do that too and I'm merely too stuck in my ways to see it?

-

@blakeyrat said:

One of the reasons I can't use a CLI effectively is because I don't have the rote memorization it requires.

That's what the manual is for; remembering all the little details so you don't have to. To find things in the manual, use apropos or look online (of course).

-

@dkf said:

That's what the manual is for; remembering all the little details so you don't have to. To find things in the manual, use apropos or look online (of course).

That's the whole point. In a GUI, the computer tells you what you can do, and gives you an obvious means to do it.

With the CLI, you have to remember the name of the command, then bring up the argument list, scrutinise it for five minutes trying to remember what exactly it was you were supposed to type in to get the exact effect you want (as programs rarely "do the right thing" by default, or they do a lot of things and you need to specify exactly what you want — think of the obstinate replicators on Star Trek that demand exact parameters first). Then you have to double-check whether long args use - or --, make sure you've checked whether the key verb parameter takes a dash or not (e.g. apt-get update does not -- it's not apt-get --update or apt-get-update) — all sorts of strange syntax contortions to trip over.

Best example: Robocopy. Instead of "doing the right thing" (as Explorer's copy/move operations mostly do these days), you have an argument list of Tolstoy proportions, with all the sensible options off by default, and absurdities like infinite retry for fatal errors such as permissions problems. (Explorer's approach is getting quite good now, but it's still got serious problems of its own, especially when dealing with MAX_PATH.)

wget is another one where you have to scrutinise the argument list every time you want to do anything besides download one small file — how do I ensure retry is set correctly, how do I ensure it doesn't create a load of pointless subdirectories for the domain and URL path, things like that. While a GUI tends to keep the parameters contextual (or offer a wizard), a single command-line utility can have a massive argument vocabulary that's so time-consuming to analyse that the end-result is far more time is wasted than if you'd used a graphical tool.

Command lines are fantastic for the most common tasks, but for anything that you want to do infrequently, the burden of understanding and specifying the parameters becomes excessive.

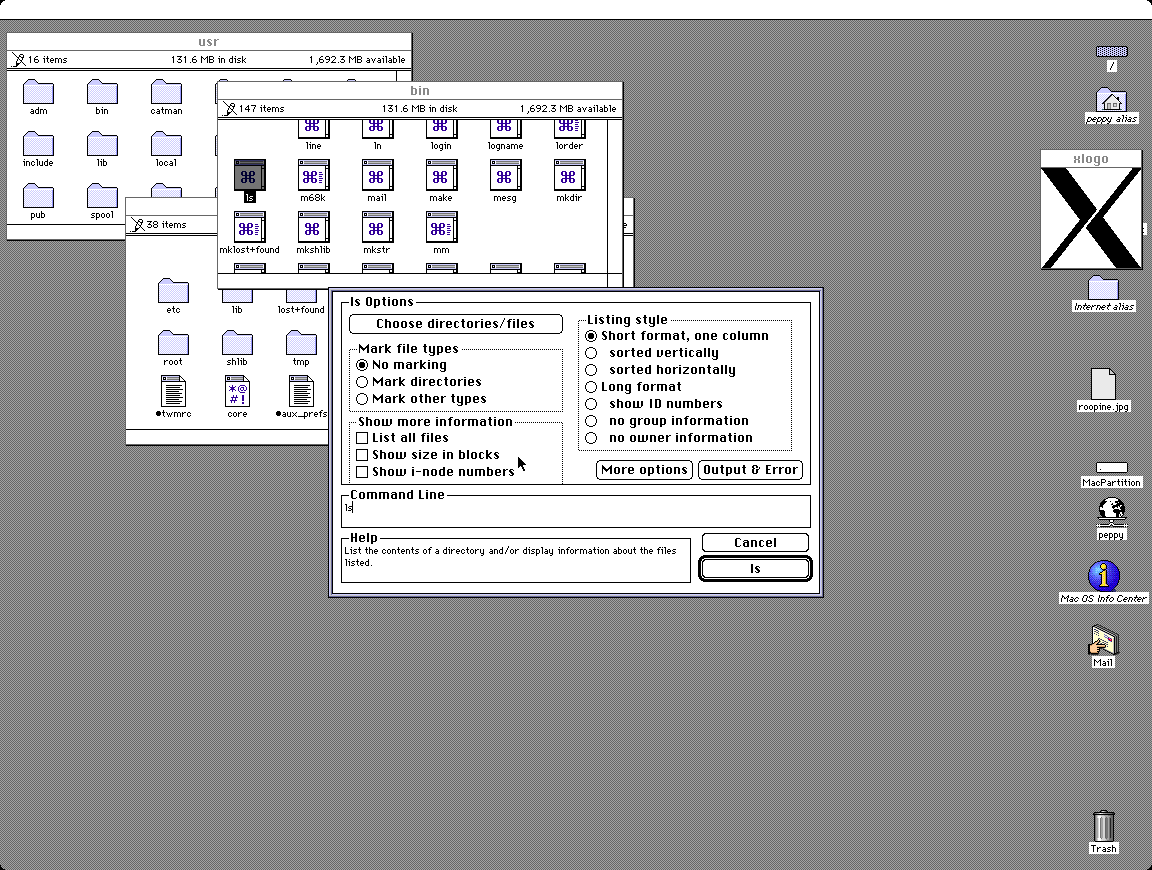

Apple had an interesting idea, with Commando in A/UX — it would present all the parameters to a UNIX command in a dialog box:

(From Toasty Tech — Apple A/UX)

-

@j_p said:

That solution is just as vulnerable to UI changes as CLI-style interfaces, and it isn't as powerful. It's useful, definitely - I've used a similar feature in Notepad++ - but it's not a general purpose solution for scripting. Consider:

- How do you represent 'do something to everything in this variable-sized list'?

- If a program updates and control IDs change, your script can break.

- If control IDs aren't systematic it might be difficult to branch well. Say there are three radio buttons and you want to press a different one depending on some other state. Hope they're named something like 'radio1', 'radio2' etc. so that you can branch to them easily.

- If programs have custom controls, then they need to package and send the user-action event themselves, for the OS to record. That means scripts don't necessarily match up to what you did, because programs can take an arbitrarily long amount of time to make one of those objects.

- The editor for these script files must be getting pretty damned complex if it needs to do variables, branching and looping. Is that all GUI too, or does the script you've recorded turn into a textual representation that you can edit easily? I don't know about you, but I'm not so sure that dragging around boxes in a flowchart is a great way to program.

You don't know a damned thing about how AppleScript worked.

BTW, Notepad++ can't even *draw menus* correctly, so it's not really a surprise its scripting feature is fucked.

@j_p said:

Windows and Mac OS have something like 98% of the /desktop/ OS market. If you consider only markets where scriptability is an important feature, surprise surprise linux is more popular.

If scripting were easy enough that anybody could do it, then it would be used on all types of computers.

This is a self-fulfilling prophecy: Linux scripting is so difficult that only those who devote their lives to maintaining Linux servers can pull it off. Therefore, only servers support scripting well. Gee, when you put it that way, it sounds really fucking stupid!

@j_p said:

I think you're underestimating exactly how easy it is to preserve backwards compatibility in a CLI interface. You've got a tool that does something to input. What 'tool some input here' does is unlikely to need to change to improve the UI. When you start adding options the same thing happens - why would you need to change the behaviour of grep's recurse option?

I don't know what grep's recurse option does, so I can't answer your exact question. But how about this example: you have to change the CLI command because you find out it has a data-losing bug, or a security flaw.

@j_p said:

Usually improvements come in the form of new features and options. Or new programs that support the interface of the old tool but do more with it - like flex and bison over lex and yacc (lex/flex generate lexers for a language from a regex-based description of its tokens; yacc/bison generate LALR parsers for a language from a BNF-style grammar for it).

So you're saying:

1) The only way to improve a CLI program's interface is to write an entirely new CLI program

2) ... which must then support the exact same UI as the old one

Which sums up to: there is no way to improve a CLI program's interface. Which is, I believe, the exact argument I've been making.

@j_p said:

If you want to completely overhaul the interface, you make a new tool that does the same thing with a totally different interface, and old scripts will continue to work while the new tool has a fancy-pants new interface that scripts can start using.

Oh, so the CLI has a versioning system so the scripts can specify which version they were built for? That is sure a handy feature! Too bad you fucking made it up and it doesn't exist.

Either that, or you're saying the system needs to have literally every version of every CLI tool ever made on it simultaneously to avoid breaking old scripts. And if one of those tools uses the command "search" (even if it does useless crap, or nobody's used it in decades) you can never, ever, ever make a new "search" that works better because of the polluted namespace.

To be fair though: your idea here could actually be made to work, if: 1) there were some form of versioning system, 2) there were some enforcement that scripts were using a "version marker" or however you implement 1, 3) the app disk size were small enough that it's not a big deal. It still breaks in the case of changes due to security, as you have to change the older versions as well.

-

@dhromed said:

@blakeyrat said:

I can't use a CLI effectively because I don't have the rote memorization it requires.

You can program. Clearly you're capable of remembering all these arbitrary keywords and API method names, and the ins and outs of how a language works. What's different about a command line?

That's why I use an IDE with things like intellisense and auto-complete and right-click to go to definition and all that niceness.

Also: I probably *do* look up stuff more than other developers. Not that I've really measured. Why would you assume I don't?

As for learning a language, even a programming language, I honestly think that's a different skill than rote memorization. Probably somewhere in between rote and spatial memory. Think about how humans evolved: the oldest circuits in the brain were just for remembering where physical objects are (spatial). 100,000 years ago we were talking to each other and inventing languages (and hunter-gatherers knew a LOT of languages) so we didn't get killed by insulting the tribe next door. Only recently, in the past 2000 years, which is probably generous, were we actually memorizing facts with no (direct) biological survival component.

And honestly: I'm not even 100% sure my memory *is* worse than other developers. It's possible I'm just more honest and self-aware about it. Who knows? There's no way to directly compare two brains. And The Emperor Has No Clothes wasn't just a fairy tale. I know from long experience how easy it is for developers to delude themselves-- remember Mr. Lotus Notes in the yellow boxing gloves who came here genuinely bragging about how great Lotus Notes was?

-

@blakeyrat said:

So you're saying:

1) The only way to improve a CLI program's interface is to write an entirely new CLI program

2) ... which must then support the exact same UI as the old one

Why do you think this is any different from any other sort of API? Before you say that you don't, recall that this was a big reason why you thought that a CLI was unfit for anything but actual command line / scripting usage.

-

@Daniel Beardsmore said:

You're not scripting a GUI, you're controlling a program by an API, e.g.

tell application "Finder" to move file (some path to file) to (some destination)

^- this is the key point that j_p wasn't getting.

You don't script "click the second radio button on radio button group 'Gender'", you script "set the gender to male". Now when the program adds a new gender (indeterminate), your script didn't break. Magic.

This is what happens when people actually *think* about the OS and how it should work and spend 75% of the time in the designing room and only 25% in the coding room. This is the difference between a professionally-designed OS and a pile of glommed-together shit.

-

@j_p said:

I guess the biggest problems with it would be that programs have to specifically export their scriptability - programs are only as scriptable as they've put effort into being,

Mac programs were scriptable, because it would be stupid for them not to be. If you implemented AppleEvents, you got thousands of features from the OS for free. (It's the same argument for using native widgets as opposed to drawing your own.)

And all the accessibility features of Mac Classic, IIRC, were based on AppleEvents, so if you wanted to sell your application to the government you were required to implement it, basically. (How does Linux handle this, BTW? Do Linux GUIs even *have* accessibility features? Do they meet the government standards?)

@j_p said:

whereas the CLI approach essentially has scripting for free - anything that a user can do with the program is by-definition scriptable.

That's true of AppleEvents also. Unless, again, the developer of the software expended MORE effort to make his program WORSE. But you can't control for that.

@j_p said:

I'm not so crash-hot on natural language as the descriptor either.

Of course! That might make it too easy to use! And we don't want that rabble on our precious computers! We only want super geeky people who love typing bullshit in 80x25 text windows!

You hate it *because* Apple endeavored to make it accessible to everybody. Fuck that attitude. Computers are for human beings. Apple understood that. Ubuntu gives it lip-service. You obviously still have some issues with the concept.

-

@j_p said:

They do have tab-completion and the ability to run 'whatever -h' or /? or whatever syntax is standard in your environment for asking for help. Surely blakey can at least remember the /names/ of the programs he's using.

If I had an OS with a proper spatial windowing system, like Mac Classic, I wouldn't have to.

@j_p said:

And, of course, there's no reason you couldn't make a inspection-mechanism for a CLI.

Yeah there is: CLI developers hate discoverability, they hate usability, and they hate change.

@j_p said:

at least, on a unix box you can have another shell open with the man page for the command loaded.

But you can't open two shells at once without a GUI!

And in any case, if you think "man" pages are good documentation, you and I will never agree on anything ever.

-

@Circuitsoft said:

Actually, it handles them just fine.

Obligatory post about how people who start posts with "actually," are inevitably douchebags.

@Circuitsoft said:

You can register diff handlers for various file types. As long as your CAD program, CG program, or Audio filter program have a way of being asked to show differences between two files, it's easy enough to register a diff handler so you can see useful differences between them. It just happens to have a text-diff program built-in, but even that can be overridden.

And how do you view diffs of a 3D model using Git, which only ships a CLI interface?

I get what you're saying: the internals do support it if someone else does a shitload of work. But that shitload of work also includes *building a whole new UI for Git*. If Git was a complete product, it'd already have a GUI and that work wouldn't be nearly as arduous.

I don't really have any technical objections to Git or how it works. (Well, one beef: being able to work offline is nice, being *forced* to work offline is annoying.) My problem with Git is that the UI is fucking awful, and the developers released it to the world, crowing about how great it was, despite it obviously being only about 1/10th of the way done.

-

@dkf said:

That's what the manual is for; remembering all the little details so you don't have to. To find things in the manual, use

apropos

or look online (of course).

The help command is named "apropos"? DISCOVERABILITY!

-

@blakeyrat said:

@Circuitsoft said:

You can register diff handlers for various file types. As long as your CAD program, CG program, or Audio filter program have a way of being asked to show differences between two files, it's easy enough to register a diff handler so you can see useful differences between them. It just happens to have a text-diff program built-in, but even that can be overridden.

And how do you view diffs of a 3D model using Git, which only ships a CLI interface?

I get what you're saying: the internals do support it if someone else does a shitload of work. But that shitload of work also includes *building a whole new UI for Git*. If Git was a complete product, it'd already have a GUI and that work wouldn't be nearly as arduous.

The GUI is irrelevant here. The point is that you can call out to any program you want to use for diffs. That will work if you use git via the CLI or some other GUI or whatever. Are you saying that a VCS isn't "complete" unless it can handle any arbitrary file type, without any sort of 3rd party add on? Or were you just being incoherent again trying to rationalize past comments?

-

@boomzilla said:

Are you saying that a VCS isn't "complete" unless it can handle any arbitrary file type, without any sort of 3rd party add on?

Diffing an image or an audio file is very hard (except the dumb-ass byte-by-byte way) and I imagine that it's even worse for video. The hard part is truly working out what “the same” means at all; it's one of those things that's “obvious” until you try to actually do it and then it's not obvious at all.

-

@blakeyrat said:

@j_p said:

That solution is just as vulnerable to UI changes as CLI-style interfaces, and it isn't as powerful. It's useful, definitely - I've used a similar feature in Notepad++ - but it's not a general purpose solution for scripting. Consider:

- How do you represent 'do something to everything in this variable-sized list'?

- If a program updates and control IDs change, your script can break.

- If control IDs aren't systematic it might be difficult to branch well. Say there are three radio buttons and you want to press a different one depending on some other state. Hope they're named something like 'radio1', 'radio2' etc. so that you can branch to them easily.

- If programs have custom controls, then they need to package and send the user-action event themselves, for the OS to record. That means scripts don't necessarily match up to what you did, because programs can take an arbitrarily long amount of time to make one of those objects.

- The editor for these script files must be getting pretty damned complex if it needs to do variables, branching and looping. Is that all GUI too, or does the script you've recorded turn into a textual representation that you can edit easily? I don't know about you, but I'm not so sure that dragging around boxes in a flowchart is a great way to program.

You don't know a damned thing about how AppleScript worked.

Not ever having used it, obviously not. I took a stab based on what I'd read so far, apparently it worked differently (Daniel Beardsmore actually explained it, notice), and then I discussed some of the implications of /that/ way of implementing it. Most of those criticisms don't apply to the programs-export-verbs model, it's true (although I'm not entirely clear here on how the recorder turns actions you're taking in the UI into verbs to record). And if you want to do anything interesting in a script, you need to open it up in a text editor and write some text. Because it's basically a batch-processing CLI. Programs are programs, options to that program are verbs. The only difference is that it's got a way of interpreting a sequence of actions you take in the GUI and turning it into a script, which is only useful if you want to literally do the exact same thing over and over again.

BTW, Notepad++ can't even *draw menus* correctly, so it's not really a surprise its scripting feature is fucked.

I didn't say its scripting features are fucked. It's got a record-my-input-then-play-it-back feature, which is what I thought you were describing before. Its scripting features are entirely different. And yes, it does some weird things with menus, and the MRU tab switcher is just wrong on every level. It's still the best text editor I've found to date.

I'm amused at your "Doesn't do this thing correctly, therefore the entire thing is wrong" approach. Visual Studio doesn't do /tabs/ correctly. The 'next tab' shortcut goes in MRU order, and tab order in the tab bar isn't sorted in MRU, so you bounce around tabs at semi-random. Is it a totally awful IDE?

@j_p said:

Windows and Mac OS have something like 98% of the /desktop/ OS market. If you consider only markets where scriptability is an important feature, surprise surprise linux is more popular.

If scripting were easy enough that anybody could do it, then it would be used on all types of computers.

This is a self-fulfilling prophecy: Linux scripting is so difficult that only those who devote their lives to maintaining Linux servers can pull it off. Therefore, only servers support scripting well. Gee, when you put it that way, it sounds really fucking stupid!

I don't even understand what you're getting at here. My point is that CLI-style environments are superior for scripting. I present as evidence the fact that in environments where scripting is regularly used, people tend to use operating systems that provide CLI-based, pervasive scripting. Because it's better for being able to write scripts. You seem to be claiming that users don't know that scripting will solve some of their problems (probable), but I have literally no idea where the chain of logic goes from there. Again, do you really think that the only reason linux is so popular for web servers, including those for giant corporate entities like Facebook and Google that would benefit incredible amounts from even minor technical advantages, is because it's free? You make a claim like that and then point out the Windows/Mac dominance of the desktop OS market as if network effects and being there first didn't play a part?

@blakeyrat said:

@j_p said:

I think you're underestimating exactly how easy it is to preserve backwards compatibility in a CLI interface. You've got a tool that does something to input. What 'tool some input here' does is unlikely to need to change to improve the UI. When you start adding options the same thing happens - why would you need to change the behaviour of grep's recurse option?

I don't know what grep's recurse option does, so I can't answer your exact question. But how about this example: you have to change the CLI command because you find out it has a data-losing bug, or a security flaw.

grep does regular-expression-based searches. The recurse option makes it descend into directories when searching - by default it only searches the files you name.

And in that scenario, it depends. If the bug is incidental to operation (say, if you pass it too much data it buffer-overflows) you just fix the bug. Any scripts that break were doing something they shouldn't have been doing anyway, and there probably aren't many. The tricky case, of course, is when the bug is implicit in the command - the gets() function in C is the best example I can come up with off the top of my head, even if it's not a CLI command. gets() retrieves a string from stdin into a pointer you provide, without any way of limiting length or getting length, so it's a guaranteed buffer overflow bug you can't do anything about if you use the function. It's very, very old, and it stayed in the standard for decades because of backwards compatibility (C11 finally removed it). Every compiler and code-lint tool on earth will throw warnings at you if you use it, though. That's the solution here - if the foo -k option is fundamentally buggy or a security flaw, literally can't be made safe while doing the same thing, then you replace the functionality by adding in a new switch or whatever that does whatever similar useful thing that isn't a bug the original switch was intended for, you keep the buggy one in, and you make the program spit out warnings when it's used while still doing what the command does. Same way you would if your DLL exports a function that's fundamentally buggy or a security flaw.
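As a rough sketch of that keep-the-old-switch-but-warn approach, here is how it might look with Python's argparse. The tool name "foo", the "-k" switch and its "--safe-k" replacement are all hypothetical, made up purely for illustration.

```python
import argparse
import sys


def build_parser() -> argparse.ArgumentParser:
    # "foo", "-k" and "--safe-k" are hypothetical names used only for illustration.
    parser = argparse.ArgumentParser(prog="foo")
    parser.add_argument("-k", dest="legacy_k", action="store_true",
                        help="deprecated and fundamentally unsafe; use --safe-k")
    parser.add_argument("--safe-k", dest="safe_k", action="store_true",
                        help="safe replacement for -k")
    return parser


def run(argv: list[str]) -> str:
    args = build_parser().parse_args(argv)
    if args.legacy_k:
        # Old scripts keep working, but every run nags them on stderr.
        print("foo: warning: -k is unsafe and deprecated; use --safe-k",
              file=sys.stderr)
        return "legacy behaviour"
    if args.safe_k:
        return "safe behaviour"
    return "default behaviour"
```

An old script calling `run(["-k"])` still gets the old behaviour plus a warning on stderr, while new scripts can move to `run(["--safe-k"])` whenever they like.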

@j_p said:

Usually improvements come in the form of new features and options. Or new programs that support the interface of the old tool but do more with it - like flex and bison over lex and yacc (lex/flex generate lexers for a language from a regex-based description of the tokens in it, yacc/bison generate LALR parsers for a language based on a BNF-style grammar for it).

So you're saying:

1) The only way to improve a CLI program's interface is to write an entirely new CLI program

2) ... which must then support the exact same UI as the old one

Which sums up to: there is no way to improve a CLI program's interface. Which is, I believe, the exact argument I've been making.

'adding new features and options'. Or in the case of bison, supporting more classes of input than yacc. Those are improvements. To the interface.

@blakeyrat said:

@j_p said:

If you want to completely overhaul the interface, you make a new tool that does the same thing with a totally different interface, and old scripts will continue to work while the new tool has a fancy-pants new interface that scripts can start using.

Oh, so the CLI has a versioning system so the scripts can specify which version they were built for? That is sure a handy feature! Too bad you fucking made it up and it doesn't exist.

Either that, or you're saying the system needs to have literally every version of every CLI tool ever made on it simultaneously to avoid breaking old scripts. And if one of those tools uses the command "search" (even if it does useless crap, or nobody's used it in decades) you can never, ever, ever make a new "search" that works better because of the polluted namespace.

To be fair though: your idea here could actually be made to work, if: 1) there were some form of versioning system, 2) there were some enforcement that scripts were using a "version marker" or however you implement 1, 3) the app disk size were small enough that it's not a big deal. It still breaks in the case of changes due to security, as you have to change the older versions as well.

I didn't say anything about versioning. I said you write a new tool and let the old one die, and people will use whichever one they want. However many scripts do you think still reference cvs, or even rcs? Probably some. You can install rcs if you've got one of those scripts. But if you're most people and don't, then you don't run apt-get install cvs (your distro, of course, won't install it by default).

You know when I brought up DLL hell earlier? How, exactly, do you think that was solved? I'll give you a hint: in c:\windows\system32, I currently have d3dx9_24.dll through to d3dx9_43.dll. God only knows how many versions of the .net runtime I've got installed. How come the have-a-copy-of-every-version solution is fine for git.dll if it's not fine for git?

@blakeyrat said:

^- this is the key point that j_p wasn't getting.

You don't script "click the second radio button on radio button group 'Gender'", you script "set the gender to male". Now when the program adds a new gender (indeterminate), your script didn't break. Magic.

This is what happens when people actually *think* about the OS and how it should work and spend 75% of the time in the designing room and only 25% in the coding room. This is the difference between a professionally-designed OS and a pile of glommed-together shit.

'foo --gender=male' doesn't break if 'foo --gender=indeterminate' becomes an option either.
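To make that concrete, a minimal sketch using Python's argparse, assuming a hypothetical tool "foo" whose only option is --gender. The old and new parsers stand in for two releases of the same tool; nothing here is a real program.

```python
import argparse


def make_parser(genders: list[str]) -> argparse.ArgumentParser:
    # "foo" and its --gender option are hypothetical, for illustration only.
    parser = argparse.ArgumentParser(prog="foo")
    parser.add_argument("--gender", choices=genders, required=True)
    return parser


# Two releases of the same tool: the newer one accepts one more value.
old_tool = make_parser(["male", "female"])
new_tool = make_parser(["male", "female", "indeterminate"])

# The argument list an existing script would pass, written against the old release.
old_script = ["--gender=male"]

print(old_tool.parse_args(old_script).gender)
print(new_tool.parse_args(old_script).gender)
```

The script names the value it wants rather than its position in a list, so adding a value to the newer release leaves it alone.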

@blakeyrat said:

Mac programs were scriptable, because it would be stupid for them not to be. If you implemented AppleEvents, you got thousands of features from the OS *for free*. (It's the same argument for using native widgets as opposed to drawing your own.)

And all the accessibility features of Mac Classic, IIRC, were based on AppleEvents, so if you wanted to sell your application to the government you were required to implement it, basically. (How does Linux handle this, BTW? Do Linux GUIs even *have* accessibility features? Do they meet the government standards?)

But how scriptable? Does your text editor know what a paragraph is? The burden of implementing each verb is on the developer of each program, and I rather doubt the OS gives you much extra if you teach your program about concepts like that. The beauty of a CLI approach to scripting is that it's the default. It doesn't require any effort. It's not an inducement to make your program scriptable. It's so much of a default you can't even opt out.

I haven't used Linux as a desktop OS for a few years now, and I've never needed accessibility features, but they definitely do have them. What mechanisms they use or whether they meet certain government standards, I couldn't say.

That's true of AppleEvents also. Unless, again, the developer of the software expended MORE effort to make his program WORSE. But you can't control for that.

Is my model for this right? At the moment I'm picturing the design as:

- All input events to the program are AppleEvents

- The program can post itself AppleEvents it constructed and defined itself (Presumably it's got some way of informing the windowing system that the AppleEvent that triggered it needn't be recorded)

- An AppleEvent represents an action of some kind - a verb - with various other attributes

- You perform actions solely by responding to AppleEvents - every GUI interaction just posts AppleEvents to the relevant queue or acts on an AppleEvent

- Programs have a metadata table they export specifying what verbs they support and what parameters they need.

Because the only-perform-actions-by-responding-to-AppleEvents aspect seems to me like it requires more work from developers, not less. And if you don't have that, then you've got actions that might not be readily scriptable (for example, because they occurred in response to a mousepress event, and don't have a nice name as a result). And features Daniel was discussing above, like saying "set the first word of every paragraph to red", require a fair amount of effort from the program to understand 'word' and 'paragraph', work that only really benefits scripting. And I can see custom controls wreaking hell on that design if they're not very carefully designed.
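For what it's worth, the model I'm picturing looks roughly like the toy below. To be loud about it: this is not the AppleEvents API, which I haven't used. Every name in it (ScriptableApp, export, post, the "set gender" verb) is invented to illustrate the verbs-plus-metadata-table idea.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ScriptableApp:
    # Hypothetical model only: verb name -> (parameter names, handler).
    verbs: dict[str, tuple[tuple[str, ...], Callable[..., str]]] = field(default_factory=dict)
    # Every posted event is logged, which is what a script recorder would read back.
    recorded: list[tuple[str, dict]] = field(default_factory=list)

    def export(self, verb: str, params: list[str], handler: Callable[..., str]) -> None:
        self.verbs[verb] = (tuple(params), handler)

    def describe(self) -> dict[str, tuple[str, ...]]:
        # The "metadata table": which verbs exist and which parameters they need.
        return {verb: params for verb, (params, _) in self.verbs.items()}

    def post(self, verb: str, **kwargs) -> str:
        params, handler = self.verbs[verb]
        missing = [p for p in params if p not in kwargs]
        if missing:
            raise TypeError(f"{verb} is missing parameters: {missing}")
        self.recorded.append((verb, kwargs))
        return handler(**kwargs)


app = ScriptableApp()
app.export("set gender", ["value"], lambda value: f"gender is now {value}")

# A radio-button click and a script would both end up posting the same verb.
result = app.post("set gender", value="male")
```

The extra work I'm worried about is visible even here: somebody has to write an `export` call and a handler for every action the GUI can take.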

@blakeyrat said:

Of course! That might make it too easy to use! And we don't want that rabble on our precious computers! We only want super geeky people who love typing bullshit in 80x25 text windows!

You hate it *because* Apple endeavored to make it accessible to everybody. Fuck that attitude. Computers are for human beings. Apple understood that. Ubuntu gives it lip-service. You obviously still have some issues with the concept.

If that's the only reason you think I might dislike natural language programming, you're more nuts than I thought. Natural-language environments inevitably aren't. Apple didn't solve general language parsing a decade ago. Usually you end up with a programming language where the keywords are all English, you can insert words like 'the' anywhere you want, and the syntax is a little flexible. Meanwhile, the language is weird to use and stilted because it tries to obey rules evolved for conveying information to a creature capable of inference with a great deal of context, rather than specifying processes for a pretty dumb machine with very little context, /and/ the language is close enough to natural language that all your natural-language-skills come into play and actively get in the way. I don't like natural language programming for the same reason mathematicians don't write proofs in natural language.

Yeah there is: CLI developers hate discoverability, they hate usability, and they hate change.

Gasp! You've discovered my secret plan, as occasional user of a CLI, to make all software everywhere worse!

But you can't open two shells at once without a GUI!

And in any case, if you think "man" pages are good documentation, you and I will never agree on anything ever.

Errr... you've never actually used a linux system, have you? Basically every shell since god-knows-how-long has keystrokes for shifting between multiple terminals. CTRL-ALT-F-number is pretty common, IIRC. Often your GUI session is just running in one of those terminals, allowing things like restarting the GUI without restarting the computer (want to change some low-level config options or your graphics driver, X crashed, whatever reason). I wouldn't be surprised if some or all of them support having more than one of them on screen at once, in different columns or something.

And, y'know, I've never said GUIs are useless and awful and shouldn't exist. They're damned useful for a bunch of tasks. Even most tasks. I just think that textual input and the CLI is also useful for different sets of features and tasks, and that wrapping a GUI shell over a CLI engine is a good way of designing some programs.

The man pages aren't intended as documentation (often that's kept in 'info', or sometimes in your distro's native help system), they're a reference for people who already know roughly what they're trying to do. You don't learn how to drive grep by reading its man page, because it would need to teach you regular expressions first. You check man when you go "crap, does the pattern or the directory to search come first in grep? And is there an option to search text inside files instead of the file name?". You know, the same way inspection in IDEs doesn't provide the full documentation for the method you're pointing it at?

And how do you view diffs of a 3D model using Git, which only ships a CLI interface?

Christ, blakey, git doesn't do viewing diffs. It really doesn't. It calls out to other programs to do it. If you tell git "use cad-diff on files of the form *.cad" then when you get diffs for foo.cad it'll run cad-diff and ask it to display them. Whether that opens a 3D window or does ncurses-based text graphics or some fancy image processing to say "the other one's got more blocks in it" is up to that program. It's not a shitload of work - it's just coming up with a way of sensibly talking about differences for the things you're diffing. Hopefully the person who makes your CAD software already has a similar tool, because they'd be in the best place to create one. A GUI-based git would have the same problem, because they're not going to write a diff program for every possible filetype in the world.
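Here's a sketch of the dispatch idea in Python, in case it helps. This is not git's actual code or configuration format. The handler table, the "cad-diff" program and the fallback command are stand-ins I've made up to show the shape of it.

```python
from fnmatch import fnmatch

# Hypothetical registry: filename pattern -> external program to run.
# "cad-diff" is the made-up tool from the paragraph above.
DIFF_HANDLERS = [
    ("*.cad", ["cad-diff"]),
]

# Fallback when no pattern matches: an ordinary text diff.
BUILTIN_TEXT_DIFF = ["diff", "-u"]


def diff_command(old_path: str, new_path: str) -> list[str]:
    """Pick the command line that would display differences between two files."""
    for pattern, command in DIFF_HANDLERS:
        if fnmatch(new_path, pattern):
            return command + [old_path, new_path]
    return BUILTIN_TEXT_DIFF + [old_path, new_path]
```

The version control tool only decides which program to launch. What that program then puts on screen, 3D window or otherwise, is entirely its own business, and a GUI front end would call the same lookup.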

I don't think you actually know anything about git's development. What happened was that the SCM that had been used for the linux kernel up until then (BitKeeper) withdrew the free license it had offered the Linux kernel devs, because someone reverse-engineered their protocols. So they needed a new SCM. Linus looked at the stuff available at the time (2005), decided that none of them met the needs of linux kernel dev, so they wrote their own. Specifically, it had to be fast to merge patches, it had to be guaranteed atomic, it had to allow for distributed version control (which was /new/ back then, keep in mind), and it should ideally use cvs as the model of what not to do. They needed it fast. So they wrote it fast (it was self-hosting in a /day/), and hey, they met their design goals. So they released it to the public because a) the public needed it to get the linux repository and b) if it was useful to them it might be useful to other people. And hell, if they were crowing, maybe it's because they'd made a ludicrously fast source control system in a few months?

I don't even want to know what you'd consider a complete source control system to look like if you think git is only 1/10th complete because you have to type things. I guess the existence of GUI wrappers like TortoiseGit doesn't help?

The help command is named "apropos"? DISCOVERABILITY!

'apropos' is a semi-standard alias for man -k - that is, search the man page names and descriptions for a string. So if you want to search but don't know what the command is, 'apropos search' will probably find it. Not so much a help command as a "What was the program again?" command. I thought you were literate, blakey - haven't you ever run into the word before? Regardless, it's almost certainly a command that's brought up in any kind of in-system help, 'man' on its own tells you about man -k which does the same thing, and most shells have a 'help' command. Yes, this isn't as discoverable as a big friendly window with buttons. That's the tradeoff for providing a readily composable and scriptable environment to do things in. Again, you're probably not running bash or equivalent exclusively unless you really have to.

-

Apple Events are the message-passing IPC (inter-process communication) system for Mac OS/OS X. It's how programs talk to each other, locally and (someone must have used this feature at some point) over a network, and how the operating system delivers high-level messages such as instructions to open or print documents, or quit. It's like a binary version of D-Bus, as unlike RPC/RMI, you're not calling real functions (although allegedly, with OS X and Cocoa you can now do that); rather, programs simply inspect the message's ID codes to determine what to do.

For example, when you select a bunch of files in the Finder and double-click one of them, the Finder creates an 'odoc' Apple Event message, and inserts a complete list of files into the message. The receiving program is handed all the file details at once, so you don't get the Windows vicious mutex dance. An extra event type of 'gURL' was introduced at some stage to pass URLs to a program. Suite codes are provided for namespace purposes.
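A toy model of that inspect-the-ID-code style of dispatch, written in Python. This is emphatically not the real Apple Events API: the Event and Editor classes are invented for illustration, and only the 'odoc' code comes from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    # Hypothetical stand-in for a message: an ID code plus its payload.
    event_id: str
    payload: list[str] = field(default_factory=list)


class Editor:
    """Hypothetical receiving program that dispatches on the message's ID code."""

    def __init__(self) -> None:
        self.opened: list[str] = []
        self.handlers = {"odoc": self.open_documents}

    def open_documents(self, paths: list[str]) -> None:
        # The whole file list arrives in one message, not one launch per file.
        self.opened.extend(paths)

    def receive(self, event: Event) -> bool:
        handler = self.handlers.get(event.event_id)
        if handler is None:
            return False  # unknown ID code: the program simply doesn't handle it
        handler(event.payload)
        return True
```

Sending `Event("odoc", ["a.txt", "b.txt"])` to an `Editor` hands it both paths in a single call, which is the point about not needing a mutex dance.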

If you have a text editor that has no idea what a paragraph is, the operating system's IPC mechanism is the least of your worries.

Obviously I'm not going to go into extensive detail of Apple Events/AppleScript/OSA/OSAX etc in a forum topic. Essentially I'm just making you and others aware that ideas and alternatives exist, and did exist, that may not be present on the operating systems that you're familiar with.

It should also be fairly apparent to you that none of Apple's decisions, past, present or future, are binding on any other system; you're not expected to bow down to the shrine of Jobs, but rather, learn from both the strengths and the weaknesses of other systems, past and present. For example, D-Bus is seemingly a completely independent invention along similar lines, that takes a different approach to solving the problem of soft-coded message-passing IPC.

BTW, Apple Event functions and methods use named parameters, which back in the 90s at least, had to be specified in the correct order. You could omit non-applicable parameters, but not specify them in the wrong order. That restriction aside, they're a lot like command line parameters.

-

@blakeyrat said:

And how do you view diffs of a 3D model using Git, which only ships a CLI interface?

Every OS I've used, that had a CLI, is perfectly capable of launching a graphical program from said CLI. That includes Linux, MacOS X, every version of Windows I've touched (3.1, 95, 98, 2000, XP, S2K3, 7, S2K8).

@blakeyrat said:

I get what you're saying: the internals do support it if someone else does a shitload of work. But that shitload of work also includes building a whole new UI for Git. If Git was a complete product, it'd already have a GUI and that work wouldn't be nearly as arduous.

Ever heard of Git Gui? Try it. You can either go to a command line and type "git gui" or, if you've installed the official Git install, Start->All Programs->Git->Git Gui.

-

@blakeyrat said:

@j_p said:

Seriously? I knew you were wilfully ignorant Blakey, but that's just taking the fucking piss.I think you're underestimating exactly how easy it is to preserve backwards compatibility in a CLI interface. You've got a tool that does something to input. What 'tool some input here' does is unlikely to need to change to improve the UI. When you start adding options the same thing happens - why would you need to change the behaviour of grep's recurse option?

I don't know what grep's recurse option does,[...]
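To make the backwards-compatibility point concrete, here's a minimal sketch in Python. The tool name `findtext` and its flags are made up for illustration; this is not how grep itself is implemented. The idea is only that a new option with a default of "off" leaves every old command line meaning what it meant before.

```python
import argparse

def build_parser():
    """Parser for a made-up, grep-like tool called 'findtext'."""
    parser = argparse.ArgumentParser(prog="findtext")
    parser.add_argument("pattern")
    parser.add_argument("paths", nargs="+")
    # Added in a hypothetical later release. It defaults to off, so command
    # lines written before the flag existed still parse to the old behaviour.
    parser.add_argument("-r", "--recursive", action="store_true")
    return parser

# A command line written before the flag existed still parses the same way.
old_style = build_parser().parse_args(["TODO", "notes.txt"])

# A newer command line opts in explicitly.
new_style = build_parser().parse_args(["-r", "TODO", "src"])
```

Scripts that never mention `-r` keep working untouched, which is roughly the argument being made about grep's recurse option.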

-

@dhromed said:

@j_p said:

Is it a totally awful IDE?

Yes. VS is coder-hostile.

It's actually one of the better IDEs I've used - keeping in mind that I mostly work in C++. Eclipse has been essentially awful every time I've wrestled with it, dev-cpp is out of date and was pretty bad when it was in-date. Haven't tried Code::Blocks, not sure what other IDEs I'm missing. I've found that VS was pretty much stable, the text editing features mostly did the right thing (horrendous tab fuckup aside), and setting up projects was intuitive. The debugging features are excellent. My big issues with it have always been a succession of minor UI niggles, the total lack of C99 support in the compiler, and that the inspection/hinting features only ever worked half the time (and most of the crashes that did happen were because the Intellisense database got corrupted somehow). To be entirely fair on that point, there was some occasional interesting preprocessor screwery going on.

Basically what I'm looking for in a C++ IDE or toolset:

- Really good debugging features.

- Really good profiler (the tools packaged with VS are okay, but require serious attention to understand).

- Functional text editor with tabs that doesn't get in my way and actually does tabs right.

- Hinting features are nice, but only if they're reliable.

-

@j_p said:

Functional text editor

I am still looking, as part of an IDE, since, DEAR LORD ALMIGHTY, programming somehow involves typing lots of text. Wow. Who'dathunk.

Small fry like Editplus beat up VS's text editor any day. Unfortunately, Editplus has no IDE-features and no real understanding of the code so I can't use it for C# development. :'(

I think MS is willfully slacking on making VS a supreme text editor because it has a complete and robust set of all the other features you need for large software projects so who gives a shit people will use it anyway.

I has a sad because of that.

-

@j_p said:

Surely blakey can at least remember the /names/ of the programs he's using.

Unfortunately, names of executables aren't namespaced (though they can be hungaria...noted?), so for infrequently used programs you either know the exact first letters of the name or you'll have to fish for it in the larger sea of $PATH.

There's always man -k, of course. And the internet.

@j_p said:

And, of course, there's no reason you couldn't make an inspection mechanism for a CLI.

Wait, am I the one who's been assigned to do this? Dammit, I'm not very good at implementing compilers/linkers. Can't we get someone else to do it?

@boomzilla said:

Bash, at least, has autocomplete with parameters.

To be pedantic, the bash-completion package is what gives it this ability, because to my understanding bash can only be told about completion options rather than find them out by itself. The thing is, argument completion is more free-form and can fetch completions from more diverse sources than simple function calls, so it has to be done on a program-by-program basis.

Bash completion is wonderful; but it's not automatic like Intellisense, where the IDE has direct access to metadata about function signatures, expression value types and symbol names.
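A rough way to picture the difference, as a Python sketch rather than anything bash or Visual Studio actually does internally: one completer only knows what somebody wrote into a table, the other reads names straight off a function's signature. The command `frobnicate`, its flags, and both helper functions are made up for illustration.

```python
import inspect

# Hand-maintained table, in the spirit of registering completions per
# program: nothing here is discovered, someone had to write it down.
# The command name and flags are made up for illustration.
REGISTERED = {
    "frobnicate": ["--input", "--output", "--verbose"],
}

def complete_registered(command, prefix):
    """Complete from whatever the table was told about, and nothing else."""
    return [opt for opt in REGISTERED.get(command, []) if opt.startswith(prefix)]

def frobnicate(input_path, output_path, verbose=False):
    """Stand-in for a function whose signature a tool can inspect."""
    return (input_path, output_path, verbose)

def complete_introspected(func, prefix):
    """Complete by reading parameter names off the function itself."""
    return [name for name in inspect.signature(func).parameters
            if name.startswith(prefix)]
```

With this, `complete_registered("frobnicate", "--o")` gives `["--output"]`, but an unregistered command gives nothing at all, while `complete_introspected(frobnicate, "o")` gives `["output_path"]` without anyone having listed it anywhere.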

@boomzilla said:

This is what I do too.

Many (most?) applications that display a console / terminal / CLI have tabs where you can have multiple consoles going. I use Konsole, and it's very easy to move to a different tab and pull up the man page for something.

@blakeyrat said:

But you can't open two shells at once without a GUI!

There's GNU screen. I've been a happy user for a part of my life.

@dhromed said:

VS is coder-hostile.

You're just mad at it because it points out all your grammar mistakes.

-

@Zecc said:

You're just mad at it because it points out all your grammar mistakes.

I never make grammar mistakes.

-

@Zecc said:

@boomzilla said:

Bash, at least, has autocomplete with parameters.

To be pedantic, the bash-completion package is what gives it this ability, because to my understanding bash can only be told about completion options rather than find them out by itself. The thing is, argument completion is more free-form and can fetch completions from more diverse sources than simple function calls, so it has to be done on a program-by-program basis.

Bash completion is wonderful; but it's not automatic like Intellisense, where the IDE has direct access to metadata about function signatures, expression value types and symbol names.

Yes, I'm aware of how it works. The point was that if you open a modern version of bash, you get all that. I don't think it's so different from Intellisense in this manner, either. Does Intellisense work for some random programming language that isn't supported by VS?

Certainly, by blakey's typical standard, it's automatic, because it's just there for the user and he doesn't have to do anything or even know how it works.

-

@boomzilla said:

But blakeyrat doesn't use random programming languages that aren't supported by VS unless he has to. When he does have to, he complains loudly here about it. So it is either automatic, or the language in question is unusable by humans.

Does Intellisense work for some random programming language that isn't supported by VS?

Certainly, by blakey's typical standard, it's automatic, because it's just there for the user and he doesn't have to do anything or even know how it works.

-

@blakeyrat said:

@j_p said:

I'm not so crash-hot on natural language as the descriptor either.

Of course! That might make it too easy to use! And we don't want that rabble on our precious computers! We only want super geeky people who love typing bullshit in 80x25 text windows!

You hate it *because* Apple endeavored to make it accessible to everybody. Fuck that attitude. Computers are for human beings. Apple understood that. Ubuntu gives it lip-service. You obviously still have some issues with the concept.

I've forgotten the source, but I recall an amusing story (unlikely to be true, but proves a point):

Some researchers were demoing a remarkable piece of software. It was a database of all the world's geographical data, but even more impressively, it could respond to queries given in plain English.

So, the first user walks up and he types in, "what is the tallest mountain in Europe and Africa?" To which the machine replies, "there are no mountains in Europe and Africa."

Before your objection: relevance — English (and really all native human languages) is a terrible language for specifying technical requirements. It has too many ambiguities, relies too much on context, is too interpretive, you have problems like idioms, homographs, homophones, and homonyms. Humans have the remarkable skill of figuring out what someone meant even though it very frequently differs from what they said.

Our example above, the user said "and" but meant "or." Should the computer try to second-guess the user? What if the user actually meant it literally? Is it in any way possible to tell?

Now what about in cases where getting the command wrong can result in undesirable effects, such as data loss? The user said to format this disc, so I did; how was I supposed to know he wanted me to fix the whitespace in his documents?

Computer languages exist as such because they can unambiguously express intent. The programmer still can (and occasionally does) screw up the command, but at least every command has exactly one way it can be interpreted. Edit: except for C++ because C++ is a shitty language
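The "and" versus "or" problem from the mountain story is easy to show in code. This is only a toy sketch in Python: the data set, the approximate heights, and the function names are all invented for illustration, and it has nothing to do with whatever the software in the anecdote really did.

```python
# Hypothetical toy data: region -> {mountain: approximate height in metres}.
MOUNTAINS = {
    "Europe": {"Elbrus": 5642, "Mont Blanc": 4806},
    "Africa": {"Kilimanjaro": 5895, "Mount Kenya": 5199},
}

def tallest(candidates):
    """Return the name of the tallest mountain, or None if there are none."""
    return max(candidates, key=candidates.get) if candidates else None

def in_all(regions):
    """Literal "and": mountains located in every listed region at once."""
    names = set.intersection(*(set(MOUNTAINS[r]) for r in regions))
    return {n: MOUNTAINS[regions[0]][n] for n in names}

def in_any(regions):
    """Intended "or": mountains located in at least one listed region."""
    merged = {}
    for r in regions:
        merged.update(MOUNTAINS[r])
    return merged

literal = tallest(in_all(["Europe", "Africa"]))   # what the user typed
intended = tallest(in_any(["Europe", "Africa"]))  # what the user meant
```

Here `literal` comes out as `None` (no mountain is in both regions) and `intended` comes out as `"Kilimanjaro"`. Both functions are perfectly unambiguous; the ambiguity lives entirely in which one the English sentence was supposed to map to.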

-

@joe.edwards said:

Some researchers were demoing a remarkable piece of software. It was a database of all the world's geographical data, but even more impressively, it could respond to queries given in plain English.

Ok...

@joe.edwards said:

So, the first user walks up and he types in, "what is the tallest mountain in Europe and Africa?" To which the machine replies, "there are no mountains in Europe and Africa."

So it can't respond in plain English.

@joe.edwards said:

Before your objection: relevance — English (and really all native human languages) is a terrible language for specifying technical requirements. It has too many ambiguities, relies too much on context, is too interpretive, you have problems like idioms, homographs, homophones, and homonyms. Humans have the remarkable skill of figuring out what someone meant even though it very frequently differs from what they said.

Our example above, the user said "and" but meant "or." Should the computer try to second-guess the user? What if the user actually meant it literally? Is it in any way possible to tell?

Now what about in cases where getting the command wrong can result in undesirable effects, such as data loss? The user said to format this disc, so I did; how was I supposed to know he wanted me to fix the whitespace in his documents?

Computer languages exist as such because they can unambiguously express intent. The programmer still can (and occasionally does) screw up the command, but at least every command has exactly one way it can be interpreted.

Yada, yada, none of this changes the fact that some languages are more usable than others, and HyperTalk was very, very usable.

-

@joe.edwards said:

Edit: except for C++ because C++ is a shitty language

In practice, many C implementations recognize, for example, #pragma once as a rough equivalent of #include guards — but GCC 1.17, upon finding a #pragma directive, would instead attempt to launch commonly distributed Unix games such as NetHack and Rogue, or start Emacs running a simulation of the Towers of Hanoi.[7]

Fuck. And people ask me why I started to hate open source.