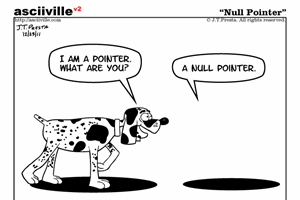

NULL: the worst mistake of computer science

-

####What is wrong with NULL?

The short answer: NULL is a value that is not a value. And that’s a problem.

It has festered in the most popular languages of all time and is now known by many names: NULL, nil, null, None, Nothing, Nil, nullptr. Each language has its own nuances.

Some of the problems caused by NULL apply only to a particular language, while others are universal; a few are simply different facets of a single issue.

NULL…

subverts types

is sloppy

is a special case

makes poor APIs

exacerbates poor language decisions

is difficult to debug

is non-composableHe then goes on to elaborate on each point. All good arguments on why

nullcreates problems.He then goes on to propose the solution:

NULL has become so pervasive that many just assume that it’s necessary. We’ve had it for so long in so many low- and high-level languages, it seems essential, like integer arithmetic or I/O.

Not so! You can have an entire programming language without NULL. The problem with NULL is that it is a non-value value, a sentinel, a special case that was lumped in with everything else.

Instead, we need an entity that contains information about (1) whether it contains a value and (2) the contained value, if it exists. And it should be able to “contain” any type. This is the idea of Haskell’s Maybe, Java’s Optional, Swift’s Optional, etc.

Basically, his nirvana is something like C#'s

Nullable<T>container.Meh. Too complicated for my taste. No one's gonna use that in its current verbose form.

I guess unless someone comes up with a better Nullable syntax, null is here to stay for the time being, despite all its faults.

-

Meh. Too verbose for my taste. No one's gonna use that in its current verbose form.

I quite like annotating a variable as being known nullable or guaranteed non-null, and then having the compiler enforce that. I've found a number of evil bugs in my code from doing this…

-

I quite like annotating a variable as being known nullable or guaranteed non-null, and then having the compiler enforce that. I've found a number of evil bugs in my code from doing this…

That would be sweet.

R - my current learning target - does no such thing.

NULL is at best a simple placeholder for many subtler ideas....

I think NULL will only go away when missing data goes away.

-

I quite like annotating a variable as being known nullable or guaranteed non-null,

C++ is actually quite nice for doing this. All my function parameters are pointers if they are known to be nullable (optional parameters, basically), or references to guarantee they are non-null. I haven't forgotten to check for null in a long time.

-

I'm not much of a dev, so correct me if I'm wrong:

Is he saying "the problem isn't developers writing code incorrectly, it's the language itself!!1"?

-

Instead, we need an entity that contains information about (1) whether it contains a value and (2) the contained value, if it exists. And it should be able to “contain” any type.

-

@cartman82 said:

Meh. Too verbose for my taste. No one's gonna use that in its current verbose form.

I quite like annotating a variable as being known nullable or guaranteed non-null, and then having the compiler enforce that. I've found a number of evil bugs in my code from doing this…

Yeah, that's really nice. With IntelliJ it will tell you when you might be passing in a null to a @NotNull method too.

-

... what does he propose relational databases use instead?

EDIT: he doesn't even ONCE mention SQL, and there's just a comment in the comments section saying, "hey, yo, buddy, this is a really stupid topic to discuss without once mentioning SQL."

-

... what does he propose relational databases use instead?

I remember someone on WTDWTF advocating getting rid off NULLs from databases as well. We had a big argument and everything.

-

I remember several conversations about the demented way Oracle handles null strings, but I don't recall any discussion about getting rid of NULL in databases altogether.

I mean. It could be done, but then what do you use to, for example, represent that a record from only one table is present when a LEFT JOIN is used? If you get rid of NULL you have to either have some incredibly clever solution for this, or you need to get rid of the concept outer joins altogether, which is really throwing a lot of really good stuff right in the toilet.

-

ChristianNeumanns has a point:

-

NULL is a value that is not a value. And that’s a problem.

Got as far as that and stopped reading /taking it seriously. Why?

Zero is a number that is not a number. And that's a problem

Filed under:

-

I mean. It could be done, but then what do you use to, for example, represent that a record from only one table is present when a LEFT JOIN is used? If you get rid of NULL you have to either have some incredibly clever solution for this, or you need to get rid of the concept outer joins altogether, which is really throwing a lot of really good stuff right in the toilet.

Yeah that was it.

The problem is that, as you do outer join, the NULLS you get are ambiguous. You have no idea whether the NULL is from the tuple in the joined table, or from there not being a tuple to join with.

And the solution is, of course, to go the javascipt route and have two NULL-like types, instead of zero.

-

The problem is that, as you do outer join, the NULLS you get are ambiguous. You have no idea whether the NULL is from the touple in the joined table, or from there not being a touple to join with.

I need you to elaborate on this, and also spell "tuple" correctly.

Do you mean a scenario when you have:

FOOLEFT JOINBARLEFT JOINBAZWhere

BARjoins to a value inFOO, andBAZjoins to a value inBAR? Therefore ifBAZis null, you aren't certain whether it's because theFOOhas noBAR, or there's noBARto join aBAZto?If my guess is correct, follow-up question: is there any circumstance in which that matters?

Still, the real point is: the fact that SQL is missing from that article makes it amazingly fucking useless. And also makes me wonder what this guy codes-- video games? Even video games, except cheap indies, usually have databases, though...

-

-

Do you mean a scenario when you have:

FOO LEFT JOIN BAR LEFT JOIN BAZ

Where BAR joins to a value in FOO, and BAZ joins to a value in BAR? Therefore if BAZ is null, you aren't certain whether it's because the FOO has no BAR, or there's no BAR to join a BAZ to?

If my guess is correct, follow-up question: is there any circumstance in which that matters?

SELECT * FROM fooid | bar_id ------------ 1 | 'A' 2 | 'B' 3 | NULLSELECT * FROM barid | value ---------------------- 'A' | 'Value exists' 'B' | NULLSELECT foo.id, bar.value FROM foo LEFT JOIN bar ON foo.bar_id = bar.idfoo.id | bar.value -------------------- 1 | 'Value exists' 2 | NULL 3 | NULLIn the final result set, there are two NULL-s. One is from the

valuefield of the joined tuple frombar. The other is from there not being a tuple to JOIN with id 3. You can't tell them apart. BecauseNULLis used for both occasions.It's a similar problem to the "4. NULL makes poor APIs" section from the OP. The solution is, IMO, to have two types of NULL instead of having some kind of special verbose interface like

Nullable<T>(which wouldn't work in SQL anyway).

-

In the final result set, there are two NULL-s. One is from the value field of the joined tuple from bar. The other is from there not being a tuple to JOIN with id 3. You can't tell them apart. Because NULL is used for both occasions.

Ok, but who cares? How would knowing why it's NULL help you in any way?

-

Ok, but who cares? How would knowing why it's NULL help you in any way?

Err... yeah? You lose information. That's a pretty big deal when working with an information storage system.

Sure you can work around it (eg. do two queries or replace one type of NULL with a placeholder value), but you shouldn't have to.

-

Err... yeah? You lose information.

But if the information you "lose" useful in any way? You keep dodging the question; either answer it or send me all the moneys.

Sure you can work around it (eg. do two queries or replace one type of NULL with a placeholder value), but you shouldn't have to.

Why would you ever want to?

-

The solution there is actually just to add a

NOT NULLconstraint tobar.value- either it exists, or it doesn't. If you want to track that it existed at some point, record the changes into another table (or use a data storage solution designed to handle such things).

-

Select foo.id, bar.id, bar.value?

-

But if the information you "lose" useful in any way? You keep dodging the question; either answer it or send me all the moneys.

Sigh. Fuck it. Here are your monkeys. Hope you're happy.

-

Blakeyrat wins again.

-

-

Do you mean with this, if the second monkey doesn't shit, whether that's due to the first or the second monkey's constipation?

-

On the original topic:

a) C# nullables already do have pretty good sugar, don't they? Something like

T?as shorthand forNullable<T>? Between that and Elvis operator, C# option syntax is already pretty good. The problem C# has is they can't remove nullable-by-default from reference types without breaking everything.

b) The problem isn't null itself, it's that every single variable could be null. Do you honestlywant tocheck every argument to every function you ever write?

c) There is a better syntax already, but it's not in widespread use yet. Enumerated types ala Rust (and some other languages that are hardly worth mentioning 🍨) are the way of the future.Filed under: pattern-matching syntax is kind of headache-inducing though

-

You lose information.

If you wanted that information kept, you wouldn't have asked for that type of join.

-

[quote="

Paul Draper"]

What is wrong with NULL?

The short answer: NULL is a value that is not a value. And that’s a problem.[/quote]

This sounds like a problem for...

###NaNNaNNaNNaNNaNNaNNaNNaNNaNNaNNaNNaNNaNNaNNaN

#Batman!

-

@cartman82 said:

You lose information.

If you wanted that information kept, you wouldn't have asked for that type of join.

Yeah, it's a bit like: "Oh, converting from

floattointwill lose information!"In this case one didn't need the information to begin with or you'd have made the column NOT NULL.

-

The problem is that, as you do outer join, the NULLS you get are ambiguous. You have no idea whether the NULL is from the tuple in the joined table, or from there not being a tuple to join with.

And the solution is, of course, to go the javascipt route and have two NULL-like types, instead of zero.

Or you could just check the primary key of the joined table, which is not allowed to be NULL.

-

Or, we could simply give the null object a counter that indicates how many times it has been passed on.

Filed under: The Evil Ideas Thread is

-

ChristianNeumanns has a point:

I read both articles today and I find myself siding with Neumanns (on most points).

Take

nullaway from a language and replace it with something like Java'sOptional<T>, and not much changes:- Still need to consciously use it.

- Still need to check if it has a value.

- Still throws an exception if misused.

I'll admit, it is a better way to show that your function returns (or accepts) 'possibly nothing', than comments.

Or am I missing a glaringly obvious use case forOptionalwhere it really shines?

-

The problem with not having nulls is that most programmers also want a way to declare a variable without initializing it.

You can force people to write a default initializer, but in cases you want a proper object and not an empty one, you'd still need to write checks - so congratulations, you just reinvented nulls, except ten times as fragile, since it doesn't throw right away.

You can force a function declaration not to accept nulls, but the compiler has no way to verify with 100 percent certainity that a variable has been initialized. So that's kinda out too.

Finally, you can force people to initialize on declaration, and either condemn them to ternary hell, or have them construct empty throwaway objects. Or rather, whole object trees, since the internal fields can't be empty either.

So yeah, nulls might not be too great, but I think it's the best we can do.

-

Take null away from a language and replace it with something like Java's Optional<T>, and not much changes:

Still need to consciously use it.

Still need to check if it has a value.

Still throws an exception if misused.So having the error show up at compile time rather than run time has no value?

-

Never mind the compile time error -- you can reason about "optional" values by looking at the source code and realizing that a value can be 'nothing' instead of having to read documentation to realize that it could be 'null'.

This makes your life easier.

data Maybe a = Just a | Nothing thing :: Maybe Object thing = ... queryWrittenAgainstThing :: Maybe Object -> Bool queryWrittenAgainstThing (Just thing) = ... queryWrittenAgainstThing Nothing = ...In other words, 'null' is an opaque error you have to actively guard against, in nearly all circumstances, while 'nothing' is just a possibility when you have a 'Maybe' type.

-

It was my understanding that the biggest advantage of Haskell's

Maybemonad is that it forces you to address to case of missing data - anything that has a possibleNothingstate must have a case analysis that handles that case. Not having used Haskell much, I am uncertain if I have that right, though.

-

Almost. It throws a warning if you miss a case. You can force the compiler to throw errors for warnings, but it's not fine-grained enough to just throw errors for missing cases.

That said, the bare fact that the maybe-ness is right there in the source code increases your productivity, since you know that everything that isn't a maybe is never

null.

-

the compiler has no way to verify with 100 percent certainity that a variable has been initialized

That's an entirely wrong assertion when it comes to local variables.

You can also do it for globals/instance-/class-variables, though I'm not quite as familiar with the analysis required.

You can also do it for globals/instance-/class-variables, though I'm not quite as familiar with the analysis required.

-

int x; if (very_difficult_problem(constant_input)) { x = 42; }I dunno. Maybe it would work, but it would either require some insanely powerful static analysis tools, or the compiler to chew you off on missing cases that are impossible anyway.

-

That's an easy case, actually. It would chew you off for not defining it on all code paths. It doesn't try to second guess whether a boolean test passes (except for the most utterly obvious cases) but just assumes that it's sometimes true and sometimes false. Static analysis doesn't view things in quite the same way as programmers normally do. :)

-

True; but I can certainly debate that C# (for example) shouldn't make object types nullable by default. (Which, incidentally, is being rectified in the next major .Net update, from what I understand.)

-

Why is county null in this join?

Select p.addressId, CASE WHEN a.addressId IS NULL THEN False ELSE True END as DoesAddressRecordExist, a.county from person p join address a where p.addressId = a.addressId

Bam, now you know the difference.

-

You can force a function declaration not to accept nulls, but the compiler has no way to verify with 100 percent certainity that a variable has been initialized. So that's kinda out too.

It doesn't need to. Like dkf mentioned:

That's an easy case, actually. It would chew you off for not defining it on all code paths.

Generally speaking it works that way, because static analysis tools mostly operate on the level of preconditions and postconditions. They're not interested in what happens inside a method, as much as they are interested in what a method describes must go into it and what a method describes as guaranteed to come out of it.

Good static analyzers can infer missing conditions based on pre- and postconditions from other methods and can handle simple branching logic as well, giving you the illusion that they're quite a bit more intelligent than they reeally are (.NET Data contracts are quite amazing at this, actually, especially for a free solution) but in the end it still boils down to 'all logical branches must fullfill all guarantees needed by the (inferred or not) post-conditions.

-

That's not where it's annoying. Where it's annoying is:

bool x = some_external_value; int y; if( x ) { y = 42; } /* some code which does not touch x or y */ if( x ) { printf( "%d\n", y ); }

-

CASE WHEN a.address IS NULL THEN False ELSE True END

Some kind of allergy to

Some kind of allergy to IS NOT NULLhere?(And you say nothing explicitly about

countyso I've no idea even which table it's coming from. I'm not going to play random guess and debug the schema for you.)

-

If county is NULL, you check the other flag in the query to see if the address record exists.

Point being, there are ways to setup a join to where you can tell if the values are null because the outerjoin produced null values, vs. the record existing and having null values.

-

Does that matter? You aren't getting the value either way.

-

I was entertaining cartman's concern that you can't tell the difference in an outer join.

But, you can, if you setup the query to tell you that.

I'm not taking it any more seriously than.

"I wonder if you can do that."

-

Of course you can, you just wouldn't.

-

If county is NULL, you check the other flag in the query to see if the address record exists.

Why does it matter? With that join, you are asking for a result set where each result is a tuple that might (or might not) have information about the county associated with a person's address. For the uses where a join makes sense, worrying about why the county is NULL indicates a dedication to Doing Things The Discourse Way.

SQL gives you what you actually ask for, not what you think you asked for. Pesky computers, doing what they're told!