JUnit "test"

-

public void testXXX() {
    try {
        // try something
        System.out.println("Test passed");
    } catch (Exception e) {
        System.out.println("Test failed");
    }
}

For those not familiar with JUnit: exceptions are caught and not rethrown, and no assertions fail, so the test always passes!

-

Ah, "not clear on the concept." I've not used JUnit, but in TestNG, you have to add "throws Exception" to your test method. I assume the original author forgot to do this, and the IDE (I think you guys use Eclipse) offered to fix the error by adding either try/catch blocks or a throws clause.

"But it compiles, and the test report says it worked!!!"

-

Not quite. Yes, we use Eclipse, but this code isn't declared to throw an exception (our entire app only throws RuntimeExceptions, because the original developers didn't want to be bothered declaring throws x, y, z).

They should have done try/catch and called fail() in the catch block.

For those tests that are expected to throw exceptions, you are correct, you can use an annotation to tell JUnit that a particular exception is expected, and the lack thereof is a failure.

But they didn't do that. They just did: print pass/fail, so everything passed every time.
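To make the difference concrete, here is a minimal sketch in plain Java. It does not use JUnit itself: the `passes` helper is a hypothetical stand-in for a test runner, relying only on the behaviour described above (a test passes if the method returns normally and fails if anything is thrown). `codeUnderTest`, the three test methods, and the use of a thrown AssertionError in place of fail() are all assumptions made for illustration.

```java
// Hypothetical stand-in for a test runner; not JUnit's actual implementation.
class SwallowedExceptionDemo {

    interface TestCase {
        void run() throws Exception;
    }

    // Hypothetical code under test that is always broken.
    static void codeUnderTest() {
        throw new IllegalStateException("boom");
    }

    // The pattern from the original post: the exception is swallowed,
    // so the method returns normally no matter what happened.
    static void testSwallows() {
        try {
            codeUnderTest();
            System.out.println("Test passed");
        } catch (Exception e) {
            System.out.println("Test failed"); // only the console sees this
        }
    }

    // Catch and fail explicitly; the AssertionError stands in for fail().
    static void testCatchesAndFails() {
        try {
            codeUnderTest();
        } catch (Exception e) {
            throw new AssertionError("unexpected exception: " + e, e);
        }
    }

    // No try/catch at all: the exception simply propagates to the runner.
    static void testPropagates() {
        codeUnderTest();
    }

    // A test counts as passed only if it returns without throwing.
    static boolean passes(TestCase test) {
        try {
            test.run();
            return true;
        } catch (Throwable t) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("swallows: " + passes(SwallowedExceptionDemo::testSwallows));
        System.out.println("catches and fails: " + passes(SwallowedExceptionDemo::testCatchesAndFails));
        System.out.println("propagates: " + passes(SwallowedExceptionDemo::testPropagates));
    }
}
```

Under these assumptions only the swallowing version is reported as passing, even though the code under test throws every time.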

-

I could see this being a placeholder for a test to be implemented later.

Knowing your stories, though, this test has been in place for the last three years.

-

@Justice said:

I could see this being a placeholder for a test to be implemented later.

Knowing your stories, though, this test has been in place for the last three years.

This is an actual test. Either way, there is no reason to SWALLOW THE EXCEPTION AND REPORT SUCCESS (printing "failure" to the console will not report a failure to JUnit).

-

@Sutherlands said:

@Justice said:

I could see this being a placeholder for a test to be implemented later.

Knowing your stories, though, this test has been in place for the last three years.

This is an actual test. Either way, there is no reason to SWALLOW THE EXCEPTION AND REPORT SUCCESS (printing "failure" to the console will not report a failure to JUnit).

Srsly. If it's a placeholder it never should have been committed where other people could see it.

-

@morbiuswilters said:

@Sutherlands said:

@Justice said:

I could see this being a placeholder for a test to be implemented later.

Knowing your stories, though, this test has been in place for the last three years.

This is an actual test. Either way, there is no reason to SWALLOW THE EXCEPTION AND REPORT SUCCESS (printing "failure" to the console will not report a failure to JUnit).

Srsly. If it's a placeholder it never should have been committed where other people could see it.

Oh, I agree; I was taking a stab at how/why this would have ended up in the codebase, not trying to defend it. My fault for not making that clear.

Of course, the more likely explanation is that somebody just doesn't understand how JUnit works.

-

Disgusting. Why didn't they use System.err.println()?

-

Nice catch. In our product we got so fed up with this type of test that we finally resorted to class-path scanning for sysout/syserr usage and failing the build when any is found. We also add listeners to log4j at test time to detect non-suppressed error logging during tests. So now the console is clean during test runs. Works wonders, although we were met with scepticism at the start.
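For illustration only, here is a rough sketch of a related but simpler technique than the class-path scanning described above: redirecting System.out at run time and failing if the test body printed anything. The class and method names (`ConsoleGuard`, `captureStdout`, `assertSilent`) are made up for this example and are not from our build.

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;

// Hypothetical helper: a run-time check, not the class-path scan described above.
class ConsoleGuard {

    // Runs the body with System.out redirected and returns whatever it printed.
    static String captureStdout(Runnable body) {
        PrintStream original = System.out;
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        PrintStream capture = new PrintStream(buffer, true);
        System.setOut(capture);
        try {
            body.run();
        } finally {
            capture.flush();
            System.setOut(original); // always restore the real console
        }
        return buffer.toString();
    }

    // Throws AssertionError if the body wrote anything to System.out.
    static void assertSilent(Runnable body) {
        String printed = captureStdout(body);
        if (!printed.isEmpty()) {
            throw new AssertionError("test wrote to System.out: " + printed.trim());
        }
    }
}
```

With a guard like this, a test body that prints "Test passed" would be rejected for printing at all, whatever the message says.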

Nitpick: You don't need to catch and fail. If any exception propagates out of your test method, the test fails unless that exception is in the list of expected exceptions.

Btw, having only runtime exceptions is a good choice imo. Have you looked at old checked exception code like early versions of JBoss? Horrid. No wonder C# left them out. In fact, checked exceptions fail to solve the original problems that exceptions were supposed to solve: Being able to trigger a specific type of error and have it propagate up the stack to an error handler without the intermediate layers being aware of that specific type of error.

-

@Obfuscator said:

Nitpick: You don't need to catch and fail. If any exception propagates out of your test method, the test fails unless that exception is in the list of expected exceptions.

What, exactly, are you nitpicking?

-

@Obfuscator said:

Btw, having only runtime exceptions is a good choice imo. Have you looked at old checked exception code like early versions of JBoss? Horrid. No wonder C# left them out. In fact, checked exceptions fail to solve the original problems that exceptions were supposed to solve: Being able to trigger a specific type of error and have it propagate up the stack to an error handler without the intermediate layers being aware of that specific type of error.

I don't know, I'm no lover of Java, but I tend to think checked exceptions are good. One of the problems with runtime exceptions is that it's hard to know what a particular method is going to throw without inspecting the whole thing. Checked exceptions also guarantee that the programmer either catches or propagates, and at least is aware of the exceptions in the code they are using. I see a lot of code from dynamic languages and there is almost no exception handling because nothing makes it necessary.

-

I was nitpicking on snoofle's statement:

@snoofle said:

They should have done try/catch and called fail() in the catch block.

-

@morbiuswilters said:

I don't know, I'm no lover of Java, but I tend to think checked exceptions are good. One of the problems with runtime exceptions is that it's hard to know what a particular method is going to throw without inspecting the whole thing. Checked exceptions also guarantee that the programmer either catches or propagates, and at least is aware of the exceptions in the code they are using. I see a lot of code from dynamic languages and there is almost no exception handling because nothing makes it necessary.

Yeah, they are a pretty good idea in theory; they just have too many problems in practice. They spread a bit like const in C++ (infectious) and encourage using exceptions for normal program flow. But the main problem IMO is that even a fairly moderate-sized program has so many failure modes that the number of exceptions gets out of hand. Devs then solve this by grouping, so signatures like 'throws SQLException, FileNotFoundException, IOException' get replaced by 'throws MyAppException'. This is logical from a dev perspective, since it also doesn't leak your abstractions (do you really want to let your client know that you are using SQL?).

When you then write a lib which internally uses several other libs with checked exceptions, you repeat and group again. So your stack trace ends up like 'TopLibException caused by Lib1Exception caused by IOException: file not found'. This happened in JBoss. Eventually the top-level guys figured that grouping exceptions didn't add anything useful, and started to add 'throws Exception' to all methods (i.e. throws *any* type of exception). When you reach that point you might as well drop that declaration and use runtime exceptions instead.

Personally I try to avoid exceptions for program logic as much as possible and use Result classes with 'wasSuccessful'-type manual checks where requests have multiple outcomes. I'm quite alone in that choice, though, I feel, and several of my colleagues would choose checked exceptions in those situations. But I always feel that it's both hard and wrong to pass data with exceptions; they were never made for normal program flow and it shows.
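To show roughly what I mean by a Result class, here is a minimal sketch. Apart from the `wasSuccessful` check, everything in it (the `Result` shape, `parsePort`, the error strings) is invented for this example and is not taken from any real codebase.

```java
import java.util.Objects;

// Hypothetical Result type: carries either a value or an error message.
final class Result<T> {
    private final T value;
    private final String error;

    private Result(T value, String error) {
        this.value = value;
        this.error = error;
    }

    static <T> Result<T> success(T value) {
        return new Result<>(value, null);
    }

    static <T> Result<T> failure(String error) {
        return new Result<>(null, Objects.requireNonNull(error));
    }

    boolean wasSuccessful() {
        return error == null;
    }

    T value() {
        if (!wasSuccessful()) {
            throw new IllegalStateException("no value: " + error);
        }
        return value;
    }

    String error() {
        return error;
    }
}

class ResultDemo {
    // Hypothetical request with several expected outcomes, none of them exceptional.
    static Result<Integer> parsePort(String text) {
        try {
            int port = Integer.parseInt(text.trim());
            if (port < 1 || port > 65535) {
                return Result.failure("port out of range: " + port);
            }
            return Result.success(port);
        } catch (NumberFormatException e) {
            return Result.failure("not a number: " + text);
        }
    }

    public static void main(String[] args) {
        for (String input : new String[] {"8080", "99999", "http"}) {
            Result<Integer> r = parsePort(input);
            System.out.println(r.wasSuccessful() ? "port " + r.value() : "rejected: " + r.error());
        }
    }
}
```

The caller has to look at wasSuccessful() before touching the value, which is the manual check I mentioned; the one exception that does occur is caught at the boundary and turned into data.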

-

Sorry for all the typos, but I'm writing on my cell and my Android refuses to navigate the text field properly: can't get an overview and it keeps jumping back to last edit location when I scroll sideways. Worse than the double backspace problem!

-

@Obfuscator said:

But the main problem IMO is that even a fairly moderate-sized program has so many failure modes that the number of exceptions gets out of hand. Devs then solve this by grouping, so signatures like 'throws SQLException, FileNotFoundException, IOException' get replaced by 'throws MyAppException'. This is logical from a dev perspective, since it also doesn't leak your abstractions (do you really want to let your client know that you are using SQL?).

I can see how this is a problem in frameworks and 3rd party libraries, but in the code I've written I'm rarely going to be propagating SQLException or FileNotFoundException exceptions. Either the error is recoverable, in which case the software will try to remedy the error, or it is fatal, in which case I just log the exception and pass a more generic exception to be handled at the top-level (e.g. a SQLException or FileNotFoundException will often result in the server returning an HTTP 5xx code). It sounds like the problem is with how exceptions are being used and not the exceptions themselves.

@Obfuscator said:

Personally I try to avoid exceptions for program logic as much as possible and use Result classes with 'wasSuccessful'-type manual checks where requests have multiple outcomes. I'm quite alone in that choice, though, I feel, and several of my colleagues would choose checked exceptions in those situations.

I think return-type error checking is fine but usually prefer exceptions.

@Obfuscator said:

But I always feel that it's both hard and wrong to pass data with exceptions; they were never made for normal program flow and it shows.

I agree. Exceptions should never be used for flow control, which should be obvious. I basically just need 3 pieces of data with the exception: the type, the error string and the stack trace.

-

@snoofle said:

public void testXXX() {
    try {
        // try something
        System.out.println("Test passed");
    } catch (Exception e) {
        System.out.println("Test failed");
    }
}

For those not familiar with JUnit, exceptions are caught and not rethrown, and no assertions fail, so the test always passes!

I have no experience with testing in this sense, unfortunately. However, I read about it in a Python book once, and the author had a point that made me go "hmmm I wouldn't have thought of that". He said it was important to see your test fail, lest you accidentally write a test that can't fail, ever. So first, he says he writes a test that fails, and he knows it fails because he saw it fail in the test report, and then carefully mods it so it tests what he wants it to test. So I thought that was a good point and a pitfall I would have fallen into, if I ever got the chance to actually use test-driven programming at work.

-

@toon said:

I have no experience with testing in this sense, unfortunately. However, I read about it in a Python book once, and the author had a point that made me go "hmmm I wouldn't have thought of that". He said it was important to see your test fail, lest you accidentally write a test that can't fail, ever. So first, he says he writes a test that fails, and he knows it fails because he saw it fail in the test report, and then carefully mods it so it tests what he wants it to test. So I thought that was a good point and a pitfall I would have fallen into, if I ever got the chance to actually use test-driven programming at work.

Well, if you're using TDD, you write the test so that it tests what you want to add, it fails, then you update the code to make the test pass.

-

@Sutherlands said:

@toon said:

I have no experience with testing in this sense, unfortunately. However, I read about it in a Python book once, and the author had a point that made me go "hmmm I wouldn't have thought of that". He said it was important to see your test fail, lest you accidentally write a test that can't fail, ever. So first, he says he writes a test that fails, and he knows it fails because he saw it fail in the test report, and then carefully mods it so it tests what he wants it to test. So I thought that was a good point and a pitfall I would have fallen into, if I ever got the chance to actually use test-driven programming at work.

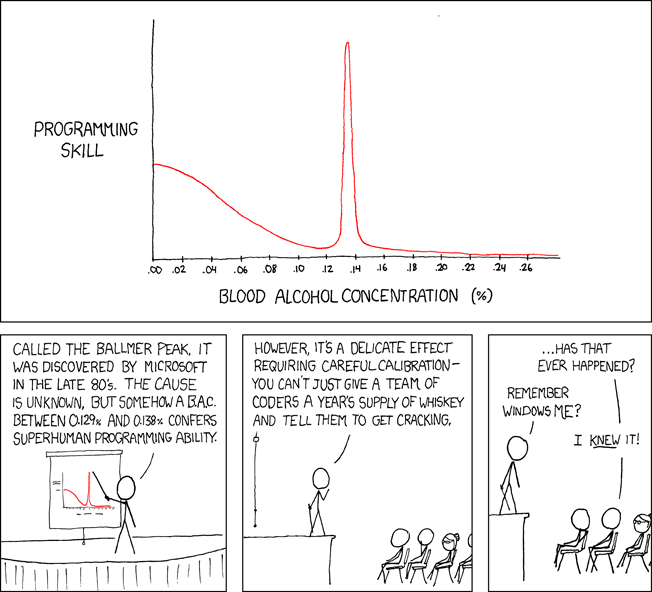

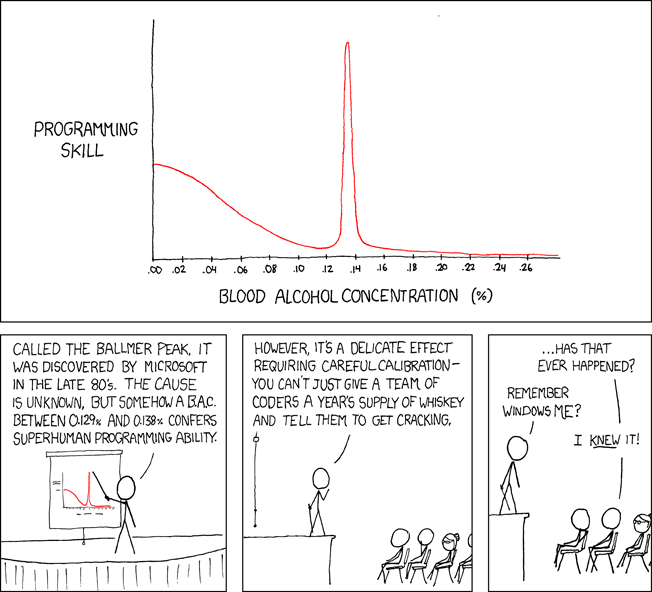

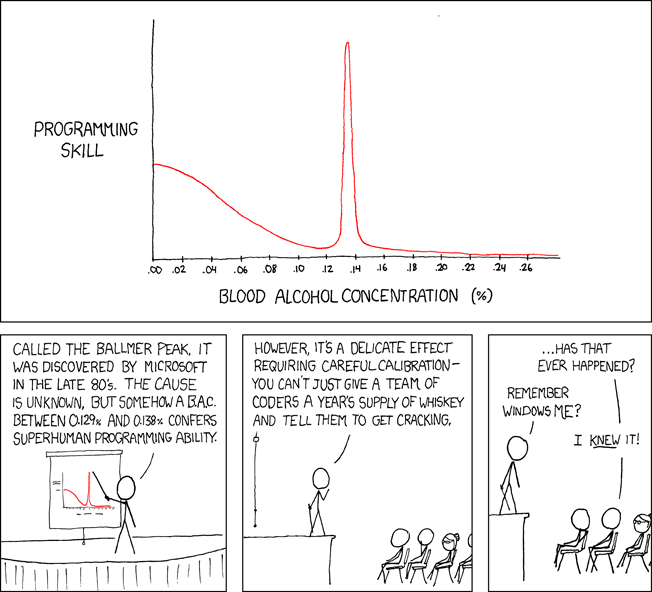

Well, if you're using TDD, you write the test so that it tests what you want to add, it fails, then you update the code to make the test pass.

You also spend most of your time writing and maintaining tests while watching your productivity fall off a cliff. Then somebody asks for a change and you take a lot longer doing it because you had to update a dozen tests. You also watch as you are unable to attract good developers because they don't want to spend all day writing tests to confirm that a function which adds two numbers, in fact, adds those two numbers. Eventually you turn to drinking until one night you exceed the AMA-recommended limit of 3 drinks in 24 hours, slip into an alcoholic coma and die.

-

@morbiuswilters said:

Eventually you turn to drinking until one night you exceed the AMA-recommended limit of 3 drinks in 24 hours, slip into an alcoholic coma and die.

ObRosie:

-

@morbiuswilters said:

@Obfuscator said:

But I always feel that it's both hard and wrong to pass data with exceptions; they were never made for normal program flow and it shows.

I agree. Exceptions should never be used for flow control, which should be obvious. I basically just need 3 pieces of data with the exception: the type, the error string and the stack trace.

A friend of mine once told me about a return-by-exception construct he had used in a variant class (I think it was just proof of concept, not serious code). It went something like this:

Variant var = get_some_value();
try {
    var.get_value();
} catch (int) {
    // it's an int
} catch (std::string) {
    // it's a string
} catch (Foo) {
    // it's a Foo
}
// etc.

-

@morbiuswilters said:

You also spend most of your time writing and maintaining tests while watching your productivity fall off a cliff. Then somebody asks for a change and you take a lot longer doing it because you had to update a dozen tests. You also watch as you are unable to attract good developers because they don't want to spend all day writing tests to confirm that a function which adds two numbers, in fact, adds those two numbers. Eventually you turn to drinking until one night you exceed the AMA-recommended limit of 3 drinks in 24 hours, slip into an alcoholic coma and die.

Firstly.. is it only coders that are writing tests? I'd have thought that function would have been delegated out to a testing group as part of creating the requirements.

Secondly, if you're spending all day writing tests for a small change, there's deeper issues than using TDD.

-

@Cassidy said:

Secondly, if you're spending all day writing tests for a small change, there's deeper issues than using TDD.

Actually, that's a common failure mode of Test Driven Development. The "big problem" with TDD is that it requires developers to "get it". It looks tempting as a magic procedure that can be enforced to increase code quality. In reality, it requires developers to know which tests to write and which not to write. So, developers that already have a good sense of testing - and therefore would have manually unit tested the right stuff - write good tests, and developers that don't have a good sense of testing spend way too much time or don't write the right tests at all. In the end, you get the same quality as before.

Having tests built as part of requirements guarantees that you will never test the system at a component level, and that the person creating the test doesn't have a sense for where a test is likely to be most necessary. That makes for less effective tests.

Where TDD shines is bug fixing. By writing a test that exposes a bug before fixing it, you get a regression test for a defect that is likely to actually happen.

-

I think the bigger problem with TDD is that it (if followed strictly) requires you to have the ability to break your code down into small test-able steps-- BEFORE you've written any code. I don't know about you guys, but I can't do that.

-

@blakeyrat said:

I think the bigger problem with TDD is that it (if followed strictly) requires you to have the ability to break your code down into small test-able steps-- BEFORE you've written any code. I don't know about you guys, but I can't do that.

I think that's one of the big benefits of TDD. Easily testable code is usually better designed and more reusable than difficult to test code. I've required some of my developers to follow TDD in the past just to encourage them to design the API up front.

-

@Jaime said:

Actually, that's a common failure mode of Test Driven Development. The "big problem" with ~~TDD~~ unit testing is that it requires developers to "get it". It looks tempting as a magic procedure that can be enforced to increase code quality. In reality, it requires developers to know which tests to write and which not to write.

FTFY. The thing about TDD is that it pretty much requires you to write a test for every function you are going to create; over-testing is baked in.

@Jaime said:

Where TDD shines is bug fixing. By writing a test that exposes a bug before fixing it, you get a regression test for a defect that is likely to actually happen.

I'm pretty sure that's recommended best practice for non-TDD testing as well. At least, I've always seen bug fixes done in that way.

-

@tdb said:

@morbiuswilters said:

@Obfuscator said:

But I always feel that it's both hard and wrong to pass data with exceptions; they were never made for normal program flow and it shows.

I agree. Exceptions should never be used for flow control, which should be obvious. I basically just need 3 pieces of data with the exception: the type, the error string and the stack trace.

A friend of mine once told me about a return-by-exception construct he had used in a variant class (I think it was just proof of concept, not serious code). It went something like this:

Variant var = get_some_value();
try {
    var.get_value();
} catch (int) {
    // it's an int
} catch (std::string) {
    // it's a string
} catch (Foo) {
    // it's a Foo
}
// etc.

This is a pattern a former coworker was fond of using:

Public Overridable Function Process() As Exception

And then, of course, check to make sure the function returned Nothing before continuing from where it was called.

-

@morbiuswilters said:

You also watch as you are unable to attract good developers because they don't want to spend all day writing tests to confirm that a function which adds two numbers, in fact, adds those two numbers.

You know what else is annoying? Writing programs that output "Hello World", all the bleedin' time.

-

@token_woman said:

You know what else is annoying? Writing programs that output "Hello World", all the bleedin' time.

Like, real-time? Or intervalled? Or only when called?

:> runreport.exe

hello world

:> grep /ass/butt

hello world

:> ping /t

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world

hello world^C

hello world

:>exit

hello world

:> sudo exit

hello universe

-

@boomzilla said:

@morbiuswilters said:

Eventually you turn to drinking until one night you exceed the AMA-recommended limit of 3 drinks in 24 hours, slip into an alcoholic coma and die.

ObRosie:

What is taking shadow mod so long?

-

@dtech said:

@boomzilla said:

@morbiuswilters said:

Eventually you turn to drinking until one night you exceed the AMA-recommended limit of 3 drinks in 24 hours, slip into an alcoholic coma and die.

ObRosie:

What is taking shadow mod so long?

Shadow Mod only comes when you least expect it.